A Mainstream Contributor To Sexual Exploitation

Known as the "dark web alternative"

Telegram unleashes a new era of exploitation. Messaging app Telegram serves as a safe haven for criminal communities across the globe. Sexual torture rings, sextortion gangs, deepfake bots, and more all thrive on an alarming scale.


Updated 12/6/24: Four months after the arrest of Telegram CEO Pavel Durov in France, Telegram has announced a partnership with the UK’s Internet Watch Foundation (IWF) to help combat child sexual abuse, which has run rampant on the platform. Telegram will now use IWF’s hashing technology to proactively detect and remove known child sexual abuse material (CSAM) shared in public parts of the site. The platform will also deploy tools to block non-photographic depictions of child sexual abuse, such as known AI-generated child sexual abuse imagery, and tools to block links to webpages known to host CSAM. This is a significant step for Telegram, which has previously refused to engage with such child protection schemes. While NCOSE applauds this first step toward preventing child sexual abuse on the platform, Telegram must also do more to combat the grooming of children and image-based sexual abuse. With nearly 30 million downloads of Telegram in the U.S. in 2023, Telegram should also voluntarily report CSAM to NCMEC, which it has never done.

Updated 10/15/24: Telegram CEO Pavel Durov was arrested in France in August 2024 as part of a larger investigation into “complicity” in illegal transactions and possessing and distributing child sexual abuse material (CSAM). Telegram also apologized to South Korean authorities for its handling of deepfake pornography shared on the platform by rings at two major universities after police launched an investigation accusing Telegram of “abetting” distribution of this content. Following his arrest, Durov announced that Telegram had removed “problematic content” and updated its terms of service to make it clear that Telegram would share IP addresses and phone numbers with law enforcement in response to valid legal requests. NCOSE renews its call for the U.S. Department of Justice to investigate Telegram.

Messaging app Telegram, increasingly referred to as “the new dark web,” facilitates multiple forms of image-based sexual abuse – including deepfake pornography, nonconsensual distribution of sexually explicit material (sometimes called “revenge pornography”), and sexual extortion (“sextortion”). The platform is also rampant with child sexual abuse material (CSAM) and grooming of minors. Telegram’s glaringly inadequate and/or nonexistent policies, coupled with adamant refusal to moderate content, directly facilitate and exacerbate sexual abuse and exploitation on the platform.

Since its inception, Telegram has served as an epicenter of extremist activities, fostering a thriving ecosystem for the most egregious crimes, committed by vast networks of perpetrators. Telegram allows and rapidly spreads every form of sexual abuse and exploitation (and countless other illegal activities), profiting from some of the most heinous acts imaginable. Telegram has been a primary source and multiplier for the following:

- Sadistic torture and sextortion rings operated by pedophiles, and even children

- Pedophiles connecting to view, trade, and sell child sexual abuse material (CSAM)

- Image-based sexual abuse creation and dissemination, including “revenge pornography” networks and “nudifying” technology

- Deepfake pornography development and monetization – including of minors

- Sex trafficking (including of children) and prostitution

- Children having sexual interactions online, including with adults

- Selling of “date rape” drugs

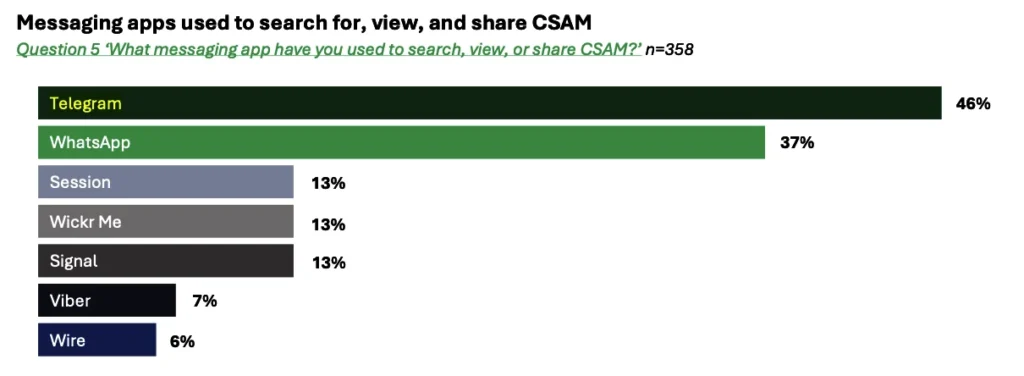

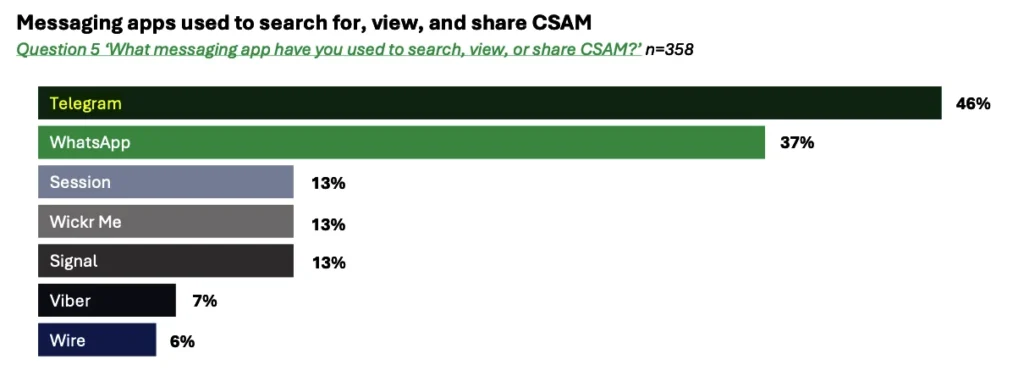

In a February 2024 study, Tech Platforms Used by Online Child Sexual Abuse Offenders, almost half of the offender respondents named Telegram as the messaging app they used to “search for, view, and share CSAM,” making it the most popular app for this purpose (46% Telegram, 37% WhatsApp).

Telegram is clearly aware of the extent of the crimes taking place on the platform. The app has been banned in more than a dozen countries. Law enforcement agencies, nonprofit organizations, cybersecurity analysts, and investigative journalists have been sounding the alarm about Telegram for years.

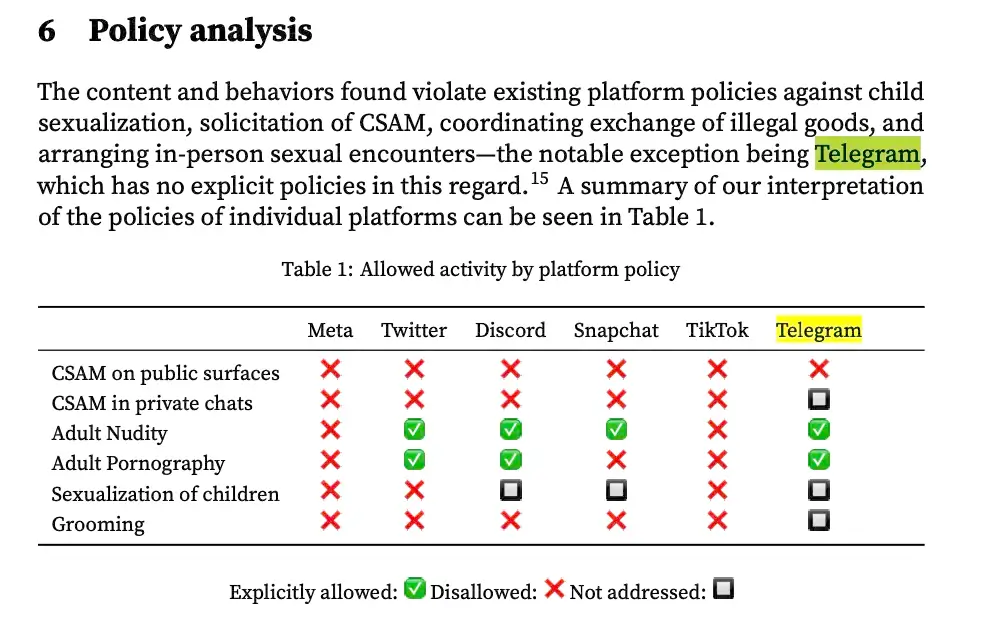

Telegram’s very design seems built to invite and protect criminals and predators, affording them a low-risk, high-reward environment in which to operate without fear of consequences. The distributed infrastructure, end-to-end encryption for one-to-one calls and self-destructing “Secret Chats,” lack of age or consent verification, reliance on user reports and administrator oversight, vague classification of “private” versus “public” content, and astonishing lack of content moderation (or even policies) provide the perfect environment for widespread exploitation. The Stanford Internet Observatory even concluded in a June 2023 report that Telegram implicitly allows the trading of CSAM in private channels (as there is no explicit policy against CSAM in private chats, and no policy at all against the sexualization of children or grooming) and does not scan for known CSAM, as researchers found the illegal content being traded openly in public groups.

Telegram’s channels are designed for broadcasting to unlimited audiences (some contain tens of millions of members), and groups can have up to 200,000 members (and send and receive files of any type up to 2GB), which allows abuse and exploitation to be shared rapidly and at scale. Telegram is one of the ten most popular apps in the world, with over 1 billion downloads from the Google Play Store; it is the fourth most popular social media app in the Apple App Store and reaches an average of 800 million monthly users. What happens on Telegram affects millions of people: not only Telegram’s own users, but also the untold numbers of people being abused and exploited on and through the app without their knowledge, and the countless families and communities devastated by the crimes the app enables.

Our Requests for Improvement


Normally, the National Center on Sexual Exploitation offers recommendations for improvement and invites constructive dialogue to develop solutions for making a platform free from sexual abuse and exploitation. However, we have no confidence that Telegram cares to engage with any entity about safety matters, nor has it shown any substantive effort to date to stem the rampant crime on the platform.

Telegram is so dangerous and such a threat to so many people that we believe it should be shut down. Note: Since the launch of the 2024 Dirty Dozen List, Telegram has made changes to clarify the platform will share IP addresses and phone numbers in response to valid legal requests, and has partnered with the Internet Watch Foundation (IWF) to proactively detect and remove CSAM.

An Ecosystem of Exploitation: Grooming, Predation and CSAM

The core points of this story are real, but the names have been changed and creative license has been taken in relating the events from the survivor’s perspective.

Proof

Evidence of Exploitation

The instances of criminal activity and sexual exploitation of women and children outlined in the proof sections below barely scratch the surface of these problems and do not capture the magnitude of the serious harm Telegram inflicts on individuals, families, communities, and countries. NCOSE is committed to ensuring that all authorities with any power to hold Telegram accountable, in the United States or abroad, are aware of the scope and scale of the devastation Telegram perpetuates.

STRONG TRIGGER WARNING: Graphic text descriptions of extremely horrific and unusual crimes against children, as well as several mentions of suicide. The content found on Telegram is so horrific that the following descriptions are likely to be troubling even to those who are accustomed to reading about sexual exploitation issues.

Telegram is Teeming with Multiple Forms of Image-Based Sexual Abuse (IBSA)

Image-based sexual abuse (IBSA) is the creating, threatening to share, sharing, or using of recordings (still images or videos) of sexually explicit or sexualized materials without the consent of the person depicted. Often, IBSA includes the use of sexually explicit or sexualized materials to groom or extort a person, or to advertise commercial sexual abuse. This umbrella term encompasses several iterations of sexual exploitation, including but not limited to recorded rape or sex trafficking, non-consensually shared/recorded sexually explicit content (sometimes called “revenge porn”), the creation of photoshopped/artificial pornography (including nonconsensual “deepfake” pornography), and more.

AI-generated Pornography

One of the fastest-growing forms of image-based sexual abuse is the creation and distribution of AI-generated pornography, sometimes referred to as synthetic sexually explicit material (SSEM), which encompasses deepfake pornography, “nudifying” technology, AI-generated pornography of adults, etc. While awareness of this type of abuse is only now coming to the fore, it has long been a problem on Telegram.

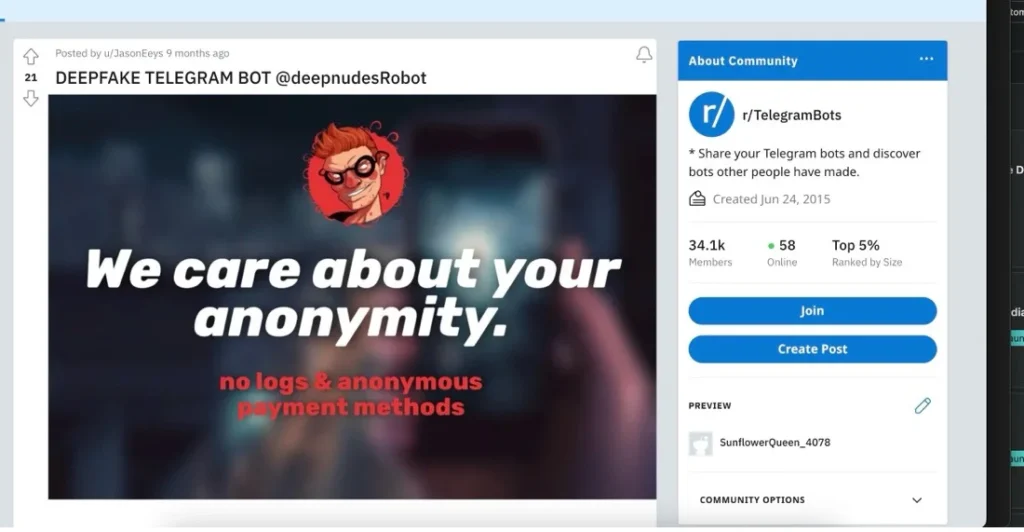

- In 2020, Sensity AI, an Amsterdam-based deepfake detection and AI-generated image recognition company, identified a deepfake ecosystem on Telegram whose focal point was an AI-powered “nudifying” bot that allowed users to ‘strip’ women and girls of their clothing. The ecosystem consisted of seven channels, at least 100,000 members, and more than 45,000 unique members across the channels. From April 2020 to July 2020, “nudified” images within the ecosystem grew by 198%, with over 104,800 women and girls publicly “nudified” on Telegram. This ecosystem also marked a significant shift from celebrities to private individuals: 70% of users self-reported that they used the bot to “strip” private individuals (women and girls they knew in real life or found on Instagram). Researchers found that both adult women and underage girls had been victimized. Despite Telegram’s knowledge of this ecosystem, SSEM groups continued to exist on the platform.

- In 2021, the Taiwanese YouTuber “Xiao Yu” was arrested for operating a Telegram network of four groups, with 8,000 users, dedicated to selling, profiting from, and circulating “deepfake” pornography videos of famous Taiwanese women. Users were required to pay an ‘entrance fee’ of between 100 NT (~$3 USD) and 400 NT (~$12 USD) to join, generating at least 2,779,600 NT (~$90,000 USD) in income.

Despite being aware of these problems for roughly four years now, Telegram has refused to take any substantive action to prevent future harm, resulting in the widespread adoption of sexually exploitative technology by at least a million users across hundreds of Telegram channels.

In 2023 alone, there was a significant rise in “nudification” bots globally, with Telegram playing a major role in this rapid growth. In A Revealing Picture: AI-Generated ‘Undressing’ Images Move from Niche Pornography Discussion Forums to a Scaled and Monetized Online Business, researchers note that Telegram is often where SSEM technology is created and disseminated:

Like countless other businesses, synthetic NCII providers use social media platforms to market their services and drive web traffic to affiliate links. The point of service, however, where the images are generated and sold, usually takes place on the provider’s website or messaging services such as Telegram and Discord.

Mainstream platforms predominantly serve as marketing points where synthetic NCII providers can advertise their capabilities and build an audience of interested users. The bulk of these actors’ activity appears to be focused on directing potential customers to off-platform spaces, such as their own websites, Telegram groups used to access their services, or mobile stores to download an affiliated app.

Researchers also found that the number of links advertising undressing apps increased more than 2,400% across social media platforms like Reddit and X in 2023, and that a million users belonged to 52 synthetic sexually explicit material (deepfake pornography) groups on Telegram in the month of September 2023 alone.

Telegram was also at the center of a story that captured attention worldwide when more than 30 middle-school-aged girls in Spain were “undressed” by male classmates using “nudify” apps found on Telegram. The images were also shared on Telegram, and at least one girl was sexually extorted. [Note: because the victims are minors, this material should be classified as child sexual abuse material.]

An NCOSE researcher found more than 73 “nudification” bots on Telegram in one day through minimal searching (evidence provided upon request). Most of these were advertised through channels and contained links to groups where the bots were accessible. These apps, bots, and services are now routinely monetized through token systems, ads, or subscription tiers ranging from $0.99 to $300+, with payments running through mainstream payment processors (PayPal, Stripe, Coinbase, etc.).

Deeply concerning is the symbiotic relationship between Telegram and other social media and messaging platforms like Instagram, Facebook, X, Reddit, and Discord, with many of these platforms used extensively by children. For example, NCOSE researchers found an entire Discord server dedicated to “nudifying” bots, with nude images in profile pictures and an event tagged “dark web of teens and porn today!!” that listed bots for “nudifying” teens and women and “cp” (short for child pornography)—all linking to Telegram.

Telegram affords anonymity, privacy, and community to dangerous users but does not grant any protections or privacy to victims.

The question remains: when will Telegram care about victims more than perpetrators?

Non-Consensual Dissemination of Sexually Explicit Material (NCDSEM)

In addition to AI-created sexually exploitative material, many other forms of IBSA thrive on Telegram. The anonymity, weak regulation, and community fostered on Telegram normalizes this behavior and allows it to proliferate, with specific channels and groups created for:

- The sole purpose of sharing IBSA, often explicitly stating this purpose in the chat box. The only rule in these groups is often “sending pictures of (ex) girlfriends, friends, or acquaintances.” Researchers were kicked out of three groups for failing to comply with this rule.

- “Mainstream” pornography that includes IBSA, thereby normalizing IBSA as ordinary pornography.

- “Spy mode” for sharing content of girls and women captured with hidden cameras in showers or “upskirting.”

- “Linking” to share sexually explicit images taken from various social media sites, particularly Instagram and Facebook. These images were consensually taken and posted on social media by the victims and then non-consensually screenshotted and posted on Telegram, highlighting the problem of cross-platform IBSA.

- Automated IBSA: bots created to gather, store, and share IBSA and the personal information of women and girls, automating the dissemination of IBSA among channels and groups.

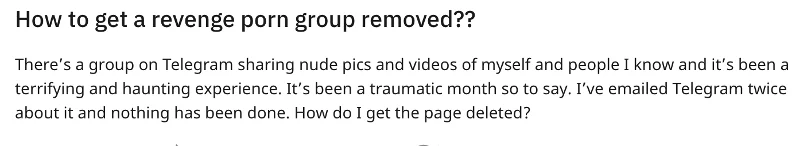

Multiple survivors have spoken out about how past romantic partners have non-consensually shared explicit materials of them (sometimes called “revenge porn”) through Telegram groups with hundreds of members.

Some survivors have taken to posting on social media in desperation because Telegram has failed to help them remove IBSA. See the post below taken from Reddit [survivor identifying information redacted]:

Even IBSA of sex trafficking victims, which also rises to the level of CSAM in the cases of those who were minors at the time, has been facilitated via Telegram:

Cho Joo-bin, a 24-year-old man, hosted online rooms on encrypted messaging app Telegram, where users paid to see young girls perform demeaning sexual acts carried out under coercion, according to South Korean police. As many as 74 victims were blackmailed by Cho into uploading images onto the group chats, and some of the users paid for access, police said. Officials suspect there are about 260,000 participants across Cho’s chat rooms. At least 16 of the girls were minors, according to officials.

This case unequivocally demonstrates Telegram as a flagrant sanctuary for illicit, exploitative, and predatory activities, precipitating the alarming proliferation of private, egregious criminal networks. It underscores the urgent need for decisive action to address and dismantle these pervasive and perpetual occurrences.

Sexual Extortion (or “Sextortion”)

Sexual extortion, or sextortion, is commonplace on Telegram. While it is also a form of image-based sexual abuse, it entails the added element of blackmail.

Sextortion is not only prevalent on Telegram, but the platform often serves as a go-to secondary location to which perpetrators lure victims in order to obtain sexually explicit material. According to the United States Federal Trade Commission, about 40% of 2022 sexual extortion cases contained reports with detailed narratives (2,000+ characters in length) mentioning WhatsApp, Google Chat, or Telegram.

NCOSE researchers found over 100 posts self-reporting cases of sextortion on Telegram in the subreddit r/sextortion within a six-month period in 2023. Victims often described similar experiences: they met someone on Instagram or a dating site, began exchanging images or discussing doing so, and were soon led to continue this activity on Telegram because of its anonymity and lack of content moderation. Perpetrators would then extort victims, threatening to release the images to friends, family, and co-workers unless the victim sent money, often citing personal information they had found about the victims. Payments could also be sent through Telegram. NCOSE’s findings and other recent research reports make clear that sextortion is pervasive on Telegram.

Child Sexual Abuse Material Facilitated On and Through Telegram

Updated 7/2/24: On June 28, 2024, a pornography actor was arrested and charged with sending and receiving hundreds of child sexual abuse material (CSAM) videos via Telegram. The press release announcing the arrest said those CSAM videos depicted children as young as infants, including a video showing a 10-year-old child bound and raped.


Child sexual abuse material is regularly shared or sold on Telegram.

In a February 2024 study, Tech Platforms Used by Online Child Sexual Abuse Offenders, Telegram was noted as the most popular messaging app used to “search for, view, and share CSAM” by almost half of the offender respondents (46% Telegram, 37% WhatsApp).

The Stanford Internet Observatory even concluded in a June 2023 report that Telegram implicitly allows the trading of CSAM in private channels (as there is no explicit policy against CSAM in private chats, and no policy at all against the sexualization of children or grooming) and does not scan for known CSAM, as researchers found the illegal content being traded openly in public groups.

A 2022 study on pedophilic activity on Telegram found groups sharing and selling CSAM in which all content was openly accessible in front-facing groups and hundreds of videos had been uploaded. Out of more than 15,200 messages, researchers identified 53 Telegram invite links, 407 active third-party links, 2,424 messages engaging with the term “CP,” over 1,170 links to the file-sharing service Mega.nz containing anywhere between 1.5GB and 100GB of CSAM, and hundreds of CSAM videos, all of which were openly accessible within the groups. Most videos were 30 seconds long, and the average number of views per piece of content was 3,100. On average, identified victims were aged 10 to 15 years; 66% were female and 34% were male.

Telegram Hosting Vile, Satanic Pedophile Extortionist Cult *Trigger Warning*

In September 2023, the FBI issued a warning about a satanic pedophilic cult that operated under various aliases on messaging platforms, used Telegram as its main means of communication, and targeted minors to extort them into recording or livestreaming acts of self-harm, suicide, sexually explicit content, sexual abuse of siblings, animal torture, and even murder. Members gained access by sharing such videos and livestreams of the minors they had coerced, as well as videos of adults abusing minors.

According to the FBI warning, the group appeared to target minors between the ages of “8 and 17 years old, especially LGBTQ+ youth, racial minorities, and those who struggle with a variety of mental health issues, such as depression and suicidal ideation.” Hundreds of minors were victimized by the group.

Forced self-harm included cutting, stabbing, or “fansigning” (writing or cutting specific numbers, letters, symbols, or names onto one’s body). Members of the group used extortion and blackmail to instill extreme fear in victims, threatening to share sexually explicit videos or photos of the minor victims with their family and friends or to post them on the internet. The end goal was to compel the minors to die by suicide on livestream for the members’ entertainment or to increase the members’ “status” within the cult.

Offshoot groups from the pedophilic extortion group also proliferated on Telegram, Discord, and the now-defunct site Omegle, one of which also engaged in sex trafficking. A legal affidavit in one case detailed images of the abuse of prepubescent girls found in the offender’s possession that are so graphic and horrifying that we have chosen not to describe them.

A media consortium investigating these groups collected and analyzed 3 million Telegram messages, examined court and police records from multiple countries, and interviewed researchers, law enforcement, and seven victims or their families. While this case has been covered by multiple media outlets in the past few months, there is no indication that Telegram has taken any action other than removing some channels when reporters have raised them. The latest news story regarding these cases, “On popular online platforms, predatory groups coerce children into self-harm,” published in The Washington Post on March 14, noted, “After reporters sought comment, Telegram shut down dozens of groups the consortium identified as communication hubs for the network.”

Children are being tortured and are dying on and because of Telegram.

Telegram knowingly allows CSAM, sex trafficking, and pedophilic activity to thrive on its platform. Below are details of various cases in 2023:

- In November 2023, a 17-year-old boy in Taiwan was arrested for sex trafficking two teenage girls on Telegram. According to reports, he collected commissions from the girls in exchange for advertising their sexual services in a Telegram chat group and arranging for them to meet sex buyers.

- In August 2023, a former FBI contractor was indicted for allegedly contacting roughly a dozen minor boys over Discord and Snapchat and purchasing hundreds of videos and images of CSAM from Telegram.

- In July 2023, CSAM sourced from hundreds of hacked Hikvision cameras was found being sold on Telegram. Videos were advertised using keyword terms “like ‘cp’ (child porn), ‘kids room,’ ‘family room,’ and ‘bedroom of a young girl’ to entice potential buyers.” These channels, since removed, had thousands of members.

- In July 2023, a 35-year-old Herndon, VA man was sentenced to 25 years in prison for having groomed a 15-year-old girl to produce CSAM of herself that he then distributed to other offenders over Telegram. According to court documents, the offender’s devices revealed over 20,000 images and 500 videos of CSAM, including 486 known series with identified victims.

- In July 2023, a 58-year-old Danish man was arrested after traveling from Denmark to Fresno to sexually exploit a minor girl. The perpetrator had contacted an undercover agent, who had created a profile posing as a mother with a seven-year-old daughter, on a dark web site dedicated to people interested in pedophilia. Over the next few months, the perpetrator sent messages to the undercover agent through the website’s messaging feature, as well as through Telegram, describing in detail the sexual acts he wanted to engage in with the mother and child. He also sent images of adults sexually abusing young children and discussed having another child with the mother and molesting the newborn.

- In June 2023, a mother sued the parent companies of both Scruff, an LGBTQ+ dating app, and Telegram, accusing them “of gross negligence, design defect, intentional infliction of emotional distress, and human trafficking violations — among other claims.” Her four-year-old daughter was groomed and sexually exploited by two men, whose discussions and sharing of CSAM of the child took place on the two apps.

- In January 2023, a 24-year-old East Bay man was sentenced to 10 years (120 months) in prison for selling CSAM on Telegram in exchange for money or gift cards. The perpetrator operated numerous Instagram accounts that were linked to one another and to his Telegram account, which he used to post CSAM, offer access to Telegram groups, and then provide CSAM in exchange for money.

Unfortunately, there have been many more cases than those described above.

Lack of or Inadequate Policies and Refusal to Moderate Content

As a Carnegie Endowment for International Peace September 2023 article noted: “Telegram is particularly notorious for its light-touch content moderation policy, which prohibits calls for violence but does not police closed groups or ban hateful or doxing posts that call for action implicitly rather than explicitly.”

As noted multiple times above, Telegram lacks even the most basic policies preventing illegal content and activity, and researchers have noted that it likely does not even use existing technology to detect known CSAM. Telegram has yet to make any confirmed reports to NCMEC’s CyberTipline. Instead, it reports on child abuse in a Telegram channel titled “Stop Child Abuse,” which lists only aggregate counts of banned groups and channels rather than individual instances (images).

In 2023 alone, Telegram banned an average of 49.7K channels and groups per month related to child abuse.

But this is no reason to praise Telegram. This number is terrifying in its sheer volume and speaks more to how pervasive CSAM is on the platform rather than being any indicator of proactive action on Telegram’s part. Even more disturbing is the fact that this massive tally represents entire channels and does not reflect the number of individual instances of CSAM that were found or reported on Telegram. It’s also important to note that because Telegram does not implement any proactive moderation or detection tools, and refuses to moderate anything except publicly accessible content, these reports are primarily from individual users. Furthermore, it is unclear if Telegram ever reports instances of CSAM to law enforcement or proper authorities. We know that Telegram does not report to NCMEC.

Telegram claims to remove illegal imagery within 10-12 hours, against a permissible limit of 24 hours, stating, “We take immediate and stringent action as prescribed by the law of the land in response to any such violations. When CP/CSAM/RGR content is reported, we initiate prompt actions to remove the offending material. Our average response time for removal is 10-12 hours, well within the permissible time limit of 24 hours as stipulated by the aforementioned regulations.” Yet NCOSE researchers reported a “nudifying” bot depicting suspected CSAM to Telegram, NCMEC, and IWF in early November 2023, and the bot was still active as of March 2024.

Telegram’s policies state that it only processes requests for “publicly available” content like “sticker sets, channels, and bots,” and note that all chats and groups are private amongst their participants (i.e., Telegram makes it clear it will not accept responsibility for content on the platform). Telegram’s recent “banning” of Hamas-owned channels to comply with Google Play Store regulations suggests that Telegram responds to dangerous situations only when the platform itself is threatened, such as with removal from the app stores. Even bans are ineffective, because when channels are banned, the ‘banned’ members easily create another channel and continue to engage in abusive and harmful activity.

Fast Facts

- Apple App Store rating: 17+
- Google Play Store rating: M (Mature 17+)
- Common Sense Media rating: 17+
- Bark rating: 17+, 1/5 stars
- Telegram’s policy: Telegram is for everyone who wants fast and reliable messaging and calls (EU/UK citizens must be 16+)

Telegram is tied with Omegle (28%) as the #1 platform where minor users are most likely to report experiencing an online sexual interaction, followed by Kik (24%), Snapchat (21%), Facebook (19%), and Twitter (19%)

Minor users of Telegram reported a notable (+8%) increase in online sexual interactions with someone they believed to be an adult from 2021 to 2022.

Telegram (46%) noted as the most popular messaging app used by CSAM offenders to search for, view, or share CSAM, followed by WhatsApp (37%)

Telegram (45%) identified as the top messaging app used by CSAM offenders to attempt to establish contact with a child, followed by WhatsApp (41%)

Recommended Reading

Stay up-to-date with the latest news and additional resources

Telegram founder's arrest is part of a larger investigation into alleged 'complicity' in child exploitation and drug trafficking

Adult Film Actor Justin Heath Smith, a/k/a “Austin Wolf,” Charged With Distribution Of Child Pornography

eSafety Commissioner Julie Inman Grant issues legal notices to Meta, Google, WhatsApp, Telegram, Reddit and X

On popular online platforms, predatory groups coerce children into self-harm

A Revealing Picture: AI-Generated ‘Undressing’ Images Move from Niche Pornography Discussion Forums to a Scaled and Monetized Online Business

Tech Platforms Used by Online Child Sexual Abuse Offenders: Research Report with Actionable Recommendations for the Tech Industry

The Use of Telegram for Non-Consensual Dissemination of Intimate Images: Gendered Affordances and the Construction of Masculinities


Resources

Violent Online Groups Extort Minors to Self-Harm and Produce Child Sexual Abuse Material

- Is someone sharing explicit images of you (either real or fake) without your consent? Get help from the Cyber Civil Rights Initiative

- Report child sexual exploitation to NCMEC