A Mainstream Contributor To Sexual Exploitation

“Moving fast” and making money at all costs leaves children to pay the price

Meta’s rollouts of end-to-end encryption, open-source AI, and virtual reality are unleashing new worlds of exploitation, while its brands continue to rank among the most dangerous for kids.


Updated 10/11/24: Ahead of the House Energy and Commerce Committee’s markup of the Kids Online Safety Act (KOSA) in September 2024, Meta announced new Instagram Teen Accounts with default safety settings, which NCOSE asked for when we placed Meta on the 2024 Dirty Dozen List, including:

- Private Accounts: Teen accounts will automatically be set to private so that the teen must approve new followers and people who don’t follow them can’t see their content or interact with them.

- Restricted Messaging & Interactions: Teens can only be messaged, tagged, or mentioned by people they follow or are already connected to.

- Sensitive Content Restrictions: Teen accounts will automatically be set to the highest content restrictions, to limit their exposure to inappropriate content.

And teens under 16 will need parental permission to change their privacy and safety settings! You can read more about this policy change in our blog and press statement.

Updated 7/12/24: A new report from Thorn and NCMEC released in June 2024, Trends in Financial Sextortion: An Investigation of Sextortion Reports in NCMEC CyberTipline Data, analyzed more than 15 million sextortion reports to NCMEC between 2020 and 2023. The report found:

- Instagram (60%) is the #1 platform where perpetrators threatened to distribute sextortion images, followed by Facebook (33.7%).

- Instagram (81.3%) is the #1 platform where perpetrators actually distributed sextortion images out of reports that confirmed dissemination of the images on specific platforms, followed by Snapchat (16.7%) and Facebook (7.8%).

- Instagram (45.1%) is the #1 platform where perpetrators initially contacted victims for sextortion, followed by Snapchat (31.6%) and Facebook (7.1%).

- Of reports that mentioned a “secondary location” where victims were moved from one platform to another, 14% named WhatsApp, 6.6% named Instagram, and 2.9% named Facebook.

- Over the last two years, Facebook’s and Instagram’s sextortion reports to NCMEC have shifted from being submitted within days of an incident to having a median delay of over a month between the sextortion event and the report.

Meta is one of the world’s most dangerous places for a child to “play.”

“May contain images of child sex abuse.” This was a warning pop-up that Meta’s Instagram surfaced for certain search results, with further explanation that this type of content is illegal.

Incomprehensibly, this message also included a button to “see results anyway.”

As unbelievable as it may seem, this was an actual option offered by Meta: for users to view content that its tools were able to flag as likely to contain some of the most egregious images imaginable.

This decision by Meta – which unleashed the fury of US senators onto Meta CEO Mark Zuckerberg during a Congressional hearing about tech platforms and child sex abuse – paints a stark picture of Meta’s negligent, profit-first approach to child online safety.

Meta’s platforms (Facebook, Messenger, Instagram, and WhatsApp) have for years consistently ranked as top hotspots for a host of crimes and harms: pedophile networks sharing child sex abuse material (CSAM), first contact between CSAM offenders and children, exploitative algorithms promoting children to adults, sex trafficking, sextortion, and image-based sexual abuse.

A flood of evidence from the past year alone (from whistleblowers, lawsuits, investigations, and Congressional hearings) has confirmed the suspicions of many: Mark Zuckerberg and Meta leadership have a very long track record of explicitly choosing not to rectify harms they know they are both causing and perpetuating on children, or of acting only when forced by public pressure, bad press, or Congressional hearings.

- Whistleblower Arturo Bejar was alerted by his own 14-year-old daughter that she and her friends were constantly being solicited by older men. His research then found that one out of every eight kids under the age of 16 reported experiencing unwanted sexual advances on Instagram in the last seven days.

- A leading psychologist who advised Meta on suicide prevention and self-harm resigned from Meta’s Suicide and Self-Injury (SSI) expert panel, alleging that the tech giant is willfully neglecting harmful content on Instagram, disregarding expert recommendations, and prioritizing financial gain over saving lives.

- As many as 100,000 children every day were sexually harassed on Meta platforms in 2021, such as by receiving pictures of adult genitalia. Despite this horrific statistic, Meta didn’t implement default settings protecting teens from receiving DMs from people they didn’t follow or weren’t connected to until three years later, just one week before Meta was set to testify at a Senate hearing. And even then, Meta only implemented this default setting for ages 13-15. What about the older teens?

- Despite knowledge of multiple cases of 16-17-year-olds dying by suicide due to Instagram sextortion schemes, Meta refuses to provide 16-17-year-olds with the same life-saving features given to 13-15-year-olds, such as blocking messages from accounts they aren’t connected to and preventing all minors from seeing sexually explicit content in messages.

When a US Senator told Mark Zuckerberg and four other Big Tech CEOs, “You have blood on your hands,” he was not being hyperbolic.

Meta repeatedly claims to lead the industry in child online protection. Yet the evidence is overwhelming not only that its so-called “safety measures” are ineffective, but that Meta actually facilitates harm, such as by providing access to child sexual abuse material (CSAM) with the “see results anyway” button for potential CSAM, and through algorithms that connect pedophiles to each other and to children’s accounts. Meta not only connects children to predators; it is itself a perpetrator.

Yet even amidst revelation after revelation of known harms, instead of dedicating its seemingly limitless resources to substantive changes that would truly protect children (as well as adult users), Meta made the devastating decision to move to end-to-end encryption, effectively blinding itself to literally tens of millions of cases of child sex abuse and exploitation, image-based sexual abuse, and any other criminal activity.

To make matters even worse (which seems almost impossible), Meta’s decisions around AI technologies are facilitating the creation and dissemination of deepfake pornography, “nudifying” tools, and sexually explicit chat bots – some of which have been made to imitate minors. Ads and profiles for individuals and groups promoting these forms of abuse are commonplace across Meta platforms. And despite repeated stories of sexual assault and gang rape in Meta’s “Horizon Worlds” virtual reality game, Meta opened it up to teens 13-17 years old.

Since October 2023, Attorneys General in all 50 states have taken action to hold Meta accountable. Forty-two states have sued Meta, in 12 separate lawsuits, for perpetuating harm to minors, while Attorneys General from 26 states sent a joint letter demanding that Meta stop monetizing child exploitation. Congress has also called Meta to account for permitting and perpetuating harm to minor users, and dozens of child safety activists have decried Meta’s move to E2EE.

Meta released a slew of safety updates ahead of the Congressional hearing, another overdue and partial response to long-standing advocacy efforts. However, these updates remain insufficient to address the severe harms perpetuated by Meta’s platforms, particularly to the safety of children and vulnerable adults. This reactive approach, highlighted by instances like the Frances Haugen hearing and reports from reputable sources like the Stanford Internet Observatory, underscores Meta’s consistent pattern of minimal intervention despite internal research and external feedback.

For more information on Meta’s previous shortcomings regarding promised updates and safety features, see here.

Meta is a mega exploiter and must be held to account for the countless crimes and harms it continues to perpetuate unabated.

Our Requests for Improvement

- Implement client-side detection for CSAM for all end-to-end encrypted spaces across Meta platforms. Remove, ban, and report all accounts

- Revise the definition of "teen" to encompass all minors 13–17 and ensure all available privacy and safety features are defaulted to the highest level and apply to all teens 13–17 across all Meta platforms. (Note: NCOSE believes that Meta platforms have been proven so unsafe for children, they should be a 17+ app)

- Set all minor-aged accounts’ “followers” and “following” lists to always remain private, and give adults (18+) the option to keep their “followers” and “following” lists private, as on Facebook, to prevent sextortion and image-based sexual abuse.

- Ban and remove all nudifying bots, profiles, and ads within the Meta Ad Library and across all Meta platforms and products.

- Require all Meta AI products to undergo robust red teaming efforts with child safety experts and organizations to rigorously test and identify vulnerabilities in AI systems, ensuring they are resilient against misuse and effectively safeguard children's privacy and well-being.

An Ecosystem of Exploitation: Artificial Intelligence and Sexual Exploitation

This is a composite story, based on common survivor experiences. Real names and certain details have been changed to protect individual identities.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Child Sexual Exploitation and Abuse

Not only are Meta platforms consistently ranked among the worst for various types of child sex abuse and sexual exploitation, but an increasing number of whistleblower testimonies, unredacted lawsuits, and investigations have proven that Meta often knows the extensive harms its platforms, tools, and policies perpetuate, and decides to do nothing about them.

It is incomprehensible that Meta fails to protect 16- and 17-year-olds, as exemplified by the tragic experience of a father who lost his 17-year-old son to sextortion. Meta’s recent changes likely would not have prevented this tragedy, since the protections are offered only to 13–15-year-olds. Despite Meta’s apparent recognition of the harm caused by its products and policies, demonstrated by internal research and external insights, the company appears to act only when subjected to intense public scrutiny, such as the Frances Haugen hearing, reports from entities like the Stanford Internet Observatory, and critical articles from reputable sources like The Wall Street Journal. This reactive pattern indicates that Meta frequently opts for minimal interventions in response to major negative press rather than proactively addressing issues identified through ongoing research and external feedback.

Evidence of child sex abuse and exploitation on Meta platforms and products, including Facebook, Messenger, Instagram, WhatsApp, and Oculus Quest (‘Metaverse’):

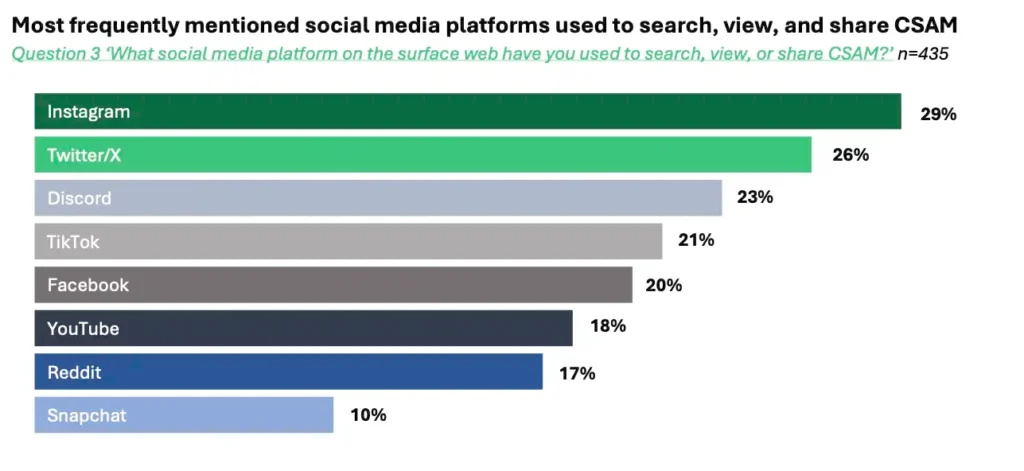

- Tech Platforms Used by Online Child Sex Abuse Offenders (Suojellaan Lapsia, Protect Children, February 2024):

  - Instagram is the #1 social media platform where CSAM offenders reported finding and sharing CSAM; Facebook is in the top 5.

  - WhatsApp is the #2 most popular messaging app used by offenders to find and share CSAM.

  - Instagram (#1) and Facebook (#2) are the top two social media platforms used by CSAM offenders to attempt to establish first contact with a child.

  - WhatsApp is the #2 most popular messaging app used by CSAM offenders to attempt to establish first contact with a child.

The image below illustrates the platforms most commonly utilized by CSAM offenders for searching, viewing, and sharing CSAM, based on data obtained from a self-reported survey conducted among CSAM offenders.

Captured from Tech Platforms Used by Online Child Sex Abuse Offenders, Suojellaan Lapsia, Protect Children, February 2024

- Instagram is the #2 platform for the highest rates of sextortion, just behind Snapchat (Snapchat, 42%; Instagram, 41%); roughly 80% of reports mention Instagram or Snapchat (Canadian Centre for Child Protection, November 2023).

- Instagram is the #2 parent-reported platform for sexually explicit requests to children, and Facebook is #1 (Parents Together report Afraid, Uncertain, and Overwhelmed: A Survey of Parents on Online Sexual Exploitation of Children, survey of 1,000 parents, April 2023).

- Instagram use correlated with higher rates of children sharing sexual images of themselves, as reported by parents; such images are a form of child sexual abuse material (Parents Together, April 2023).

- Instagram is the only platform ranked among the top 5 worst in every one of Bark’s Annual Report categories, for the second year in a row: severe sexual content, severe suicidal ideation, depression, body image concerns, severe bullying, hate speech, and severe violence (Bark 2023 Annual Report, which analyzed 5.6 billion activities on family accounts across texts, email, YouTube, and 30+ apps and social media platforms).

- WhatsApp’s response to CSAM is shockingly slow: it takes an average of 26-29 hours to act on reported CSAM content (Australia eSafety Commissioner, 2022).

- NCOSE researchers identified a disturbing trend of sextortion cases linked to WhatsApp, averaging almost one case per day in the last two weeks alone.

- Thorn Report, November 2023:

  - Facebook (9%), Instagram (8%), and Messenger (9%) are all among the top 5 platforms where minors reported having an online sexual interaction with someone they believed to be an adult (Thorn Report Findings, November 2023).

  - Meta-owned Facebook (13%), Instagram (13%), and Messenger (13%) tied for #2 among platforms where minors reported having an online sexual experience. Facebook is also among the top five platforms with the highest rates of online sexual interactions among minor users, tied with X at 19%.

  - Daily use of Meta platforms among minors who have shared their own self-generated CSAM (SG-CSAM): Facebook – 67%, Instagram – 74%, Messenger – 66%, WhatsApp – 50%.

  - Daily use of Meta platforms among minors who have reshared others’ SG-CSAM: Facebook – 53%, Messenger – 52%, Instagram – 58%, WhatsApp – 29%.

  - Daily use of Meta platforms among minors who have been shown nonconsensually reshared SG-CSAM: Facebook – 56%, Messenger – 54%, Instagram – 56%, WhatsApp – 35%.

Meta’s Oculus Quest and ‘Metaverse’

In 2022, NCOSE named Meta to the Dirty Dozen List in part because of the sexual abuse and exploitation occurring within Meta’s Metaverse through the Oculus Quest VR headset. One of the most concerning spaces in this “metaverse” was Horizon Worlds, then an 18+ game. NCOSE found growing evidence that many children are “playing” in an adult space with no protections for minors.

Horizon Worlds has been dangerous since its inception. During beta testing of Horizon Worlds in 2021, female testers reported instances of sexual assault and rape, one of which occurred even when the block feature was turned on. Another woman said that within sixty seconds of entering, she was virtually gang raped by four male avatars. Meta only implemented the “personal boundary” safety feature after its official launch and after reports of more women being sexually assaulted.

Additionally, on March 8, 2023, the Center for Countering Digital Hate published a report about bullying, sexual harassment of minors, and harmful content on Horizon Worlds.

Despite knowledge of these harms, in April 2023, Meta made Horizon Worlds available to young users between ages 13-17.

End-to-End Encryption (E2EE) Will Allow CSAM and Other Crimes to Thrive Undetected

Meta’s decision to implement end-to-end encryption was considered a devastating blow to global child protection efforts because of its potential to create an environment conducive to the proliferation of child sexual abuse material (CSAM) and online grooming. While E2EE is designed to protect user privacy, it also shields criminal activity from detection, including the sexual exploitation of children. By adopting E2EE despite being the largest reporter of CSAM (over 27 million reports to NCMEC in 2022), Meta positions itself as a protector of predators, privacy, and profit, not children. This approach endangers vulnerable individuals and severely undermines Meta’s stated commitment to, and responsibility for, online child safety.

With E2EE, message content is inaccessible to anyone other than the sender and recipients, including Meta itself, unless a user reports a message. Without the ability to monitor communications for such harmful behaviors, Meta may as well be rolling out the welcome mat for predators to operate without fear of detection or intervention.

Meta could implement content detection technology that works in E2EE areas of its platforms by scanning content only at the device level, placing detection mechanisms directly on users’ devices rather than on the platforms’ servers. By implementing E2EE across its platforms without such safeguards, Meta effectively closes the door on proactive measures to identify and prevent the circulation of CSAM, as well as the grooming of minors by predators.
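The device-level approach described above can be illustrated with a minimal Python sketch. This is a simplified illustration under stated assumptions, not Meta’s or anyone else’s actual system. It checks an attachment on the sender’s device, before the message is encrypted, against a list of fingerprints of known abuse images. The function names and the returned action labels are hypothetical. Real deployments use perceptual hashes such as PhotoDNA or Meta’s open-source PDQ. This sketch substitutes an exact SHA-256 match purely for simplicity.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    # Stand-in fingerprint: an exact SHA-256 digest. Real systems use perceptual
    # hashes (e.g. PhotoDNA or PDQ) that survive resizing and re-encoding.
    return hashlib.sha256(data).hexdigest()


def matches_known_content(attachment: bytes, known_fingerprints: set[str]) -> bool:
    # Compare the attachment's fingerprint against the on-device list of known images.
    return fingerprint(attachment) in known_fingerprints


def check_before_encrypting(attachment: bytes, known_fingerprints: set[str]) -> str:
    # Runs on the sender's device, on the plaintext, before any encryption happens.
    # The returned labels are hypothetical placeholders for whatever policy applies.
    if matches_known_content(attachment, known_fingerprints):
        return "block_and_report"
    return "encrypt_and_send"


# Usage: a fingerprint list of known images would be distributed to the device in advance.
known = {fingerprint(b"example known image bytes")}
print(check_before_encrypting(b"example known image bytes", known))  # block_and_report
print(check_before_encrypting(b"an ordinary photo", known))          # encrypt_and_send
```

The check runs on the plaintext before encryption, so the message can still travel end-to-end encrypted. What happens on a match is a policy decision, which this sketch leaves as a placeholder label.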

Refusing to implement viable detection technology, despite its potential to safeguard children from exploitation and abuse, is nothing short of negligent.

Meta made the move to E2EE despite internal research and communications showing that…

- Over one-quarter (28%) of minors on Instagram received message requests from adults they did not follow, indicating a significant risk of unwanted or potentially harmful contact (2020).

- In a July 2020 internal chat, one employee asked, “What specifically are we doing for child grooming (something I just heard about that is happening a lot on TikTok)?” The response from another employee was, “Somewhere between zero and negligible. Child safety is an explicit non-goal this half” (2020).

- Meta’s internal research found that the feature ‘People You May Know (PYMK)’ contributed to 75% of cases involving inappropriate adult-minor contact (2021).

- The majority of Instagram users under the age of 13 lie about their age to gain access to the platform, and Meta acknowledged the danger of relying on a user’s stated age: “[s]tated age only identifies 47% of minors on [Instagram]…” Moreover, “99% of disabled groomers do not state their age … The lack of stated age is strongly correlated to IIC [inappropriate interactions with children] violators.” (2021).

- One out of every eight users under the age of 16 reported experiencing unwanted sexual advances on Instagram in the last seven days (2021).

- Roughly 100,000 children per day experienced online sexual harassment, such as receiving pictures of adult genitalia (2021).

- Sexually explicit chats are 38 times more common on Instagram than on Facebook.

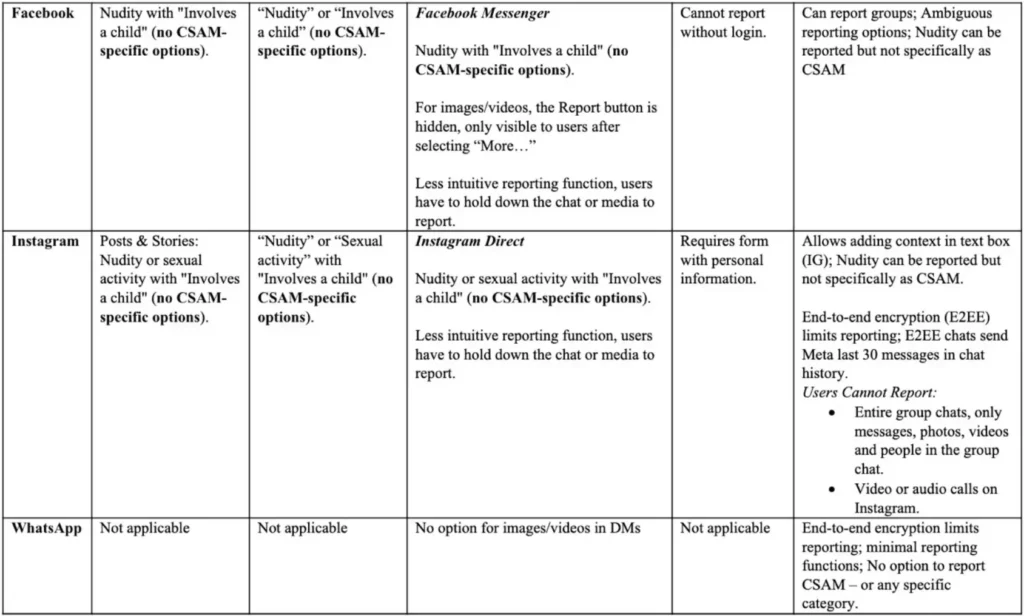

- Disappearing messages (encrypted secret chats) were listed as one of the “immediate product vulnerabilities” that could harm children, because of the difficulty of reporting disappearing videos and confirmation that safeguards available on Facebook were not always present on Instagram (2020). Instagram Direct and Facebook Messenger STILL do not have a clear-cut option to report CSAM or unwanted sexual messages.

NCOSE, along with numerous other child online safety organizations, strongly opposes Meta’s shift to end-to-end encryption (E2EE), as it effectively ends Meta’s ability to proactively detect and remove CSAM across all areas of its platforms. Below are just a few excerpts from statements by global child safety experts on Meta’s move to E2EE.

National Center for Missing and Exploited Children (NCMEC)

“Meta’s choice to implement end-to-end encryption (E2EE) on Messenger and Facebook with no ability to detect child sexual exploitation is a devastating blow to child protection…

With the default expansion of E2EE across Messenger, images of children being sexually exploited will continue to be distributed in the dark. While child victims are revictimized as images of their abuse circulate, their abusers and the people who trade the imagery will be protected.

NCMEC urges Meta to stop its roll-out until it develops the ability to detect, remove and report child sexual abuse imagery in their E2EE Messenger services.

We will never stop advocating for victims of child sexual exploitation. Every child deserves a safe childhood.”

National Center on Sexual Exploitation (NCOSE)

“By implementing end-to-end encryption, Meta has guaranteed that child sexual abuse cannot be investigated on its platforms. Meta has enabled, fostered, and profited from child exploitation for years, and continues to be an incredibly dangerous platform as recent evidence from several Wall Street Journal investigations, child safety organization data, and whistleblower testimonies have confirmed. Yet in face of these damning revelations, Meta has done the exact opposite of what it should do to combat child sexual exploitation on its platforms. Meta has effectively thrown up its hands, saying that child sexual abuse is not its problem,” said Dawn Hawkins, CEO, National Center on Sexual Exploitation.

“Meta’s ‘see no evil’ policy of E2EE without exceptions for child sexual abuse material places millions of children in grave danger. Pedophiles and predators around the globe are doubtlessly celebrating – as their crimes against kids will now be even more protected from detection.

“Meta must immediately reverse its implementation of E2EE on Facebook and Messenger, find real solutions to ensure the protection of children, and meaningfully address ways the company can combat child sexual abuse.”

For NCOSE’s entire statement, see our press release.

Thorn

“…Today’s announcement by Meta that it will move Messenger for Facebook to full end-to-end encryption does not adequately balance privacy and safety. We anticipate this change will result in a massive amount of abuse material going undetected and unreported—essentially turning Messenger into a haven for the circulation of child sexual abuse material.

We strongly encourage Meta to rethink its encryption strategy and to implement stronger child safety measures that adequately address known risks to children before proceeding.

Decisions like Meta’s Messenger end-to-end encrypting have a direct impact on our collective ability to defend children from sexual abuse. Thorn is committed to developing and sharing technologies and strategies that protect children across all digital environments. We invite our partners and the broader tech community to join this crucial conversation, fostering a future where privacy and safety can coexist harmoniously.”

John Carr

“Late yesterday UK time, Meta took a massive backwards step. A strong policy which protected children is being abandoned. Meta is doing this in the name of privacy. However, their new approach tramples on the right to privacy of some extremely vulnerable people – victims of child sexual abuse – and ignores the victims’ right to human dignity while also introducing new levels of danger for a great many other children. The company will face a hail of fiery and well-deserved criticism, not least because there were alternatives which would have allowed them to achieve their stated privacy objectives without putting or leaving any children in harm’s way…

In 2018, using clever tools such as PhotoDNA, later added to by programmes they developed in-house, Facebook established some new systems. These allowed the company to find, delete and report to the authorities, images of children being sexually abused. These were all illegal still pictures or videos which someone had published or distributed, or was trying to, either via Facebook’s main platform or via their two main messaging apps, Messenger and Instagram Direct…

…Through efforts of the kind described Meta established itself as an acknowledged leader in online child protection. However, on 6th December 2023, Meta started to introduce end-to-end encryption (E2EE) to Messenger, and it has made clear it intends to follow suit with Instagram Direct. Thereby, it is wilfully blinding itself to all the possibilities that were there before. It is a truly shocking decision which I for one never believed they would actually implement. I thought they would back off. More fool me…”

For more information about E2EE, see our non-exhaustive list of statements by global child safety experts, and see here for John Carr’s full blog post, “Meta says money matters more than children.”

Proliferation of AI-Facilitated Sexual Abuse

Despite Meta’s promise of fair and responsible AI systems, Meta AI is riddled with abuse and exploitation. In December 2023, Meta released a free standalone AI image-generator website, “Imagine with Meta AI,” whose model Meta trained on 1.1 billion publicly visible Facebook and Instagram images. Given Meta’s historical negligence in moderating its platforms, particularly in cases of child sexual abuse material (CSAM), image-based sexual abuse (IBSA), and sexual extortion, it is deeply concerning that its image generator may have been trained on abusive content Meta has been reluctant to remove.

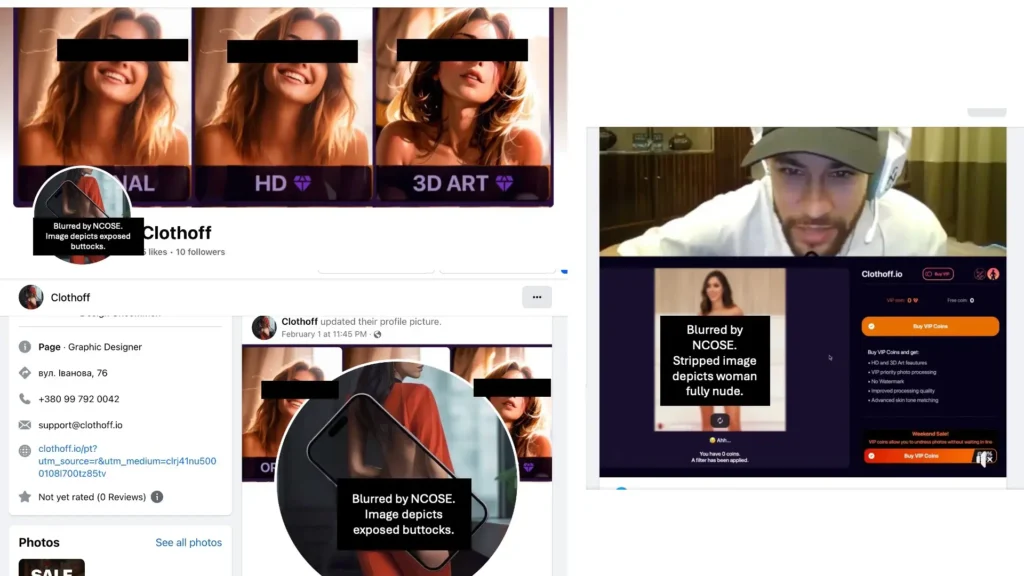

Recent news articles have also documented Meta’s reluctance to moderate its platform for cases of image-based sexual abuse and synthetic sexually explicit media (SSEM). For example, a 2023 NBC article found that Facebook ran hundreds of deepfake pornography ads depicting well-known female celebrities engaging in sexual acts, despite its policies against this content.

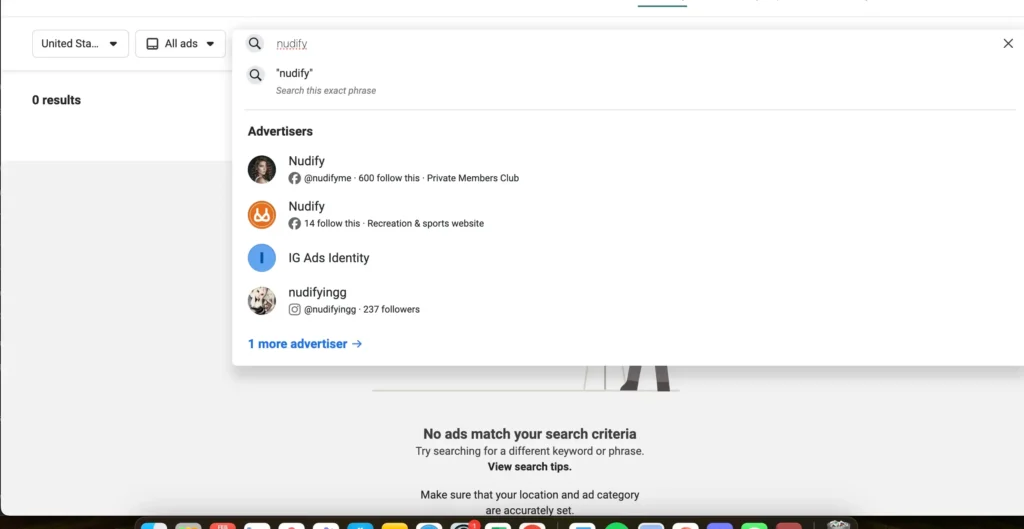

NCOSE researchers easily found ads, profiles, and groups promoting “nudifying” bots and deepfake pornography on Meta platforms and even on Meta’s ad library.

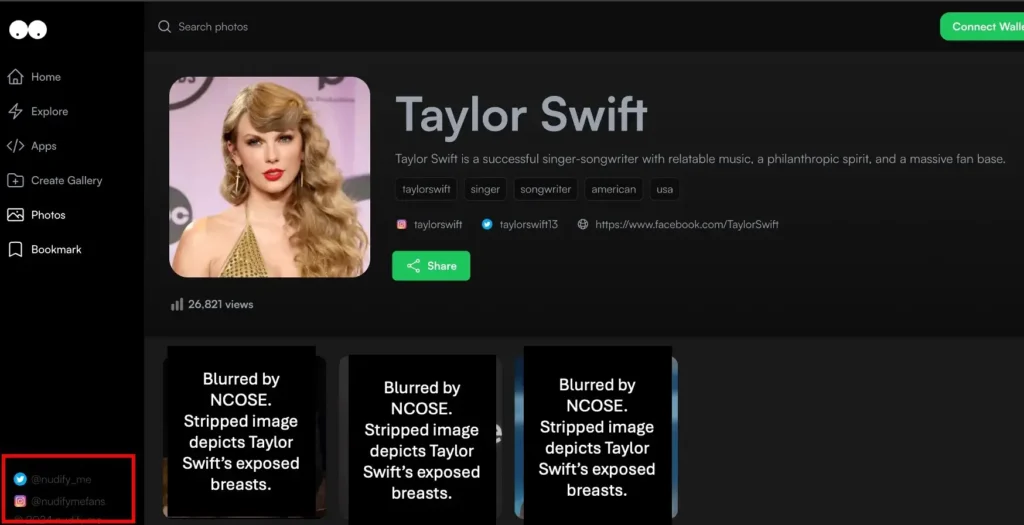

One of the profiles found on Facebook for “Nudify” took NCOSE researchers to a site dedicated to the creation, dissemination, and monetization of deepfake pornography of popular female celebrities.

Even more concerning is the rapid permeation of sexually exploitative technology on mainstream platforms. The same Facebook profile that directed NCOSE researchers to “Nudify.me” – a website dedicated to the creation, dissemination, and monetization of “nudifying” imagery – also advertised itself as a “private and extravagantly luxurious lifestyle Club in the Metaverse.”

Some profiles even had videos demonstrating how to “nudify” women with the bot.

In late 2023, Meta’s open-source tool Llama 2 was used to create sex bots, some of which were made to portray children. Meta could have prevented this with moderation and supervision of its AI technology, but chose not to. This is concerning considering that Meta plans to release Llama 3, a “looser” version of Llama 2, in July 2024. Given Meta’s poor track record in moderation, these plans practically guarantee an influx of cases of sexual abuse and exploitation when bad actors abuse Meta’s open-source AI.

Insufficient Reporting Features

As Meta begins to fully adopt E2EE across all its platforms, simple, easy, and accessible reporting features are more important than ever. Unfortunately, Meta makes reporting content on its platforms difficult and burdensome. Although Meta’s policies explicitly prohibit various harmful behaviors and content, its reporting features fail to reflect the depth of these policies. None of Meta’s platforms has a clearly visible reporting category for CSAM; instead, the option to report this content is hidden behind other, not necessarily intuitive, reporting categories. For example, on Instagram, a user must first state that they are reporting “nudity,” and only then will they see an option to note that the nudity “involves a child.” It is imperative that the mechanism to report CSAM be as intuitive and obvious to users as possible, especially given the sheer volume of CSAM on Meta’s platforms: Meta’s platforms accounted for over 85% of total reports made to the National Center for Missing and Exploited Children in 2022.

Further, in February 2024, The Guardian revealed that automated CSAM reports sent to the CyberTipline of the National Center for Missing and Exploited Children (NCMEC) that have not been reviewed by human moderators cannot be opened by NCMEC or law enforcement without a search warrant. Meta often boasts of its robust detection technology but provides little assurance that these reports are verified by human moderators before being sent to NCMEC. This backlogs investigations for law enforcement and abandons children who may be in physical danger, since NCMEC and law enforcement can do nothing with these reports without a search warrant.

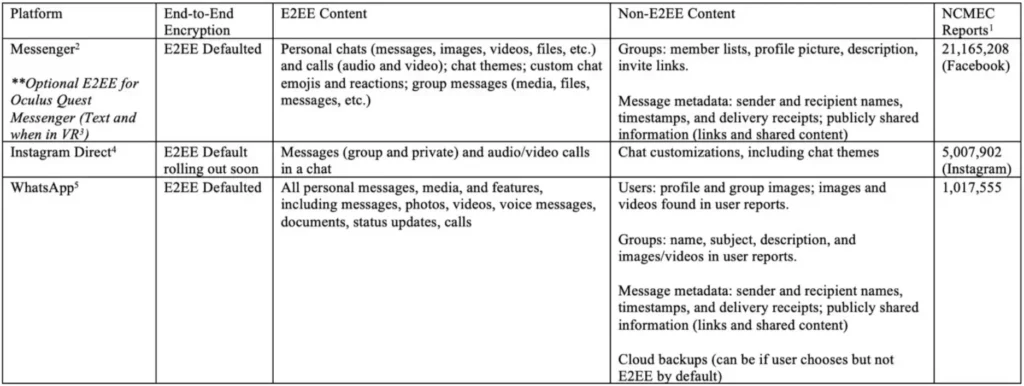

Current State of E2EE Across Meta Platforms

- Messenger: E2EE Defaulted (new features here).

- Instagram Direct: E2EE Default rolling out soon.

- Oculus Quest Messenger (Text and when in VR): E2EE Optional

- WhatsApp: E2EE Defaulted.

CSAM Reporting Features by Meta Platform

E2EE Content Versus Non-E2EE Content by Meta Platform

Recommended Reading

Stay up-to-date with the latest news and additional resources

Financial sextortion most often targets teen boys via Instagram, according to new data

Instagram Recommends Sexual Videos to Accounts for 13-Year-Olds, Tests Show

Trends in Financial Sextortion: An investigation of sextortion reports in NCMEC CyberTipline data

The Influencer Is a Young Teenage Girl. The Audience Is 92% Adult Men.

Meta Staff Found Instagram Tool Enabled Child Exploitation. The Company Pressed Ahead Anyway.


Resources

- Resource for teens to remove their sexually explicit content from Instagram: TakeItDown.NCMEC.org

- Resource for adults to remove image-based sexual abuse from Instagram: StopNCII.org

- Collective Shout: We reported 100 pieces of child exploitation content to Instagram – They removed just three

- Protect Young Eyes: Instagram Adds New Parental Controls. We’re Underwhelmed.

- The Hill (article by Lina Nealon) Congress must force Big Tech to protect children from online predators