A Mainstream Contributor To Sexual Exploitation

Reddit is riddled with sexploitation

Child sex abuse material, sex trafficking, and image-based sexual abuse hide in plain sight among endless pornography subreddits allowed on this platform…content which will be further monetized now that Reddit is public

Reddit, a mainstream discussion platform hosting over two million user-created “communities,” is a hub of image-based sexual abuse, hardcore pornography, prostitution (which very likely includes sex trafficking), and overt cases of child sexual exploitation and child sexual abuse material (CSAM).

Reddit is known as one of the most popular discussion platforms on the Internet right now, with about 73 million active users each day. In 2023, Reddit averaged 1.2 million posts and 7.5 million comments daily. And in just six months, from January to June 2023, Reddit users exchanged 472,984,011 personal messages.

One can easily find countless subreddits for just about anything one can think of—including entire communities devoted to the sharing of image-based sexual abuse, hardcore pornography, prostitution, and overt cases of child sexual exploitation. Reddit is the ‘front page’ of sexual exploitation, providing a platform for the most egregious forms of sexual exploitation and abuse, where sexual exploiters, predators, traffickers, and deepfakers alike find community and common ground.

Reddit’s policies, including new policies on image-based sexual abuse (sometimes referred to as “revenge porn”) and child safety, look good on paper but have not translated into the proactive prevention and removal of these abuses in practice.

The following reports from researchers and online safety organizations demonstrate the wide-ranging sexual exploitation occurring across Reddit:

- The number of links advertising “undressing apps” increased more than 2,400% across social media platforms like Reddit and X in 2023 (Graphika, 2024).

- Reddit was the #3 app flagged for severe sexual content (Bark, 2023).

- Reddit is one of the top eight social media platforms that child sex abuse offenders used to search, view, and share CSAM, or to establish contact with a child (Suojellaan Lapsia, Protect Children, February 2024).

- Percentage of minors with self-generated CSAM (SG-CSAM) experiences that use Reddit at least once per day: 34% of minors have shared their own SG-CSAM, 26% have reshared SG-CSAM of others, and 23% have been shown nonconsensually reshared SG-CSAM (Thorn, November 2023).

- Reddit is one of the top ten online platforms on which teens report having seen pornography (UK Children’s Commissioner, 2023).

- Australian eSafety Commissioner research identified Reddit as one of the most popular social media sites on which young people report seeing pornography (Canberra, Australia: Australian Government, 2023).

Despite Reddit’s numerous public attempts to clean up its image, including efforts to blur explicit imagery and the introduction of new policies to increase public safety, real changes to the platform have been few and far between. And with Reddit going public on March 21, 2024, all these abuses are now being monetized even further.

Our Requests for Improvement

- Implement robust age and consent verification measures – ban pornography and sexually explicit content until such measures are actively enforced to prevent image-based sexual abuse and child sexual abuse content on Reddit.

- Require mandatory email verification for current and future users, as anonymity allows for abuse without accountability.

- Make Reddit 17+ and prevent minors from accessing your platform – both Apple App Store and Google Play age ratings for Reddit are 17+ and M for “Mature,” respectively. Given that pornography is so easily accessible and other harms are rampant, children should not be on Reddit; asking Reddit for parental controls or other safety measures would be like asking Pornhub to make itself kid-friendly.

- Ban all forms of AI-generated pornography on Reddit – Reddit has no way of verifying the age or consent of the people in images used in training sets or of the individuals depicted in generated images. Reddit’s June 2023 clarification, which bans only AI-generated pornography of identifiable adults, is another example of a purposefully ambiguous policy that leaves ample space for loopholes and exploitation.

- Ban external links to pornography and sexually explicit media – the links NCOSE researchers found frequently led to sexually explicit content on pornography sites and social media platforms that contained indicators of IBSA and CSAM.

An Ecosystem of Exploitation: Artificial Intelligence and Sexual Exploitation

This is a composite story, based on common survivor experiences. Real names and certain details have been changed to protect individual identities.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Image-based Sexual Abuse

Following Reddit’s placement on the 2021, 2022, and 2023 Dirty Dozen lists, Reddit was finally inspired to make changes to their policies governing image-based sexual abuse. It “only” took them three years! Yet, NCOSE’s research found that these policy changes have been largely ineffective and have failed to bring about any positive change to protect users.

While NCOSE acknowledges Reddit’s improved blurring mechanisms for sexually explicit content, blurring non-consensual sexually explicit imagery does not make the content any less non-consensual. The feature is also easily bypassed: when users click a blurred post, they are met with an unblurred image.

The subreddit r/PornID (dedicated to identifying women in sexually explicit imagery and videos) has an astonishing number of subscribers, growing from 1.3 million in 2023 to 1.7 million in 2024. Blurring the thumbnails does not negate the fact that this subreddit, like dozens of others NCOSE researchers found, is dedicated to sharing non-consensual content and identifying the women in it, potentially resulting in other forms of abuse such as doxing.

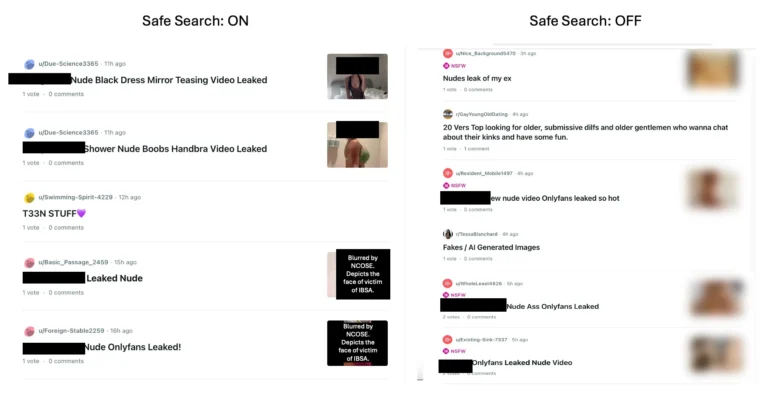

Searches with Reddit’s “Safe Search” Content Filter ON still return posts containing non-consensual content or links to such content. Further, when this filter is ON, sexually explicit content is openly shown, as the blurring filter only functions when Safe Search is OFF. Considering Reddit’s historical negligence in moderating sexually explicit content, it is deeply concerning that this content is still slipping through Reddit’s ‘improved’ filters.

Despite Reddit’s June 2023 update to Rule 3, a policy literally titled “Never Post Intimate or Sexually Explicit Media of Someone Without Their Consent,” NCOSE researchers have found that Reddit is still littered with non-consensual content, including AI-generated content. For example, December 2023 research found a 2,400% surge in ads for “undressing apps,” despite Reddit’s policies against non-consensual content and sexually explicit ads, underscoring the platform’s inadequacies in curbing image-based sexual abuse.

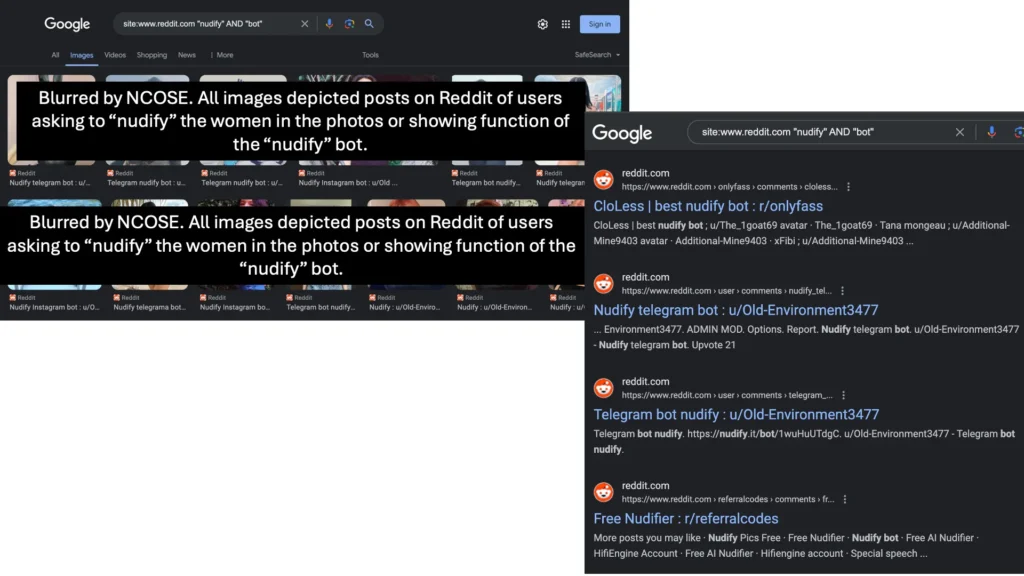

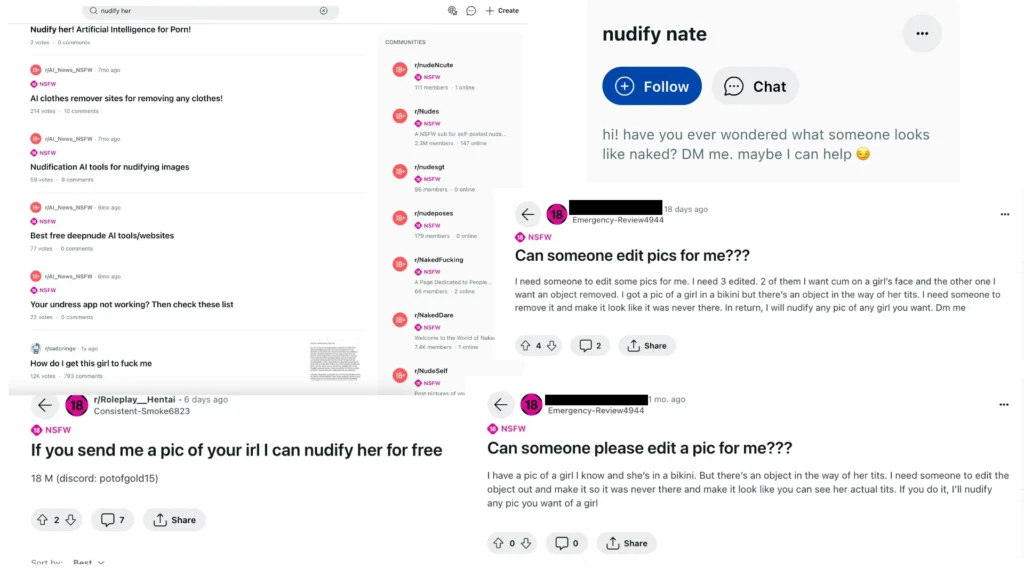

Reddit still fails to properly moderate content depicting, soliciting, or advertising IBSA. In fact, many posts on Reddit offer paid “services” to create deepfake pornography of specific individuals, based on innocuous images that the “client” submits.

Reddit’s increasing number of communities dedicated to the creation and distribution of AI-generated pornography is alarming. A dedicated AI-generated pornography community NCOSE reported on in 2023 nearly tripled its subscriber count in a year, from 48,000 to 128,000 members. This exploitative tool amassed over 15 million “donated” sexually explicit and pornographic images to train its technology, and posts AI-generated pornography by the hour on Reddit. During its soft launch in July 2023, Unstable Diffusion’s CEO and co-founder stated the model was generating over 500,000 images a day. A 2023 Forbes article also highlighted the absolute explosion of AI-generated pornography on Reddit, indicating that the platform’s ambiguous policies have allowed it to flourish.

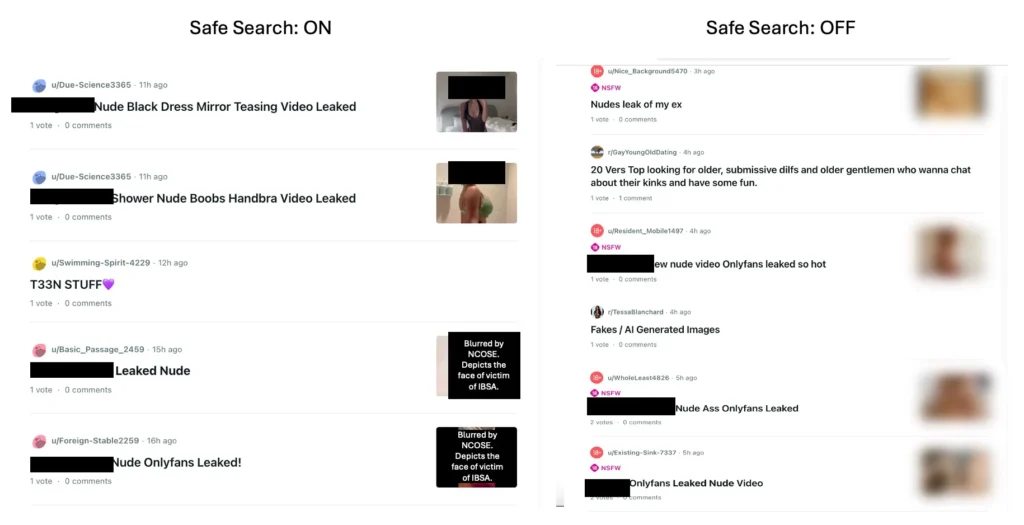

Reddit’s recent partnership with Google, disclosed in the March 2024 S-1 filing with the SEC, adds another layer of concern. This deal, which is expected to generate $66.4 million in revenue by the end of 2024, allows Google to train AI models using Reddit’s content. This arrangement is particularly alarming, given Reddit’s historical challenges with proactively identifying and eliminating sexually explicit content, including abusive and illegal imagery. Despite the new May 2023 policy banning third-party and API access to sexually explicit content, the potential for Google’s AI models to be trained on the pervasive amount of sexually explicit, nonconsensual, and potentially illegal material on Reddit is exceptionally high.

Reddit’s lack of effective content blurring measures, the absence of age or consent verification mechanisms, and its historical failure to proactively identify sexually explicit content and remove abusive and illegal imagery from its platform present blatant opportunities for abuse and raise profound safety concerns.

Consequently, if Reddit continues to show the same negligence towards content moderation and the same permissive attitude toward questionably prohibited content on its platform, there is a grave risk of Google’s AI being trained on and generating sexually exploitative content, resulting in a proliferation of sexual abuse and exploitation.

Reddit remains an extremely risky investment and an overall dangerous platform.

Child Sexual Abuse Material (CSAM)

Because Reddit refuses to implement meaningful age or consent verification for the sexually explicit content that is constantly being shared on its platform, Reddit is a hub not only for IBSA, but for child sexual abuse material (CSAM) as well.

In June 2023, Reddit announced positive changes to its child safety policy, including banning child sexualization and suggestive images of children. This policy update also included prohibiting comments, pictures, cartoon images, and poses that sexualize minors; prohibiting predatory or inappropriate behaviors, such as “sexual role-play where an adult might assume the role of a minor”; and banning the use of Reddit to facilitate inappropriate interactions with minors on other platforms.

While NCOSE applauded this policy change, we have yet to see meaningful enforcement. Recent research underscores the persistent availability of CSAM, content that sexualizes minors, and AI-generated sexually explicit and suggestive content of minors on Reddit. For example, research conducted by the Stanford Internet Observatory in December 2023 found that child sexual abuse material (CSAM) originating on Reddit had been used to train AI models such as Stable Diffusion. Further, the Australian eSafety Commissioner, one of the leading authorities on online child safety in the world, issued a legal notice to Reddit (along with five other platforms) requesting detailed information about Reddit’s practices to detect and remove CSAM from the platform, which suggests additional concern.

Unfortunately, NCOSE has yet to see substantial evidence of comprehensive enforcement since Reddit updated their child safety policy:

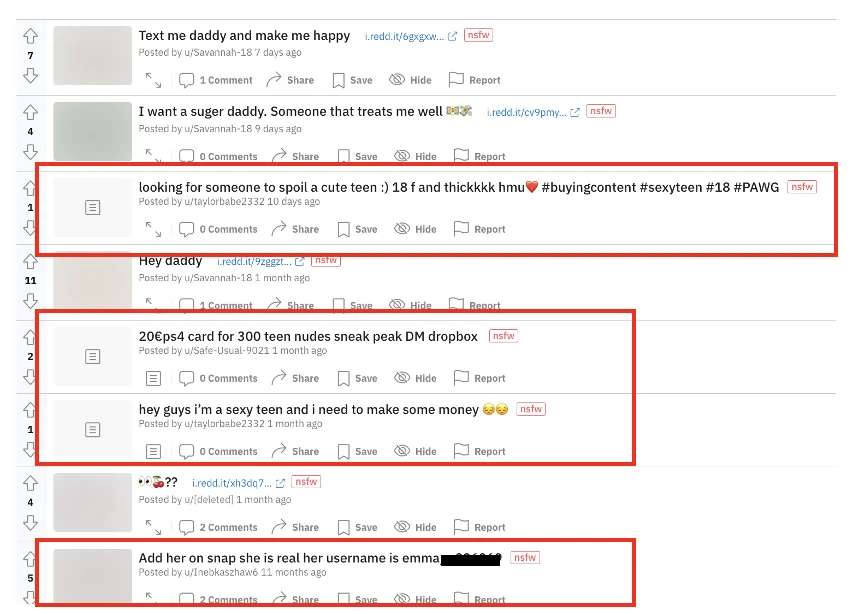

- With Safe Search ON, NCOSE researchers found that searches for “mega teen leaks” and “mega links” returned over 100 results from the last 60 days, most openly soliciting or searching for links with high indications of child sexual abuse material (CSAM) (NCOSE, April 2024).

- Content attached to posts from the above search results and contained imagery suggesting child sexual abuse between adults and minors. One post titled, “MEGA links telegram::@cum_file5,” contained an image with the text, “Boys for Sale,” depicting a fully clothed adult man covering the mouth and grabbing the genital region of an underage boy, who was wearing only his underwear and had visible red marks of abuse across his chest (NCOSE, April 2024).

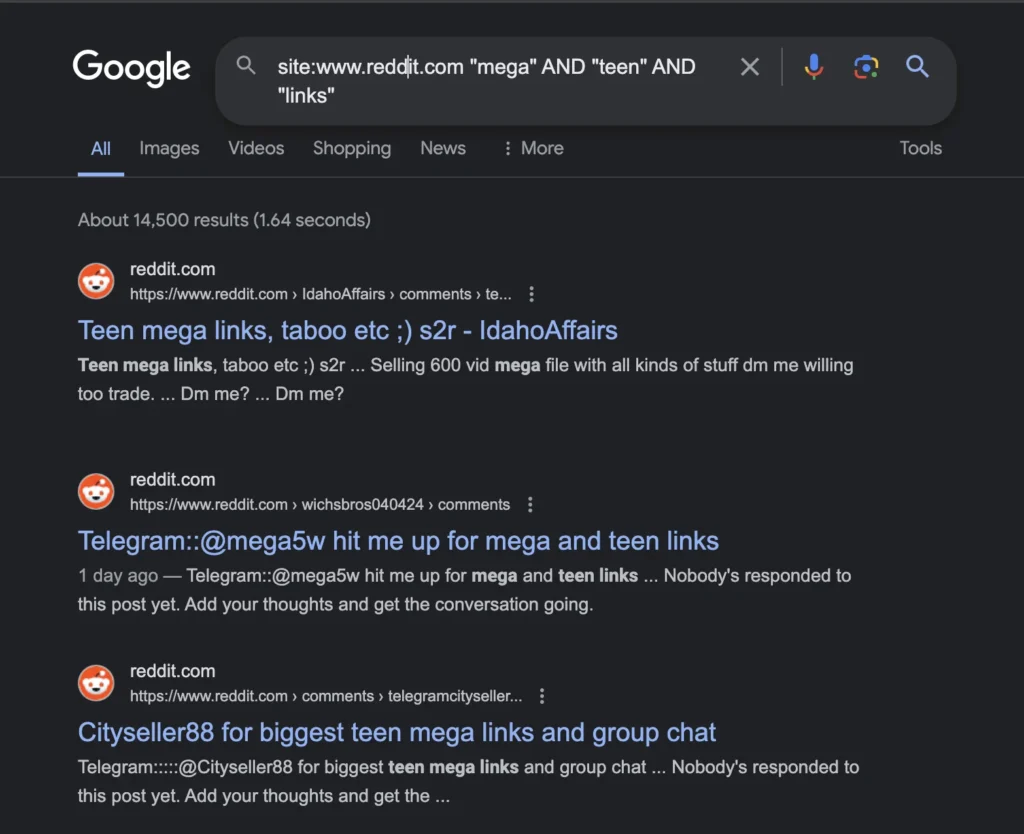

- NCOSE researchers conducted a Google search for “site:www.reddit.com “mega” AND “teen”” and received 14,500 results of content on Reddit containing high indications of child sexual abuse imagery sharing, trading, and selling (NCOSE, April 2024).

- NCOSE researchers analyzed the top 100 pornography subreddits (what is tagged on Reddit as “NSFW”) and found numerous examples of child sexualization and/or possible indicators of child sexual abuse material—including titles like “xsmallgirls” and references to flat-chestedness (NCOSE, July 2023).

Search results conducted on April 5, 2024, for “site:www.reddit.com “mega” AND “teen”” returned 14,500 results of extremely concerning content.

Not only has CSAM still been found on the platform, but subreddits sexualizing minors are pervasive across Reddit. Even with the Sensitive Content filter ON to block sexually explicit results (not to mention their policies banning sexualization of minors), NCOSE researchers were exposed to links and images sexualizing minors labeled “teen nudes” – one of which was a link to a minor no more than ten years old.

While the minor is fully clothed in the thumbnail, why is this image – accompanied by a website clearly featuring explicit content – featured with the filters ON? More importantly, why is this content allowed on Reddit?

NCOSE researchers also found dozens of subreddits and posts containing similarly sexualizing language and imagery, some posts even containing content with high indications of CSAM.

CSAM is still illegal, even if Reddit has taken steps to blur it. Reddit KNOWS about the pervasiveness of exploitative and illegal content on their platform; blurring it is simply slapping a bandaid on this festering wound for legislators and investors.

Evidence of CSAM and Sexual Exploitation of Minors on Reddit

In November 2023, a Thorn report, Youth Perspectives on Online Safety, revealed the annual percentage of minors with self-generated CSAM (SG-CSAM) experiences that use Reddit at least once per day: 34% of minors had shared their own SG-CSAM, 26% had reshared SG-CSAM of others, and 23% had been shown nonconsensually reshared SG-CSAM. While it is unclear whether the minors in the study willingly chose to share that CSAM or were coerced into creating and sharing these images of themselves, it is clear that minors are not shielded from CSAM that exists on Reddit, despite Reddit’s attempted policy changes. Without age and consent verification for Reddit users, this problem will only continue to grow.

News reports, such as those documented below, are examples of Reddit’s problematic “hands off” approach when it comes to regulating non-consensual sexually explicit content, allowing child sexual abuse material (CSAM) to exist on the platform.

The following cases are just a few reports from the past year:

- On February 2, 2024, an Ashland, KY man was charged with the sharing and distribution of nude images of young boys aged 10-14. He had distributed them to as many as 20 others on Reddit.

- On January 25, 2024, a 37-year-old Dover, NJ man was arrested for possessing CSAM and uploading it onto Reddit. The initial report came from a CyberTip submitted to NCMEC’s CyberTipline.

- In November 2023, a former associate pastor of a Baptist church and chaplain at the Oklahoma State Penitentiary was arrested for engaging in sexually explicit chats with minors on Reddit and possessing CSAM.

- On August 30, 2023, an Albert Lea, Minnesota man pleaded guilty to four counts of possession of CSAM after admitting to buying CSAM through Reddit. He was arrested based on a CyberTip from NCMEC.

- In August 2023, a Glendale, Wisconsin police officer was fired and arrested for posting CSAM on Reddit. He had at least six images in his possession at the time of the arrest.

Until Reddit can implement robust age and consent verification processes for sexually explicit content, and create clarity and consistency for policy implementation, pornography must be prohibited and deleted to stem the CSAM currently rampant in Reddit’s discussion forums.

Severe Sexual Content, Sexual Violence, and Sexualization of Minors on and through Reddit

Reddit refuses to implement effective content policies and moderation practices for harmful content. Severe sexual content, sexual violence, and the sexualization of minors STILL exist on Reddit despite being banned in June 2023. Our partners are clear: Reddit is one of the most popular apps where this content still appears, despite the so-called ‘policy changes.’

Evidence of Severe Sexual Content, Sexual Violence, and Sexualization of Minors on Reddit:

- Reddit was the #3 app flagged for severe sexual content (Bark, 2023).

- Reddit is among the top 10 social media sites through which children have accessed pornography (UK Children’s Commissioner, May 2023).

- Australian eSafety Commissioner research identified Reddit as one of the most popular social media sites on which young people report seeing pornography (Canberra, Australia: Australian Government, 2023).

Subreddits sexualizing minors are pervasive. Even with the Sensitive Content filter ON to block sexually explicit results (not to mention their policies banning sexualization of minors), NCOSE researchers were exposed to links and images sexualizing minors labeled “teen nudes” — one of which was a link to a minor no more than ten years old.

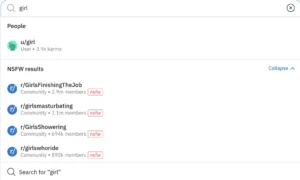

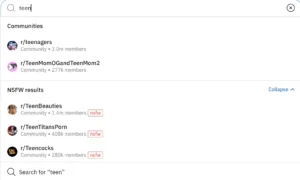

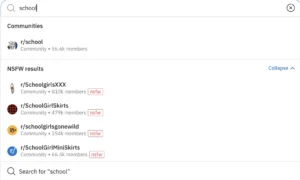

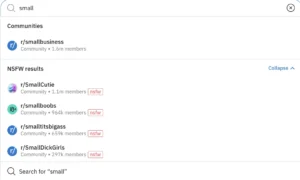

When NCOSE researchers typed in seemingly innocuous search terms ‘girl,’ ‘teen,’ ‘school,’ ‘small,’ and ‘petite,’ subreddits with titles devoted to the sexualization of minors were suggested by Reddit’s search algorithms. See our search results below:

In addition to this, NCOSE researchers have found artificial / illustrated pornography depicting bestiality and even illustrated bestiality involving child-like figures.

In 2023, NCOSE exposed a subreddit dedicated to AI-generated bestiality imagery, some of which depicted child-like figures. While the original subreddit we reported was removed, the owner EASILY made a new account and subreddit with the same title – and even admitted to doing so (proof available upon request). The new subreddit is still active today (April 5, 2024), and contains imagery depicting bestiality involving child-like figures and posts of users sharing their experiences of actual bestiality. It is horrendous that Reddit allows this clearly nefarious and likely illegal content to remain.

Ecosystem of Exploitation

Absence of industry-standard protections and practices and anemic policy implementation on Reddit create an ecosystem of exploitation.

Lack of accountability.

Reddit is substantially behind current safety and moderation practices, failing to implement even the most basic safety practices, such as requiring a verified email address – something that is now an industry-wide standard. As a result, ban evasions, throw-away accounts, and escaping accountability for posting harmful and illicit content are commonplace on Reddit. Instagram, Facebook, and TikTok all require users to provide a valid email address to create a profile on their app and limit the ability to search content without creating an account – basic features that Reddit fails to require.

Lack of either protection or tools for moderators.

One study estimated that moderators do a total of $3.4 million worth of unpaid moderation for Reddit. Female moderators have also reported experiencing abuse once community members are aware of their gender, contributing yet again to Reddit’s problems with misogyny and sexism. Moderators have experienced abuse at the hands of throw-away accounts for attempting to make their communities safe and enjoyable environments – something Reddit admins fail to achieve.

Lack of reporting mechanisms.

Reddit also fails to provide effective and easy reporting mechanisms for users and moderators on their platform. Even when one user reported the sale of suspected CSAM, Reddit refused to act.

This is especially concerning, given that moderators do not have the tools or ability to remove content from Reddit permanently. Moderators can “remove” content and ban users from subreddits, but the content continues to exist on the platform marked as [removed] and remains visible until a Reddit Admin manually deletes it.

A 2022 article exposed how Reddit’s refusal to allow moderators to permanently delete content has played out in the subreddit r/TikTokThots, a community of 1.3 million users: a collection of posts that moderators removed for containing potential CSAM remains searchable on Reddit today.

Failure to implement existing policies.

As is clear from the compiled evidence, Reddit fails to implement its own policies – with newly announced policies easily proven ineffective.

In addition to the recently updated policy on image-based sexual abuse, which failed NCOSE researcher testing, Reddit announced automatic tagging of sexually explicit images in March 2022. Thankfully, in 2023, NCOSE researchers witnessed a significant improvement in the automatic tagging and blurring of sexually explicit images and videos. However, because Reddit continues to allow communities and posts dedicated to non-consensual imagery, blurring these images while clearly allowing sexually explicit content does not adequately protect victims of image-based sexual abuse and AI-generated pornography.

Fast Facts

34% of minors who use Reddit at least once a day have shared their own self-generated CSAM (SG-CSAM), 26% have reshared SG-CSAM of others, 23% have been shown nonconsensually reshared SG-CSAM (Thorn 2023)

44% of minors 9-17 have used Reddit

The number of links advertising “undressing apps” increased more than 2,400% across social media platforms like Reddit and X in 2023