A Mainstream Contributor To Sexual Exploitation

Microsoft’s GitHub is the global hub for creating sexually exploitative AI tech

The vast majority of deepfakes, “nudify” apps, and AI-generated child sex abuse content originate on this platform owned by the world’s richest company. #deepfakehub

Updated 10/11/24: On September 12, 2024, the White House announced new private sector voluntary commitments to combat image-based sexual abuse (IBSA), which included a public commitment from Microsoft’s GitHub prohibiting projects that are “designed for, encourage, promote, support, or suggest in any way” the creation of IBSA. This policy was quietly implemented back in May – a month after the Dirty Dozen List reveal. As a result, several repositories hosting IBSA code have since been removed from GitHub, including DeepFaceLab, which hosted the code used to create 95% of deepfakes and sent users directly to the most prolific sexual deepfake website, MrDeepfakes.

Imagine: your daughter’s high school principal calls to tell you there are nude images of her all over the Internet.

She swears it’s not her…but it’s her face. And it looks so incredibly, terrifyingly real.

Finally, it’s revealed that some jilted male classmates took images of her and other girls in her class to make deepfake pornography – and it was likely created on GitHub.

This very trauma is happening to more and more girls and women due to Microsoft-owned GitHub.

So what is GitHub?

Imagine a social network for programmers similar to Google Docs, where people who write code for software projects can come together, share their work, collaborate on projects, and learn from each other. GitHub is the world’s leading developer platform and code hosting community with over 100 million developers worldwide. It’s popular amongst developers because of its open-source design, allowing anyone to access, use, change, and share software. Some of the biggest names in the tech industry (Google, Amazon, Twitter, Meta, and Microsoft) use GitHub for various initiatives.

GitHub, the world’s leading AI-powered developer platform, is also arguably the most prolific space for Artificial Intelligence development.

Unfortunately, GitHub is also a significant contributor to the creation of sexually exploitative technology and a major facilitator of the growing crimes of image-based sexual abuse (the capture, creation, and/or sharing of sexually explicit images without the subject’s knowledge or consent) as well as AI-generated CSAM: child sexual abuse material created using artificial intelligence to appear indistinguishable from a real child.

- GitHub is a hotbed of sexual deepfake repositories (where the codes are) and forums (where people chat) dedicated to the creation and commodification of synthetic media technologies, as well as ‘nudify’ apps that take women’s images and “strip them” of clothing, such as DeepNude. Currently, nudifying technology only works on images of women.

- GitHub hosts codes and datasets used to create AI-generated CSAM, which experienced an unprecedented explosion of growth in 2023, evolving from a theoretical threat to a very real and imminent threat across the globe. In December 2023, the Stanford Internet Observatory discovered over 3,200 images of suspected CSAM in LAION-5B, the training dataset behind the popular generative AI platform Stable Diffusion. This dataset was available on GitHub.

Open-sourced repositories dedicated to sexual abuse and exploitation thrive on GitHub, allowing users to replicate, favorite (“star”), and collaborate on sexually exploitative technology without repercussions. Yet GitHub remains focused on putting the needs of developers at the core of its content moderation policies, failing to acknowledge how a lack of moderation impacts countless victims of sexual abuse and exploitation. Acknowledging that impact means removing and blocking tools used for image-based sexual abuse and AI-generated CSAM on the platform.

The innovation and creative solutions offered by artificial intelligence are to be celebrated. However, technological advances must not supersede or come at the cost of people’s safety and well-being. Technology should be built and developed to fight, rather than facilitate, sexual exploitation and abuse.

By providing a space to collaborate in amplifying abuse, GitHub is not only condoning criminal conduct – it is directly contributing to it.

Microsoft’s GitHub has the potential to drastically reduce the number of adults and minors victimized through image-based abuse and AI-generated CSAM, and could lead the tech industry in ethical approaches to AI.

While GitHub emphasizes that removing one repository can impact developers, they overlook the significant positive impact it can have on victims of abuse by making the platform safer and reducing harmful content.

Review the proof we’ve collected, read our recommendations for improvement, and see our notification letter to GitHub for more details.

*Please also see our Discord and Reddit pages for examples of the type of deepfake pornography, other forms of image-based sexual abuse, and AI-generated CSAM that originate on GitHub and proliferate across platforms.

Our Requests for Improvement

- Ban private and public repositories dedicated to the creation of deepfake pornography, ‘nudifying,’ and AI-generated pornography, as well as tools for scraping pornography and sexually explicit imagery, from GitHub. This includes removing current repositories and preventing the replication or creation of similar repositories for sexually exploitative technology and for software that scrapes pornography and sexually explicit imagery

- Require all open-sourced repositories for image generation hosted on your site to join the C2PA and adhere to their guiding principles

- Proactively moderate content and community forums on GitHub for sexually explicit imagery – including those created with AI technology, non-consensual imagery, AI-generated sexually explicit imagery, external links and discussions around using AI technology to facilitate sexual abuse and exploitation, and discussions that promote the normalization of these behaviors

- Default minor-aged accounts, including middle and high-school student repositories, to ‘private’ to prevent minors’ exposure to the abundance of harmful content on GitHub

- Implement basic safety standards: parental controls, teacher/administrative controls, and age-gate repositories inappropriate for minor-aged users

An Ecosystem of Exploitation: Artificial Intelligence and Sexual Exploitation

This is a composite story, based on common survivor experiences. Real names and certain details have been changed to protect individual identities.

I once heard it said, “Trust is given, not earned. Distrust is earned.”

I don’t know if that saying is true or not. But the fact is, it doesn’t matter. Not for me, anyway. Because whether or not trust is given or earned, it’s no longer possible for me to trust anyone ever again.

At first, I told myself it was just a few anomalous creeps. An anomalous creep designed a “nudifying” technology that could strip the clothing off of pictures of women. An anomalous creep used this technology to make sexually explicit images of me, without my consent. Anomalous creeps shared, posted, downloaded, commented on the images… The people who doxxed me, who sent me messages harassing and extorting me, who told me I was a worthless sl*t who should kill herself—they were all just anomalous creeps.

Most people aren’t like that, I told myself. Most people would never participate in the sexual violation of a woman. Most people are good.

But I was wrong.

Because the people who had a hand in my abuse weren’t just anomalous creeps hiding out in the dark web. They were businessmen. Businesswomen. People at the front and center of respectable society.

In short: they were the executives of powerful, mainstream corporations. A lot of mainstream corporations.

The “nudifying” bot that was used to create naked images of me? It was made by codes hosted on GitHub—a platform owned by Microsoft, the world’s richest company. Ads and profiles for the nudifying bot were on Facebook, Instagram, Reddit, Discord, Telegram, and LinkedIn. LinkedIn went so far as to host lists ranking different nudifying bots against each other. The bot was embedded as an independent website using services from Cloudflare, and on platforms like Telegram and Discord. And all these apps which facilitated my abuse are hosted on Apple’s app store.

When the people who hold the most power in our society actively participated in and enabled my sexual violation … is it any wonder I can’t feel safe anywhere?

Make no mistake. My distrust has been earned. Decisively, irreparably earned.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

“Deepfake pornography” and other Image-based Sexual Abuse (IBSA) is facilitated on and through GitHub

GitHub significantly contributes to the creation of sexually exploitative technology and has been noted for facilitating the growing crime of image-based sexual abuse: the capture, creation, and/or sharing of sexually explicit images without the subject’s knowledge or consent. GitHub hosts guides, codes, and hyperlinks to sexual deepfake community forums dedicated to the creation, collaboration, and commodification of synthetic media technologies, and AI-leveraged ‘nudifying’ websites and applications that take women’s images and “strip them” of clothing.(1)

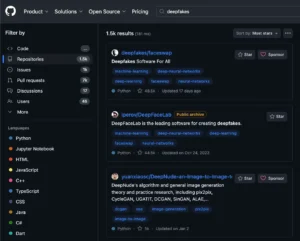

Open-sourced repositories dedicated to image-based sexual abuse (IBSA) thrive on GitHub, allowing users to replicate, favorite (star), and collaborate on sexually exploitative technology without repercussions. GitHub hosts three of the most notorious technologies used for synthetic sexually explicit material (SSEM) abuse: DeepFaceLab, DeepNude, and Unstable Diffusion.

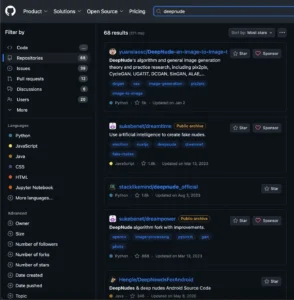

GitHub’s public statement against sexually exploitative source codes in 2019 cited codes such as DeepNude as violating their policies and noted they would be banned and removed from GitHub if similar codes or replications appeared. However, as of January 2024 similar codes remain active. Results for the search “deepnude” rose from 54 in May 2023 to 68 results of similar repositories in February 2024, an increase of about 26%, and the original source code for DeepNude is still active on GitHub’s site.

Because of GitHub’s failure to effectively remove the original DeepNude source code, ban replications, and moderate GitHub for DeepNude-like replicas, “copycat” nudifying apps and bots continue to proliferate. A November 2023 study by Graphika found the number of links advertising ‘undressing apps’ increased more than 2,400% from 2022 to 2023 on social media, including on X and Reddit.

Research has shown that synthetic sexually explicit material (SSEM), such as that produced by nudifying technology, is increasingly being used to target women in the general public. In 2019, Sensity uncovered a deepfake ecosystem with a DeepNude bot at the center, and according to self-reports from users of the bot, 70% of targets were “private individuals whose photos are either taken from social media or private material.”(2)

The DeepFaceLab repository on GitHub directly sends users to the Mr.DeepFakes website, a website dedicated to creating, requesting, and selling sexual deepfakes of celebrities and ‘other’ women. As the 2020 Motherboard (Tech by Vice) article “It Takes 2 Clicks to Get From ‘Deep Tom Cruise’ to Vile Deepfake Porn” pointed out, “[i]t is impossible to support the DeepFaceLab project without actively supporting non-consensual porn.”

Unfortunately, the network of deepfake pornography enthusiasts extends beyond Mr.DeepFakes, encompassing platforms like Reddit, Discord, and Telegram, as well as different languages such as Russian and French. The circulation of deepfake content for sexual exploitation is a widespread and growing problem. According to a December 2023 research study by Home Security Heroes, the availability of deepfake pornography online has witnessed a staggering increase of 550% between 2019 and 2023.

Given that one out of every three deepfake models is pornographic, and considering the fact that developer interest in deepfake technology is the most common reason men watch deepfake pornography (57%), followed by celebrity interest (48%), and fulfilling personalized sexual fantasies (33%), it is not surprising that the initial deepfake and nudification technologies were created by “interested programmers,” rather than experienced developers.

As of February 2024, the top three repositories tagged as “deepfakes” on GitHub are dedicated to the creation of synthetic sexually explicit material (SSEM), further incriminating GitHub in the facilitation of SSEM abuse.

Two things have remained the same with deepfakes since 2019: their purpose – pornography, and their target – women. Similar to Sensity AI’s findings in 2019 and 2021, the same study by Home Security Heroes found that 98% of all deepfake videos available online were pornography and 99% of all forged (deepfake) pornography features women.

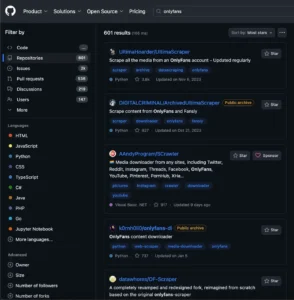

Pornography and sexually explicit imagery scrapers and “save” bots thrive on GitHub. These have been used to scrape and save images from pornography and social media websites. Considering the growing body of evidence, survivor testimony, and lawsuits against the most visited pornography sites (Pornhub, XVideos, XHamster) for hosting actual depictions of sex trafficking and child sex abuse material, rape, and various forms of image-based abuse (“revenge porn,” “upskirting/downblousing,” and “spycam shower footage”), GitHub’s allowance of these scrapers further contributes to the dissemination of criminal and/or nonconsensually captured content.

For example, search results for prostitution and pornography site “onlyfans” on GitHub doubled, increasing from 300 results in May 2023 to 601 results in February 2024, and include image scrapers for downloading content from OnlyFans. Some of these repositories offer users the option to “sponsor” or donate money to keep the abusive code operating. This further demonstrates GitHub’s pivotal role in contributing to IBSA and sexual exploitation by hosting such repositories.

- Ajder et al., “The State of Deepfakes: Landscape, Threats, and Impact,” September 2019; Henry Ajder, Giorgio Patrini, and Francesco Cavalli, “Automating Image Abuse: Deepfake Bots on Telegram,” Sensity, October 2020; Patrini, “The State of Deepfakes 2020: Update on Statistics and Trends,” March 2021; Volkert et al., “Understanding the Illicit Economy for Synthetic Media,” March 2020; “Hello World”; Hany Farid, “Creating, Using, Misusing, and Detecting Deep Fakes,” Journal of Online Trust and Safety 1, no. 4 (2022).

- Henry Ajder, Giorgio Patrini, and Francesco Cavalli, Automating Image Abuse: Deepfake bots on Telegram (Sensity, 2020), https://www.medianama.com/wp-content/uploads/SensityAutomatingImageAbuse.pdf

Child Sexual Abuse Material (CSAM) Facilitated by GitHub

This year alone, hundreds of thousands of images of AI-generated CSAM have been reported across the globe. NCMEC, IWF, President Biden and VP Harris, the FBI, attorneys general from 54 US states and territories, the EU, Thorn, NCOSE, and many others have highlighted AI-generated CSAM as an imminent and rapidly developing threat to children globally.1

GitHub’s reluctance to act on sexually exploitative technology for the last five years has had disturbing consequences: such technology is now used to exploit and abuse minors in unimaginable ways. According to the Ireland-based reporting hotline Hotline.ie, in 2022 it found 5,105 cases of classified CSAM containing computer-generated imagery (37% of all CSAM reports), compared to 1,329 cases (9%) in 2021. Over nine out of ten cases of AI-generated CSAM contained preteens, and two-thirds were found to “display adults subjecting children to penetrative sexual activity, and bestiality or sadistic elements.”

GitHub’s lack of effective content moderation practices allows sexually exploitative technology and IBSA to thrive. GitHub implements automated detection tools such as PhotoDNA to proactively scan for child sexual abuse material (CSAM), but it moderates only for CSAM itself and fails to implement comprehensive moderation practices, permitting technology that has been found to generate CSAM.

Even when GitHub does use PhotoDNA to identify CSAM and report it to the National Center for Missing & Exploited Children (NCMEC), these reports have been insufficient for NCMEC to take any action. In 2022, GitHub was one of 36 ESPs with more than 90% of reports lacking actionable information. This meant GitHub’s reports were so vague that NCMEC couldn’t figure out where the crime happened or which law enforcement agency should get the report.

Below is a list of cases about AI-generated CSAM in the last six months of 2023 alone:

- In August 2023, male middle school students nudified over 30 female peers as a ‘joke’ – Spain.

- In September 2023, attorneys general from 54 US states and territories signed a joint letter to Congress demanding immediate action on the topic of Artificial Intelligence and Child Sexual Exploitation.

- In November 2023, male high school students nudified over 30 female peers as a ‘joke’ – US.

- In October 2023, the Internet Watch Foundation released research finding that “most AI child sexual abuse imagery identified by IWF analysts is now realistic enough to be treated as real imagery under UK law*.”

- In November 2023, a Charlotte, NC child psychiatrist was sentenced to 40 years in prison for sexual exploitation of a minor and using artificial intelligence to create child sexual abuse material.

- In November 2023, a federal jury in Pittsburgh convicted a Pennsylvania man for possessing ‘modified’ child sexual abuse material (CSAM) of child celebrities.

- In December 2023, the Stanford Internet Observatory identified more than 3,200 images of suspected CSAM in the LAION-5B (on GitHub) training set, the data behind popular generative AI platform, Stable Diffusion.

- In January 2024 alone, five states (CA, FL, UT, OH, and SD) proposed or passed legislation specifically addressing AI-generated CSAM.

GitHub is the source responsible for all the concerns outlined above. 2023 was a year of unprecedented growth and fear, marked by sporadic ‘whack-a-mole’ tactics to prevent the spread of AI-generated CSAM. Yet, with the exception of one instance, GitHub’s role in hosting the source codes and repositories responsible for creating AI-generated CSAM escaped public scrutiny, allowing GitHub to continue hosting such sexually exploitative and potentially illicit codes with no accountability.

Lives are being ruined, reputations destroyed, and the innocence of children stolen. Yet, GitHub remains silent and inactive, prioritizing developers over the victims impacted by the technology being developed.

Minors’ Accessibility and Exposure to Pornography and Sexually Explicit Content

NCOSE is deeply concerned about the extent of features on GitHub that may increase risk of harm to minors, such as limited content moderation policies and a lack of caregiver and teacher/administrative controls.

GitHub rates itself as 13+ and actively promotes numerous educational opportunities for students from middle school to college to learn software development and coding. These offerings are geared towards minor-aged users and student-teacher collaboration and development on GitHub, and over 1.5 million students had signed up for the Student Developer Pack by 2019. GitHub allows teachers and administrators to connect GitHub accounts to learning management systems such as Google Classroom, Canvas, and Moodle. The use of Google Classroom in primary education rose exponentially during the pandemic, surpassing 150 million student users by 2021. GitHub requires a valid email to create an account but fails to implement other basic safety features for students or underage users, such as age verification and meaningful content moderation.

NCOSE researchers did not find evidence of teacher/administrative safety controls for underage student users. While teachers can restrict public access to joining classroom repositories, “by default visibility to student repositories is public.” GitHub places the responsibility on administrators to upgrade their accounts to access private student repository capabilities – for a fee. Every child should be safe online, especially in an environment promoted for his or her education and learning services. Further, NCOSE researchers also did not find evidence of parental controls for underage users. GitHub is asking parents and teachers to put a price on their child’s safety. This feature should be free and on by default.

Notably lacking are content filters from GitHub and its repositories. For example, a teacher asked the GitHub community whether the previously banned AUTOMATIC1111 model could “filter for obscene language and imagery” so their elementary classroom could learn how to use AI.

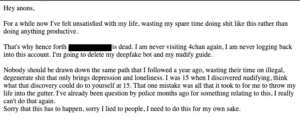

In another example, NCOSE researchers found a nudifying and deepfake pornography repository created by a 15-year-old boy, who was trying to leave GitHub after becoming embroiled in the creation and dissemination of deepfake and nudity-related codes and imagery. His narrative embodies the consequences of GitHub’s lackluster moderation efforts. From exposure to harmful content to legal confrontations and psychological repercussions, it is evident that GitHub’s current measures are insufficient.

Considering the open-sourced format and the abundance of sexually exploitative source coding, to prevent harmful consequences of exploitative technology on the platform GitHub urgently needs to implement the following:

- robust age verification systems

- parental controls

- proactive content moderation to better protect its youngest users

News Articles, Experiences of Abuse, and Academic Literature

Below is a list of news articles related to the exploitation facilitated by GitHub.

- Ars Technica, Toxic Telegram group produced X’s X-rated fake AI Taylor Swift images, report says, January 26, 2024, https://arstechnica.com/tech-policy/2024/01/fake-ai-taylor-swift-images-flood-x-amid-calls-to-criminalize-deepfake-porn/

- Forbes, Alexandra Levine, Stable Diffusion 1.5 Was Trained on Illegal Child Sexual Abuse Material, Stanford Study Says, December 21, 2023, https://www.forbes.com/sites/alexandralevine/2023/12/20/stable-diffusion-child-sexual-abuse-material-stanford-internet-observatory/

- Time, ‘Nudify’ Apps That Use AI to ‘Undress’ Women in Photos Are Soaring in Popularity, December 8, 2023, https://time.com/6344068/nudify-apps-undress-photos-women-artificial-intelligence/

- Wired, Deepfake Porn Is Out of Control, October 16, 2023, https://www.wired.com/story/deepfake-porn-is-out-of-control/

- The Guardian, Paedophiles using open-source AI to create child sexual abuse content, says watchdog, September 13, 2023, https://www.theguardian.com/society/2023/sep/12/paedophiles-using-open-source-ai-to-create-child-sexual-abuse-content-says-watchdog

- Bloomberg Law, Google and Microsoft Are Supercharging AI Deepfake Porn, August 24, 2023, https://news.bloomberglaw.com/artificial-intelligence/google-and-microsoft-are-supercharging-ai-deepfake-porn

Fast Facts

The top three most popular repositories by stars and forks with the tag "deepfakes" are used for the purpose of creating synthetic sexually explicit media (SSEM).

The model used by Unstable Diffusion (AI-generated pornography site) is the 2nd most popular repository on GitHub for first time contributors.

Technological interest in deepfakes is the most popular reason men report for watching deepfake pornography (57%), followed by celebrity interest (48%) and desires to fulfill personalized sexual fantasies (36%).

GitHub hosts the source code to the software used to create 95% of deepfakes, DeepFaceLab, which directly sends interested users to the most prolific sexual deepfake website in the United States.

GitHub is one of the top 10 referral sites for Mr.DeepFakes.

Similar to GitHub

Hugging Face

Updates

Stay up-to-date with the latest news and additional resources

Recommended Reading

North Carolina, Charlotte Child Psychiatrist Is Sentenced To 40 Years in Prison for Sexual Exploitation of a Minor and Using Artificial Intelligence to Create Child Pornography Images of Minors

Resources

Is someone sharing explicit images of you (either real or fake) without your consent? Get help from the Disrupt Campaign.