A Mainstream Contributor To Sexual Exploitation

Where a child’s life can change forever in a snap

Content on Snapchat may be fleeting, but the impact of sextortion, grooming, and child sex abuse can last a lifetime. This app is dangerous by its very design.


Shawn,* an energetic and ambitious 16-year-old, was an avid Snapchat user. Shawn was used to receiving friend requests from people he didn’t know on Snap. He was not used to unknown young women requesting nude images from him.

When Amanda* sent images of herself to Shawn, and asked him for images of himself, he hesitated. Eventually, he thought: what’s the harm? On Snapchat, the images would automatically disappear anyway…right?

Instead, Shawn was blackmailed. ‘Amanda’ was actually a criminal ring that threatened to send Shawn’s nude images to his family and friends if he did not pay them thousands of dollars. Shawn tragically died by suicide hours later, in fear of the judgement he would face if his images were shared.

What happened to Shawn is unfortunately not an outlier. Snapchat remains one of the most dangerous apps for children due to the extreme risks of harm that they face on the platform.

In fact, Snap has recently been noted as:

- #1 platform where the National Center for Missing and Exploited Children saw children receiving unwanted sexual advances from predators in 2024

- #1 social media app where sextortion occurs, according to the Canadian Centre for Child Protection

- #1 parent-reported platform for sharing of child sexual abuse material

- #1 platform where minors have reported having a sexual experience online

The rampant exploitation on Snapchat is especially concerning given Snapchat’s widespread popularity – particularly with young people. The platform is used by over 100 million Americans, including more than 20 million teenagers. Disturbingly, it is the second most used app by children under the age of 13 (who aren’t even supposed to be on there!).

Snapchat is dangerous by its very design, something that has been noted repeatedly. When CEO Evan Spiegel testified at a January 2024 Congressional hearing about Big Tech’s role in facilitating the online sexual abuse of children, he likened the platform to the lightness of a phone call or face-to-face conversation. In fact, Snapchat often stresses that it tries to mimic real-life interactions. One of the senators immediately quipped: “Did you and everyone else at Snap really fail to see that the platform was the perfect tool for sexual predators?” More than a decade ago, a digital forensics firm director warned that Snapchat’s ephemerality was a design feature predators would find comforting: “Kids who are bullied or adults who are stalked may struggle to prove their cases. Predators can lure victims, comforted by the assumed ephemeral nature of the communication.” For years, NCOSE and industry experts have raised concerns over Snapchat’s deceptive sense of protection and privacy. Yet Snapchat’s design has remained largely unchanged since its inception, costing countless children their safety, their well-being, and even their lives.

After Snapchat was placed on the 2023 Dirty Dozen List (as it had been in previous years), it did implement several of our recommendations to reduce children’s access to sexually explicit content in public areas like Stories and Discover. These changes included improved detection and moderation of sexually explicit content, better visibility and content controls for parents in Family Center (which, sadly, fewer than 1% of Snap parents use), and dedicated resources for users on exploitation and abuse. NCOSE has also been assured that more changes are coming. While we applaud the steps Snap has taken to limit access to explicit content (and we bumped it down to the Watchlist in September 2023), our recent investigations have found that highly sexually suggestive and inappropriate content is still being pushed to minors even when they are not looking for it (see proof below).

Furthermore, many problematic features remain unchanged, leaving millions of children in harm’s way. To our knowledge, no substantive improvements have been made to Snap’s most dangerous aspect: the chats, which are where most of the grave harms happen.

In addition, Snap’s My Eyes Only feature, which is known to be used for storing CSAM, continues to be available to minors and predators alike. The caregiver monitoring tools in Family Center still fall short of providing adequate protections for kids. Although, as of January 2024, Snapchat automatically turns Content Controls ON for all new minor accounts joining Family Center, the option to “restrict sensitive content” is NOT available to teens who are not connected through Family Center. Alarmingly, only “200,000 parents use Family Center and about 400,000 teens have linked their account to their parents using Family Center.” That’s less than 1% of Snapchat’s underage users linked to a family account.

Why would Snapchat limit protections to only those teens (fewer than 1%) whose parents are using Family Center?

The changes that Snap has made are needed, but they are simply not enough. They took years of advocacy by NCOSE and other groups, placing millions of children in danger in the meantime. It took Snapchat one year to release parental controls for My AI, and two years to make any changes to Family Center at all. The changes they did make were not intuitive, and Snap still can’t get sexually suggestive and explicit content off of the Spotlight, Stories, and Discover pages, even after multiple updates to these features aimed at exactly this problem.

For these reasons, NCOSE is keeping Snapchat on the Watchlist for 2024 to continue informing the public of the still-present dangers on the app and to monitor Snapchat to ensure publicized changes are actually being implemented.

*Composite story based on several sextortion cases

Our Requests for Improvement

NCOSE holds that Snapchat is too dangerous for children and believes the minimum age for users should be at least 16. Common Sense Media concurs. In addition to this, we recommend Snap make the following changes immediately. Kids can’t afford to wait any longer!

- Automatically block sexually explicit images (especially those sent to minor accounts) and accompany them with warnings, resources, and prompts to report, block, and remove such accounts. Warn minors who are about to send nude images about the risks. Bumble, Apple, Google, and Discord use technology to proactively blur sexually explicit images before they’re viewed, and Apple sends warnings, so why can’t Snapchat?

- Identify, remove, and block accounts and bots that post and promote pornographic content and/or sell sex more efficiently and proactively. Despite the 2023 updates, such accounts and content are still accessible to, and suggested to, minor-aged accounts.

- Use available technology and blocking patterns to identify “suspicious adults” and further prevent them from engaging with minors. Stop suggesting any adult accounts to minors.

- Expand Family Center functions to allow parents to see what their children are exposed to on Stories and Spotlight; send alerts to parents when their children add or remove friends, settings are changed, or sexually explicit images are being sent or received.

- Default all minor accounts to the highest safety settings and make all safety tools (like Content Controls) available to all youth (not just teens connected through Family Center).

An Ecosystem of Exploitation: Grooming, Predation and CSAM

The core points of this story are real, but the names have been changed and creative license has been taken in relating the events from the survivor’s perspective.


Like most thirteen-year-old boys, Kai* enjoyed playing video games. Kai would come home from school, log onto Discord, and play with NeonNinja,* another gamer he’d met online. It was fun … until one day, things started to take a scary turn.

NeonNinja began asking Kai to do things he wasn’t comfortable with. He asked him to take off his clothes and masturbate over a live video, promising to pay Kai money over Cash App. When Kai was still hesitant, NeonNinja threatened to kill himself if Kai didn’t do it.

Kai was terrified. NeonNinja was his friend! They’d been gaming together almost every day. He couldn’t let his friend kill himself…

But of course, “NeonNinja” was not Kai’s friend. He was a serial predator. He had groomed nearly a dozen boys like Kai over Discord and Snapchat. He had purchased hundreds of videos and images of child sexual abuse material through Telegram.

Every app this dangerous man used to prey on children could have prevented the exploitation from happening. But not one of them did.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Child Sex Abuse Material Sharing & Sexual Enticement Interactions

It is well-documented that Snapchat is one of the most popular apps for teens. Unfortunately, Snapchat is also one of the most popular (if not the most popular) apps where children send sexually explicit images of themselves (a form of child sexual abuse material) and where they have sexual interactions with adults. In fact, CSAM sharing on Snapchat is so severe that almost half of the child sexual abuse imagery reports over the past seven years came from Snapchat.

Snapchat’s appeal is based on the ability to share images that disappear after ten seconds. This feature has allowed the app to flourish into the perfect platform for predators to trade CSAM, and Snapchat has subsequently become one of the most frequently mentioned social media platforms used by pedophiles to search for, view, and share CSAM. It is also consistently among the top 5 most-reported platforms that CSAM offenders use to contact children, because the platform’s features make Snapchat appear to be a safe haven for this horrendous behavior.

If you don’t take our word for it, here is how many of our partners have ranked Snapchat:

- #1 parent-reported platform for sharing of child sexual abuse material (Parents Together, April 2023).

- #3 parent-reported platform for sexually explicit requests to children (Parents Together, April 2023).

- #1 platform where minors reported having had an online sexual experience (Thorn Report, November 2023).

- #3 platform where minor users were most likely to indicate they had experienced an online sexual interaction.

Grooming and online enticement cases

By design, Snapchat is the perfect hub for online enticement and sexual grooming of minors due to features like disappearing messages. News articles and podcasts frequently detail cases of predatory adults contacting, grooming, and even kidnapping minors to sexually assault them. According to the National Center for Missing and Exploited Children (NCMEC), Snapchat is the #1 location where online enticement of minors occurred in 2023.

- In a November 2023 Fortune article, a parent recounted the visceral feelings she experienced after a 20-year-old man groomed her daughter, ‘formed a relationship’ with her, and sold her drugs at her middle school: “It was a terrifying, gut-wrenching experience as an adult.”

- A February 2024 Scrolling2Death podcast episode detailed a recent case in which a UK predator initially connected with an underage girl on a different app but later moved to Snapchat because of its disappearing messages. He persuaded her to take a taxi 65 miles to his residence, where he sexually assaulted her.

- In January 2024, a BBC investigation revealed that Guernsey Police were warning parents about a group of predatory adults who were “sharing language with strong sexual or violent language and sexual images,” and who were encouraging children to share sexually explicit imagery of themselves. Police believed that, as of January 2024, more than 50 children were in the group, and they urged parents to ask their children whether they were in it.

Prevalence of Financial Sextortion on Snapchat

Sextortion

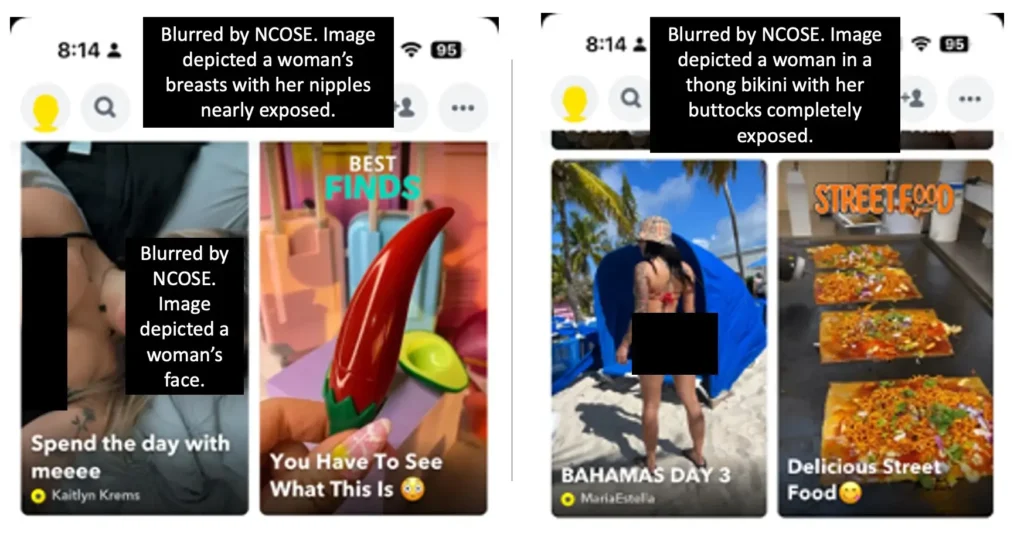

While Snapchat’s policies prohibit the use of unauthorized third-party apps, Snapchat has not informed the public of any actions it has taken to restrict access by such apps. Perpetrators often use these apps to secretly record and screenshot sexually explicit imagery of victims for blackmail purposes. Snapchat, closely followed by Instagram, is the platform with the highest number of sextortion reports.

Even Snap’s own research has found that two-thirds of Gen Z have been targeted for sextortion! (So why isn’t Snapchat doing everything it can to prevent this crime?)

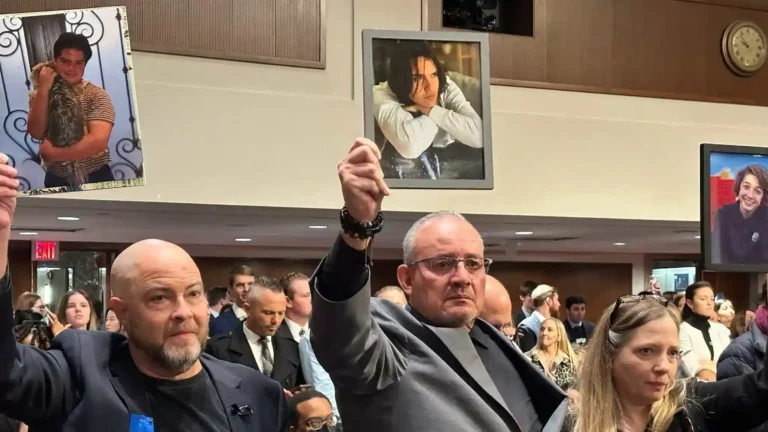

Worse, reports of minors dying by suicide because of sextortion on Snapchat are devastatingly common. From October 2021 to March 2023, the FBI and Homeland Security Investigations received over 13,000 reports of online financial sextortion of minors, involving more than 12,600 victims—primarily boys—that led to at least 20 suicides. In the last year, law enforcement agencies have received over 7,000 reports of online sextortion of minors, resulting in over 3,000 victims who were mostly boys, with more than a dozen victims having died by suicide.

Stories of minors dying by suicide after being sextorted appear frequently in the news [1], [2], [3], [4]. As the #1 platform for sextortion, Snapchat plays a critical role in fueling this epidemic.

How many more children will lose their lives or suffer lifelong trauma before Snap makes significant changes to ensure its platform is safe for kids?

Evidence of sextortion cases involving Snapchat

“Teen sextortion victim foils middle school coach’s scheme, uncovers identity: docs,” Fox News, July 31, 2023.

- A middle school basketball coach ran a sextortion scheme that targeted at least four underage girls and spanned almost three years before a teenager exposed his crimes. He was sentenced to 30 years in federal prison.

- The 27-year-old predator would create fictitious social media profiles, as well as a profile under his own name, to “threaten, coerce and manipulate” his victims to “engage in sexual activity with him.”

- Offender: “Generally speaking, my scheme was to use my personal Snapchat account, as well as accounts I created in order to pose as fictitious males, to obtain sexually explicit images and videos from the minors. Further, I also used this scheme to convince at least one minor to engage in sexual activity with me.”

“‘IDK what to do’: Thousands of teen boys are being extorted in sexting scams,” Washington Post, October 2, 2023.

- This article detailed multiple cases of sextortion on Snapchat involving teenage boys aged 15 to 17. It specifically outlined cases in which the boys had told their parents about the situation; one family had even been using Life360 at the time their son was extorted.

“Teenage boy who fell prey to ‘sextortion’ plot ploughed his 4WD into grandmother’s car on the Sunshine Coast, killing her instantly,” Daily Mail, January 24, 2024.

- A 17-year-old boy who was being sextorted attempted to die by suicide by driving his car into another vehicle. The other driver, a 63-year-old woman, tried to swerve out of the way, but it was too late; she died on impact. The teenager sustained injuries that were not life-threatening. He was sentenced to 3 years in jail.

Exposure to Sexually Explicit Content

Many of the improvements Snapchat has made over the last several years have focused on limiting the rampant pornography and sexually explicit and suggestive content promoted to minor-aged accounts. We have noted a significant improvement in this area: since being placed on the 2023 Dirty Dozen List, Snap has made it much harder for prostitution and pornography bots to advertise, and it has also made it more difficult for accounts to post links to such sites. However, even after these updates, NCOSE’s corporate advocacy team has STILL found links on Snap to sites that encourage sexual exploitation.

Furthermore, highly sexualized content is still easily accessible in the more “public” and curated areas of Spotlight and Stories, despite Snapchat’s assurances that those areas were significantly improved as of August 2023. All minor accounts are supposedly set to the highest content setting to filter out sexually suggestive material.

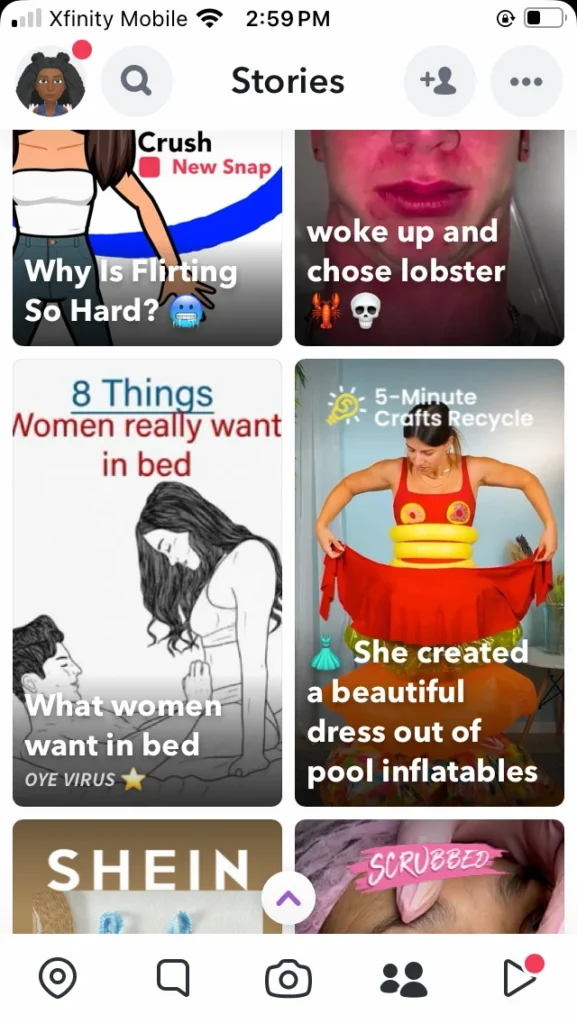

NCOSE researchers conducted quick tests in January 2024 to assess whether the Discover section had improved.

One researcher conducted tests a few days prior to the January 31 hearing. It took less than 10 minutes on a new account set to age 13 to organically find the following videos and images in Snapchat’s Discover section:

- “What women want in bed” with extremely graphic language, suggestions to “take control and grab us,” and alcohol promotion to “set the mood.”

- A video of a woman pole dancing with the heading “My New Job”

- A teen girl grabbing a teen boy’s private area while in the bathroom

Twenty more minutes led our researcher to:

- “Kiss or slap,” a video series where young women in thongs ask men and other women to either kiss them or slap them (usually on the behind)

- Girls in dresses removing their underwear

- Clothed couples simulating sex on beds

- Teenage-looking girls doing jello shots

- Cartoons humping each other

Seen in the Discover section within minutes of creating a new 13-year-old Snapchat account, without searching for anything.

All of this is after Snapchat promised NCOSE and publicly shared that they made major improvements to rid Discover of inappropriate, sexualized content.

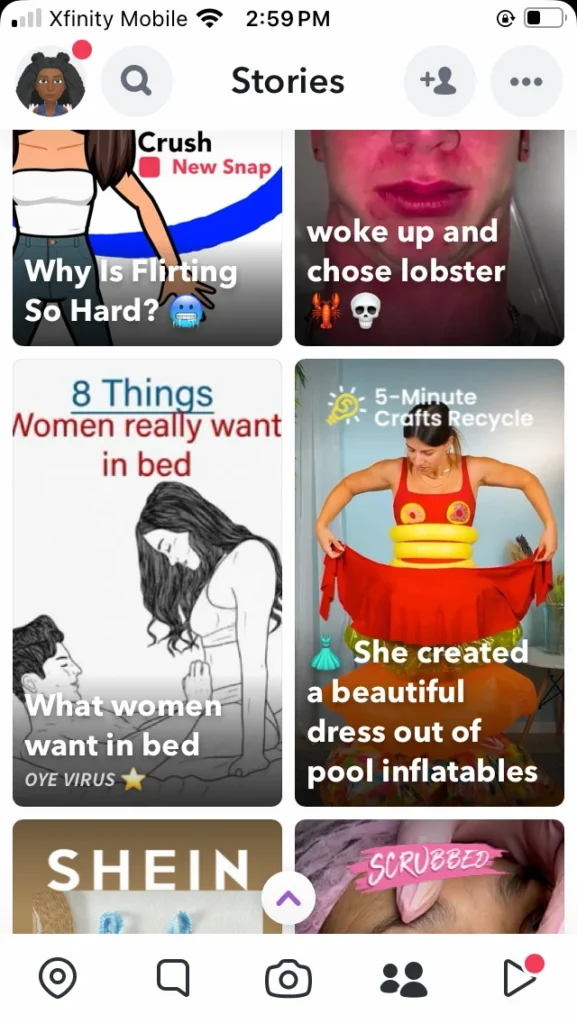

A second researcher ran tests on January 25 on a new 14-year-old account. Without having done ANY searches, within one minute the Stories populated on the account’s Discover page depicted sexually explicit imagery with partially clothed individuals, as well as sexually suggestive content. [additional screenshots available upon request]

Seen in the Discover section within minutes of creating a new 14-year-old Snapchat account, without searching for anything.

Other child safety advocates have found similar results.

“Infrequent/mild sexual content”? We vehemently disagree with this description.

In fact, recent studies note Snap as a go-to place for pornography and sexually explicit content:

- Ranked as the #2 most popular social media platform (excluding Twitter and dedicated pornography sites) where children were “most likely to have seen pornography” (UK Children’s Commissioner, January 2023).

- Among the highest rated platforms for parent-reported exposure to inappropriate sexual content (Parents Together, April 2023).

Dangerous and Risky Features

The Chat (aka “Snaps”), the riskiest section of Snapchat and the literal purpose of the app, has seen no substantive reform. Even after being named to the 2023 Dirty Dozen List, Snapchat failed to change the most dangerous area of its platform.

Chat

The Chat, or “Snaps,” is where children are most often harmed: it’s where child sexual abuse material is captured and shared, and it’s where sexual grooming and sexual extortion happen. Snapchat could be taking basic, commonsense measures like proactively blocking sexually explicit content sent to and from minors, but it doesn’t.

Bumble, a dating app for adults, automatically blurs sexually explicit content. So why can’t Snap?

Encouragingly, automatic blurring of sexually explicit imagery is an emerging industry standard, with some of the largest Big Tech corporations having either made this change or announced automatic blurring as an upcoming feature in 2023:

- Apple: Defaulted blurring of sexually explicit imagery for iOS devices used by children 12 and under. This was an NCOSE recommendation.

- Google: Automatically blurred and blocked sexually explicit content for all minor-aged accounts and proactively blurred explicit content for all adult accounts. This was an NCOSE recommendation.

- Discord: Announced automatic blurring of sexually explicit imagery for all minors aged 13 to 17 across all areas of its platform. This feature is currently being rolled out.

Again, the question remains: Why hasn’t Snapchat taken these steps?

My AI

In addition to the Chats (Snaps), one of Snapchat’s newest features, My AI, is also one of its most controversial releases – and for good reason.

In February 2023, Snap’s My AI was released without warning and was quickly exposed for advising a researcher posing as a 13-year-old on how to have sex with a 31-year-old they met through Snapchat! Did Snap think that saying “sorry in advance” with a lengthy disclaimer somehow excused any potential harm to children from this experimental product that clearly wasn’t ready to be unleashed on minors? My AI should NOT be available to minors at all (a sentiment more and more parents share).

Although Snapchat promised in March 2023 to add parental controls around the chatbot, it took a full year to finally roll out these controls. They included the ability for parents to stop My AI from responding to their teens’ chats.

Meanwhile, Ofcom’s latest research finds that Snapchat My AI is the generative AI tool most widely used by online children, with half of UK online 7-17-year-olds reporting in June 2023 that they had used it.

By May 2023, My AI was still giving inappropriate advice to teens. A May 2023 investigation even referred to My AI as ‘A pedophile’s friend.’

In January 2024, just two weeks before Snapchat CEO Evan Spiegel was due to testify at the January 2024 Congressional hearing, Snapchat finally released a feature that allows parents to prevent their teens from chatting with My AI.

View our 2023 proof to see some of the conversations our 14-year-old researcher had with My AI from April 19 – 29 (these conversations were after Snap added so-called safeguards and thrust it onto everyone’s accounts without their consent).

Other risky features like Snap Map and Live Location, See Me in Quick Add, and My Eyes Only (which has long been called out by child safety advocates and police as a tool for keeping child sexual abuse material) continue to be available to minors.

Deceptive Information Around Safety and Well-being

In a March 2023 blog, NCOSE highlighted flaws in Snapchat’s Family Center. However, our concerns remain unaddressed even after Snapchat implemented updates in August 2023.

- Content Controls remain inaccessible to teens who are not part of the Family Center: This is unacceptable considering Snapchat’s CEO testified that less than 1% of American teens are connected to the Family Center.

- Content Controls only apply to Stories/Spotlight/Discover: They do not apply to the areas kids use most – the most dangerous areas: Snaps, Chats, and Search.

NCOSE and other industry experts are still seeing evidence of minor-aged accounts being shown sexually suggestive and explicit content. Even brand-new minor-aged accounts, without having conducted any searches, are recommended such content.

Instead of prioritizing safety on Snapchat by removing risky features, blurring explicit content, and making all safety tools available to minors, Snap shifted the burden to caregivers by launching and updating Family Center (a tool NCOSE and other industry experts have repeatedly found ineffective).

Snapchat also claimed to have strengthened friending protections by increasing the requirement for mutual friends between 13-to-17-year-olds and other users, aiming to limit connections with unfamiliar individuals further. However, this claim is highly dubious as Snapchat still fails to define the threshold for “enough mutual friends,” making this feature’s effectiveness uncertain. NCOSE and other child safety experts have found that even brand-new minor-aged accounts are still suggested to random users, including potentially predatory adults, indicating gaps in the platform’s friending protections.

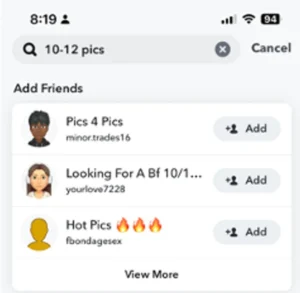

This image was captured just days before the January 2024 hearing after searching for “10-12 pics.” This search should not have returned any results, but it did.

Not only are Snapchat’s policies and products deceptive – so are their practices.

- Only a few days prior to the January 2024 hearing, Snapchat announced its official support of the Kids Online Safety Act (KOSA), making it the first social media company to support the legislation. However, soon after Snap announced its support, it was revealed that Snapchat, like Meta, had long been funding NetChoice, which uses its funds to lobby against KOSA.

- In March 2024, Snapchat hosted a series of parent safety panels touting its dedication to child online safety and to parents through its many features and tools. Yet Snapchat failed to discuss the rampant sexual abuse and exploitation that occur daily on its platform because of the inadequacy of those safety features and tools.

Fast Facts

#1 location where online enticement of minors occurred (NCMEC, 2024)

#1 platform used for sextortion (Canadian Centre for Child Protection, November 2023)

Top 5 most reported platforms that CSAM offenders use to contact children (Suojellaan Lapsia, Protect Children, February 2024)

#1 platform where 9-17-year-olds reported having an online sexual experience with someone they thought was an adult (Thorn Report, November 2023)


Updates

Stay up-to-date with the latest news and additional resources

Bark Review of Snapchat

Recommended Reading

Resources

- Resource for teens to remove their sexually explicit content: TakeItDown.NCMEC.org

- Resource for adults to remove image-based sexual abuse: StopNCII.org

- Social Media Victims Law Center: Snapchat Assessment and one-pager: "Snap Knows"

- The Hill (article by Lina Nealon): "Congress must force Big Tech to protect children from online predators"

![[PART 1/4] Snapchat test with shocking results](https://endsexualexploitation.org/wp-content/uploads/scrolling2death.jpg)