A Mainstream Contributor To Sexual Exploitation

Music Porn For Everyone

Sexually explicit images, sadistic content, and networks trading child sex abuse material on its platform prove Spotify is out of tune with basic child safety measures and moderation practices.

Porn on Spotify?? Child predators on Spotify?? CSAM-sharing networks on Spotify??

Yes, that’s right – and it’s gotten worse!

We are not talking about suggestive album covers or “racy” lyrics.

When NCOSE first started investigating Spotify for the 2023 Dirty Dozen List, we were genuinely shocked at how pervasive hardcore pornography and sexual exploitation were on the popular streaming app. Media outlets and other child safety advocates were also raising the alarm, and Spotify garnered even more scrutiny after a high-profile case in which a child was groomed and exploited through the platform: predators used playlist titles as a form of “chat,” asking the child to upload sexually explicit material of herself as Spotify thumbnails.

Incomprehensibly, not only has Spotify failed to take any significant action to stem sexual exploitation on its platform in the past year, but the evidence suggests the problem has gotten even worse!

In fact, NCOSE has found what appears to be a network of individuals – minors and adults – trading, sharing, soliciting, and posting hardcore pornography, what looked to be child sexual abuse material (CSAM), deepfake pornography of female celebrities, and even self-harm imagery. Yet the platform still doesn’t have a straightforward way to report these crimes and harms in the app – an industry standard that could literally save children’s lives!

While Spotify did update its policies in October 2023 to prohibit “repeatedly targeting a minor to shame or intimidate” and “sharing or re-sharing non-consensual intimate content, as well as threats to distribute or expose such content” – words on paper mean nothing without enforcement. Despite these updates, Spotify’s failure to effectively implement both old and new policies seems to have led to an increase in harms.

Pornography (including content that normalizes sexual violence, child sexual abuse, and incest) is still easily found on Spotify in the form of thumbnails graphically depicting sexual activity and nudity, as well as “audio pornography” (recordings of sex sounds or sexually explicit stories read aloud) and in “video podcasts.”

Spotify claims to prohibit sexually explicit content, but clearly this policy is very poorly enforced or too vaguely defined. For example, in February 2024, Spotify launched a new category of audiobooks, “Spicy Audiobooks,” and introduced the Spice Meter – a brand new feature that categorizes audiobooks’ ‘spiciness’ level, allowing users (including kids) to choose from sexually suggestive pieces to pornographic audiobooks “with nothing left to the imagination.”

And by and large, content filtering (even through parental controls) does not work.

Spotify assures parents: “We have designed Spotify to be appropriate for listeners 13+ years of age.” Clearly, they have much more work to do to turn this lie into a truth!

Review the proof we’ve collected, read our recommendations for improvement, and see our notification letter to Spotify for more details.

Our Requests for Improvement

- Implement detection technology to proactively detect violative imagery, grooming, and child sexual exploitation, and promptly remove all users who engage in this behavior and all content used in grooming cases

- Develop a reporting category for child sexual exploitation and CSAM and make reporting procedures for ALL content (album covers, podcasts, podcast videos, transcripts, titles, canvas, etc.) easily accessible from both the mobile and desktop apps

- Implement a strict explicit content filter that applies to all content types on the platform: block for minors, blur for adults. Only caregivers should be able to adjust the settings

- Default all video content to OFF for minor-aged accounts and have it locked under parental controls

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Hardcore Pornography and Sexually Explicit Visual Content Easily Found

Spotify’s Platform Rules prohibit “sexually explicit content,” which they say “includes but may not be limited to pornography or visual depictions of genitalia or nudity presented for the purpose of sexual gratification.” However, despite these policies, such content is easily found on Spotify.

Inexplicably, Spotify also places audio pornography in children’s radio stations, and even suggests it to minor-aged users after listening to a children’s podcast or song.

Here’s an inside look at Spotify’s backward logic (and failing algorithms):

- A child listens to “Julian at the Wedding” – ‘a joyful story of friendship and individuality’

- Spotify suggests in ‘up next’: “December 14: Cum in Your White Gown – An Erotic Christmas Calendar”

News outlets and influencers have also been raising concerns about the pornographic content in thumbnails, as well as in audio content.

- Spotify Has a Porn Problem — Here’s What Parents Need to Know, Bark, January 27, 2023

- What’s Going On With the Hardcore Porn Images on Spotify?, Vice, July 26, 2022

- Spotify Overrun with Pornographic Images + Audio Content, Loudwire, August 2, 2022

- Hey Spotify, Children Should NOT Be Exposed To This. Fix This Now., The Comments Section, December 21, 2022

- If you search “music videos” on Spotify, dozens of pornographic tracks appear featuring sexually explicit album covers, Twitter, November 29, 2022

Content Promoting Deepfake Pornography, Sexual Violence, and Abuse

Spotify’s platform rules prohibit “Content that promotes manipulated and synthetic media as authentic in ways that pose the risk of harm.” However, using a minor-aged account with the explicit content filter on, NCOSE researchers were exposed to graphic deepfake pornography of female celebrities within a larger collection of pornography and suspected CSAM.

Content normalizing or trivializing illegal activities such as bestiality and sexual abuse of minors was being solicited through profile and playlist descriptions as well as within podcasts – both of which violate Spotify’s policies.

CSAM-Sharing Networks and Grooming of Children

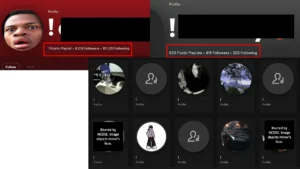

In February 2024, just as NCOSE was completing its research on Spotify, one of the researchers searched “!” and stumbled upon hundreds of profiles, and the playlists they harbored, within what was clearly an organized network.

The network contained hundreds of suspicious Spotify profiles which appeared to be dedicated to soliciting or sharing sexually explicit imagery of adults and minors. For example, one user had embedded their Instagram, Snapchat, and Discord profiles within their Spotify profile description and bio. Another account embedded their email address followed by a sentence asking other users to tag them in their playlists, and vice versa, to either ask for or send sexually explicit imagery of themselves or others.

In addition to strong indicators of actual child sexual exploitation, NCOSE found a concerning amount of content in which it was not clear whether actual child sexual abuse was involved, but which certainly hinted at and fetishized child sexual abuse.

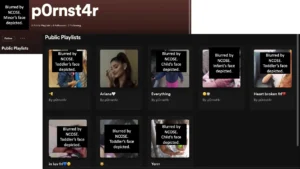

For example, the search “p0rn” returned a profile called “p0rnst4r” whose playlist cover art depicted infants, toddlers, and prepubescent children. This profile was also within the network NCOSE identified, suggesting the user’s involvement in or promotion of child sexual abuse, specifically the sexual abuse of infants and toddlers.

In January 2023, a case of an 11-year-old girl being groomed and sexually exploited on Spotify made news headlines. Sexual predators communicated with the young girl via playlist titles and encouraged her to upload numerous sexually explicit photos of herself as the cover image of playlists she made.

- Warning over ‘sickening’ sexual grooming on Spotify as mother reveals how her 11-year-old daughter was tricked into uploading explicit pictures by paedophiles, Daily Mail, January 6, 2023

- Claims schoolgirl, 11, was groomed on Spotify, BBC, January 13, 2023

- Stockport MP raises Spotify grooming case in parliament after girl, 11, posted explicit pictures of herself, Manchester Evening News, January 12, 2023

- ‘The bottom of the world fell away when I found my daughter, 11, was groomed on Spotify’, Mirror, January 18, 2023

Survivor-advocate Catie Reay recently released a TikTok showing evidence of grooming on Spotify, such as playlists that tag other users and ask them to send sexually explicit content of themselves.

- ⚠️ PSA ⚠️ for parents with minors using Spotify!!! When we say it’s happening everywhere… We literally mean EVERYWHERE., TikTok, January 3, 2023

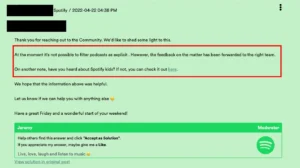

Yet despite all of this evidence, Spotify still doesn’t have a straightforward, in-app method to report potential crimes and violative content – an industry standard that could literally save children’s lives!

When our researchers attempted to report suspected CSAM to Spotify, they had to follow a convoluted process of email verification using a code before submitting a report. And even then, there was no option to specifically flag CSAM to the platform. A clear reporting mechanism for CSAM is industry-standard, and there is no excuse for Spotify’s neglect in creating this basic measure, which could literally save a child’s life if addressed appropriately.

This is extremely concerning considering we raised the issue of cumbersome and insufficient reporting processes in our letter to Spotify informing them of their place on the 2023 Dirty Dozen List (Spotify ignored our request to meet with them).

As of February 2024, Spotify still lacks a direct, in-app reporting option for ANY content.

Below are the types of content and categories missing from Spotify reporting options:

- Child sexual abuse material (CSAM) and child sexual exploitation

- Album cover art that may include hardcore pornography, genitalia, etc.

- Text in profile or playlist descriptions (how sexual grooming and CSAM sharing occurs)

- Podcasts (audio, video, text, etc.)

- Songs

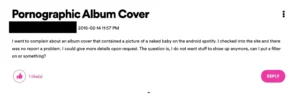

Inadequate “Explicit Content” Filter Exposes Children to Porn

Spotify offers an “explicit content filter,” which they assure parents will block “content which may not be appropriate for teens.” (Note: If caregivers want to allow or block anything with an explicit tag for their kids, they need to pay more for the Premium Family plan. And even then, the filter doesn’t work. Keep reading.)

Content which is blocked by the filter is marked with an “E.” However, NCOSE researchers found that the majority of pornographic content on the platform is not marked explicit, and therefore not blocked by the filter.

Spotify also relies on uploaders to mark their own content as explicit, leaving users with no means of protection when uploaders fail to follow Spotify’s policies.

Further, Spotify’s explicit content filter does not apply to text in titles, lyrics, or transcripts (yes, kids can read on Spotify!), or to visual imagery such as profile pictures, album and playlist cover art, video podcasts, canvases, or Clips.

For example, using a minor-aged account with the explicit content filter on, NCOSE researchers searched “porn” and the first suggestion was a video podcast displaying graphic hentai pornography promoting and normalizing prostitution, misogyny, and sexual abuse.

We have demonstrated how thumbnails can often be sexually explicit or highly inappropriate for teens, and the same is true for descriptions, which can detail sexual encounters.

Parent, Caregiver, and Teen Reviews Demonstrating Harm on the App

Below are just a few reviews about the dangers of Spotify.

A parent took to Spotify’s feature suggestion page in 2012 asking for parental controls. Eight years AND 92 pages of comments later, Spotify said it had implemented parental controls, but really, it had only launched Spotify Kids. While Spotify offers an explicit content filter, total caregiver control is only available to Premium Family plan subscribers.

Spotify’s answer… “You can’t.”

Fast Facts

68% of teens have used Spotify for streaming services over the last 6 months, with 44% of teens opting to subscribe/pay for Spotify services

30.5% of music streaming subscribers worldwide have a subscription with Spotify – almost double the share subscribed to Apple Music.

Spotify consistently flies under the radar and is slow to act. It took Spotify EIGHT years to implement ‘parental controls,’ and even those amount only to Spotify Kids and controls limited to Premium Family plan subscribers.