A Mainstream Contributor To Sexual Exploitation

"Do more with your money"

That shouldn’t apply to buying sex and paying for abusive content. This peer-to-peer payment app appeals to pimps, predators, and pedophiles looking for a covert way to conduct criminal activity.


Updated 7/12/24: A new report from Thorn and NCMEC released in June 2024, Trends in Financial Sextortion: An Investigation of Sextortion Reports in NCMEC CyberTipline Data, analyzed more than 15 million sextortion reports to NCMEC between 2020 and 2023. The report found:

- Cash App (25.7%) is the #1 payment method mentioned in sextortion reports, followed by gift cards (25.6%), PayPal (17.8%), and Venmo (9.4%).

- Mapped over time, Cash App and gift cards have increased slowly as the dominant payment methods in sextortion reports relative to all other payment apps.

- Despite being the most commonly mentioned payment platform in sextortion reports examined in this study, there were no electronic service provider (ESP) reports of sextortion from Cash App (Block Inc.) to NCMEC in this analysis, while competitor PayPal Inc. (which includes both Venmo and PayPal) did make sextortion reports.

Updated 7/2/24: After being named to the 2024 Dirty Dozen List, Cash App is now hiring an Anti-Human Exploitation and Financial Crimes Program Manager. NCOSE is hopeful this will be a first step toward other improvements on the platform, as the new role involves “leading, designing and implementing a program focused on combating human exploitation on the Cash App platform with a focus on preventing the sale of child sex abuse material (CSAM) and payments which involve human trafficking,” including “implementing tools to enhance detection capabilities.” Cash App began seeking to fill this role in June, just a couple months after NCOSE sent the platform a letter asking them to implement proactive CSAM, grooming, and trafficking detection and moderation features, among other recommendations.

On Cash App, illegal and exploitative transactions are all too common

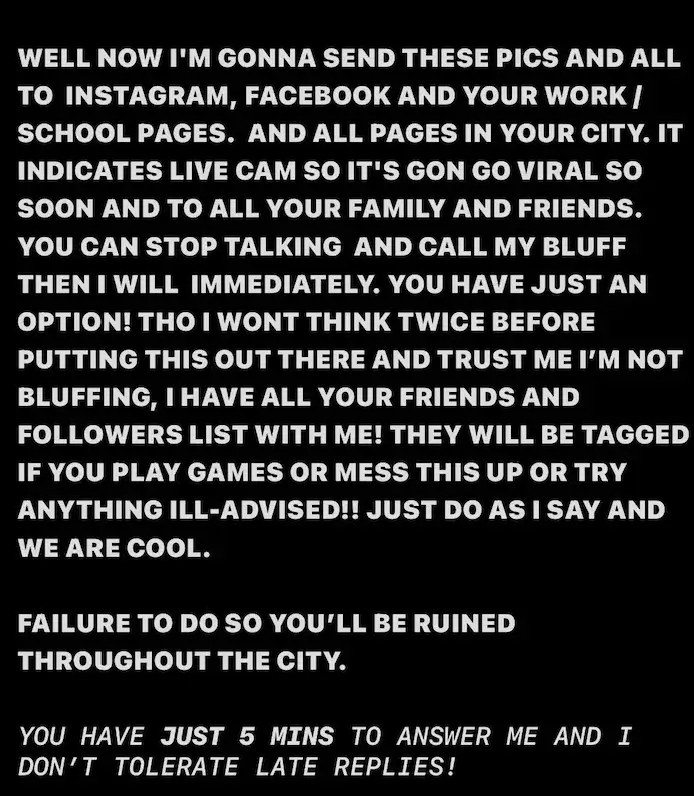

15-year-old Adrian* met a teenage “girl” on Snapchat and began exchanging sexually explicit photos. His face appeared in every picture he sent. She never showed her face…

An hour into their messaging, he received the following photo in his Instagram direct messages, along with the message “Cash App me at [$Cashtag].”**

*Name changed to protect identity.

**A $Cashtag is the unique identifier attached to each Cash App account. The below image is an actual screenshot taken from Reddit.

Popular payment transfer platform Cash App is consistently ranked by law enforcement and other experts as the top payment platform used for sextortion, buying and selling of child sexual abuse material (CSAM), prostitution, sex trafficking, and a host of other crimes.

Cash App’s very design appeals to bad actors. The anonymity, quick transfer capabilities, and lack of meaningful age and identity verification allow illegal transactions to fester. In fact, a former Cash App employee quipped: “Every criminal has a Square cash app account.”

Recent reports and news articles show Cash App’s role as a mainstream facilitator of sexual exploitation and abuse. For example, in 2021, the senior director at Polaris, the anti-trafficking non-profit responsible for the U.S. trafficking hotline, said, “…when it comes to sex trafficking in the U.S., by far the most commonly referenced platform is Cash App…It is probably the most frequently referenced financial brand in the hotline data.” Cash App responded in the same article, saying that it rejects all payments associated with sex trafficking and other crimes…

That’s hard to believe when the 2023 Federal Human Trafficking Report found that between 2019 and 2022, Cash App was the most identified payment platform used in commercial sex transactions, followed by PayPal and Venmo.

The Department of Justice also frequently publishes press releases on indictments and sentencings that detail Cash App’s use to facilitate the commercial sexual exploitation of minors.

Even popular culture elevates Cash App as the preferred payment method for buying sex!

As the most preferred peer-to-peer money transfer app among teens and adults alike, Cash App has even greater responsibility to substantively change its very structure and operations to ensure a safe and legal environment.

Until Cash App is no longer crawling with criminals, use caution with this platform!

Our Requests for Improvement

- Implement robust age and identity verification at account sign-up, and retroactively for all existing accounts.

- Require adult sponsorship for minor-aged accounts at sign up. Peer-to-peer transactions should fall under parental controls and adult sponsorship.

- Default minor-aged accounts to the highest safety setting. Minor-aged accounts should be private, their $Cashtags should not be publicly searchable, and minors should have to be friends with anyone requesting to send money to or receive money from their accounts.

- Implement proactive CSAM, grooming, and trafficking detection and moderation features, in addition to publishing an annual transparency report detailing accuracy of proactive detection and moderation, user reports, and removal rates.

- Begin reporting CSAM to the National Center for Missing and Exploited Children (NCMEC) Cyber Tipline.

An Ecosystem of Exploitation: Grooming, Predation and CSAM

The core points of this story are real, but the names have been changed and creative license has been taken in relating the events from the survivor’s perspective.


Like most thirteen-year-old boys, Kai* enjoyed playing video games. Kai would come home from school, log onto Discord, and play with NeonNinja,* another gamer he’d met online. It was fun … until one day, things started to take a scary turn.

NeonNinja began asking Kai to do things he wasn’t comfortable with. He asked him to take off his clothes and masturbate over a live video, promising to pay Kai money over Cash App. When Kai was still hesitant, NeonNinja threatened to kill himself if Kai didn’t do it.

Kai was terrified. NeonNinja was his friend! They’d been gaming together almost every day. He couldn’t let his friend kill himself…

But of course, “NeonNinja” was not Kai’s friend. He was a serial predator. He had groomed nearly a dozen boys like Kai over Discord and Snapchat. He had purchased hundreds of videos and images of child sexual abuse material through Telegram.

Every app this dangerous man used to prey on children could have prevented the exploitation from happening. But not one of them did.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Sexual Grooming, CSAM Buying and Selling, and Child Sexual Exploitation

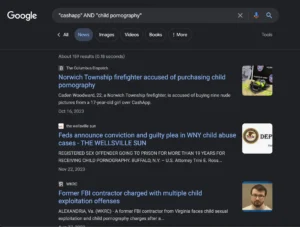

Cash App’s insufficient age and identity verification measures have made it a hotspot for child sexual abuse and exploitation, including sexual grooming and CSAM trading. On February 27, 2024, a Google News search for “Cash App” + “child pornography” (the current legal term for CSAM) returned 159 articles detailing cases in which Cash App was used for buying/selling CSAM, sexual grooming of minors, and sexual extortion, compared to 36 results for competitor Venmo.

Search was conducted on February 27, 2024


Here are just a few press releases from the Department of Justice of recent indictments and sentencing detailing Cash App’s use to facilitate the commercial sexual exploitation of minors:

- “Former FBI Contractor Uses Popular Video Game Platform to Solicit Preteens for Child Sexual Abuse Material,” November 7, 2023

- “Westfield Child Predator Sentenced to 38 Years in Federal Prison for Seeking Sex with a 14-Year-Old and Trafficking in Child Sex Abuse Materials,” September 27, 2023

- “New Jersey Man Sentenced to 15 Years in Federal Prison After Grooming Minor Online and Transporting Her Across State Lines via Uber for Sex,” August 30, 2023

Several reports featured predatory adults asking for “small funds” because Cash App’s limit for non-verified accounts is $250 weekly and $1,000 monthly. However, a former Cash App employee revealed that Cash App does not place limits on the number of accounts an individual can have on a single device. As such, bad actors can easily use multiple unverified accounts to buy and sell CSAM at scale.

Predatory adults and minors often meet and interact on social media and messaging platforms prior to exchanging funds on Cash App. Many apps that are currently on the Dirty Dozen List or have been in previous years are used by predators to groom minors and exchange sexual imagery before moving to Cash App.

Buying and Selling of CSAM:

On Cash App, predatory adults can buy and sell CSAM with relative anonymity and ease. While Cash App’s own Terms of Service note that the company can remove content at any time, they also state, “we [Cash App] have no obligation to monitor any Content.” This “Wild West” approach provides a safe haven for predators to operate anonymously and with low risk.

A March 2023 report revealed that former Block employees (Block is the owner of Cash App) estimated that 40-75% of Cash App accounts they reviewed were fake. Many of the accounts employees reviewed had more than a dozen linked accounts. The report also showed just how easy it is to create and recreate accounts even if one of those accounts is banned. One former employee joked, “I mean honestly, we would all joke and we would just call it like ‘the web of lies.’”

Further, despite longstanding problems with CSAM buying and selling on the platform since its inception in 2013, Block has never submitted any reports of CSAM to the National Center for Missing and Exploited Children’s (NCMEC) Cyber Tipline. Meanwhile, Cash App’s competitor PayPal has been reporting to NCMEC since at least 2019.

Examples of cross-platform CSAM buying and selling involving the use of Cash App include:

- In January 2024, two adults were charged with production of child sexual abuse material (CSAM) after FBI agents found evidence that the two offenders were producing CSAM of an 11- to 12-year-old girl and exchanging CSAM images for small funds on Cash App.

- In November 2023, a former FBI contractor pleaded guilty to production and receipt of CSAM through Discord, in some cases coerced through Cash App payments.

- In October 2023, a man pleaded guilty to receipt of child pornography (i.e., CSAM) after he paid a minor girl via Cash App to send him CSAM through Snapchat.

- In September 2023, a man was charged with child exploitation and possession of child pornography (i.e., CSAM) after he posted and sold CSAM on Twitter, with at least one payment via Cash App.

Sexual Grooming and Exploitation:

- A February 2024 New York Times investigation into social media accounts that parents manage for their gymnast and dancer daughters revealed how moms posted their $Cashtags in their daughters’ profiles so fans could send money. Parents profited from the hyper-sexualization of their daughters, and male fans frequently demanded CSAM or asked parents in direct messages to commit abusive acts against their daughters.

- In June 2023, research published by the Stanford Internet Observatory, “Cross-Platform Dynamics of Self-Generated CSAM,” documented underage users posting $Cashtags in social media bios/profiles such as on Twitter, Instagram, or Discord, which predatory adults used to pay minors for sexually explicit imagery.

- A 2022 Forbes investigation “These TikTok Accounts Are Hiding Child Sexual Abuse Material In Plain Sight” revealed how minors on TikTok posted their $Cashtags during livestreams in which they undressed themselves or offered sexually explicit imagery.

- In September 2023, a 29-year-old man was arrested after investigators discovered he was selling child sexual abuse material (CSAM) for small sums of money using Cash App and advertising the imagery on multiple Twitter accounts.

Sexual Extortion:

Recent research has cited Cash App as one of the top digital payment platforms in cases of sextortion. For example, a 2022 study by the Canadian Centre for Child Protection (C3P) found Cash App to be the second most reported digital payment app identified by victim-survivors as being involved in their experiences (PayPal was #1). The FBI has released warnings and given presentations naming Cash App as one of the most frequently used digital payment apps in sextortion cases.

Quotes from Recent News Articles

“At first, the caller [predator] asked for her [13-year-old minor female] Cash App information and then for sexual photos and videos. The girl’s friend recorded a moment of the call when the scammer told [the minor female] that she and her friend needed to perform sexual acts on each other and tape it ‘so they can prove they don’t have any drugs inside their private parts.’”

- Hillsborough County Sheriff’s Office warns of ‘terrible’ sextortion scam reaching teens, 10 Tampa Bay, January 24, 2024, https://www.wtsp.com/article/news/crime/hillsborough-county-sextortion-scam/67-529f5ea5-0de0-46a1-846b-81fdc645f6d2

“The victim sent the blackmailer Cash App payments of almost $2,000 for the next four months. The blackmailer repeatedly threatened to go public with the photos but never did.”

- Sextortion schemes spike in Florida as people are duped into sharing explicit photos, ABC Action News, July 6, 2023, https://www.abcactionnews.com/money/consumer/taking-action-for-you/sextortion-schemes-spike-in-florida-as-people-are-duped-into-sharing-explicit-photos (Instagram and Snapchat)

Prostitution, Sex Trafficking

Cash App is being used to facilitate prostitution and sex trafficking among adults and minors. The 2023 Federal Human Trafficking Report found that between 2019 and 2022, Cash App was the most identified payment platform used in commercial sex transactions, followed by PayPal and Venmo. Cash App has also been involved in notable sex trafficking cases like the Matt Gaetz case.

In January 2024, investigators discovered an online sex trafficking ring in which payments were facilitated through Cash App. According to investigators, the sex trafficker was booking hotels in each city, posting images of sex trafficking victims on prostitution site “SkiptheGames,” and then using Cash App for the transactions.

A 2022 Forbes investigation, “For Sex Traffickers, Jack Dorsey’s Cash App is King,” outlined law enforcement’s concern with the uptick in sex trafficking cases involving Cash App. Forbes reported, “Searching a police database of sex ads for ‘Cash App’ in Waco returned 2,200 ads, compared to 1,150 for PayPal and 725 for Venmo… Similar data put together by the Arizona attorney general shows that between 2016 and 2021, there were 480,000 online sex ads where Cash App was listed as a form of payment, nearly double that of nearest rival Venmo at 260,000.” The Forbes investigation also reported nearly 900,000 results when Cash App was searched on Skipthegames.com, compared to 470,000 for PayPal and a similar number for Venmo.

NCOSE researchers replicated that search on Skipthegames.com on February 27, 2024 and found similar results for sex trafficking and prostitution ads over the last year. Searches for “Cash App” and “CashApp” collectively returned 203,500 results, compared to 64,000 for Venmo and 131,000 for PayPal.

NCOSE researchers also assessed sex trafficking and prostitution ads posted on Skipthegames.com during a one-hour period on February 27, 2024, and again found that Cash App was mentioned the most often (Cash App=23, PayPal=17, Venmo=15) and was most often the only payment option accepted (13 results Cash App only, 10 Cash App + another service).

Cash App’s failure to moderate and ensure safety on its platform with the required urgency results in the perpetuation of demonstrable harms.

Recent news articles detailing the use of Cash App for sex trafficking include:

- “Charlottesville man charged with running prostitution ring along East Coast,” WSLS, January 25, 2024

- “Municipal Court,” Salem News, January 12, 2024

- “Brooklyn man indicted in federal court for multiple kidnappings, rapes, and robberies,” U.S. Department of Justice, Press Release, August 24, 2023

- “Murders, manhunt and a prostitution ring: Okla. woman tied to statewide fugitive in deadly shooting saga,” WPDE, October 15, 2023

A March 2023 Hindenburg report found numerous songs describing Cash App as the preferred option to pay for prostitution and sex trafficking, including songs named after the app itself.

Even Cash App’s own employees have reported seeing patterns indicative of sex trafficking:

- “You see a lot of Lyft or Uber rides late, always late at night like between 11:00 PM and 5:00 AM, multiple rides in one night, things like that.”

- “You’ll see things like hotel purchases, and you’ll see (the device) travelling. So it’ll go like Cleveland, Ohio, and like a Motel 6 and then Columbus, Ohio, and Holiday Inn and then the next day they’re in Cincinnati, and then the next day they’re in Kentucky, and then they shoot over to Virginia, and you watch it travel.”

Unenforced Policies

Cash App’s policies on paper require users to be at least 13 years old, require users 13-17 to have an adult sponsor, and state that when users “attempt to cash out, send, or receive a payment using Cash App, you will be prompted to verify your account with your full name, date of birth, and address for security purposes.”

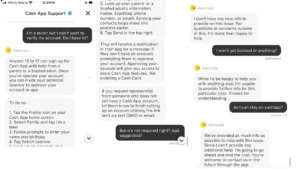

However, at no point were NCOSE researchers asked to verify their account by providing a street address when sending, receiving, or cashing out money during peer-to-peer (P2P) transactions. Despite Cash App stating that users must verify their age, Cash App doesn’t require users to upload any identifying documents or verify their Social Security number to confirm the user’s age.

NCOSE researchers were able to create an adult account, add a debit card that did not match the name of the Cash App account, and send, receive, and cash out funds without verifying their account.

When Cash App launched Cash App Teens in September 2021, they stated that Cash App was making financial transactions safe for teens between the ages of 13 and 17 who had the approval of a parent or guardian. But the reality is teens don’t even need to have an adult sponsor to use Cash App’s P2P transaction services. In fact, users don’t need to verify their age or ID at all when using P2P transaction services on the app.

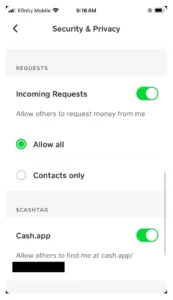

To make matters worse, all Cash App accounts are public by default, meaning that anyone can search a user’s $Cashtag. Additionally, all incoming “friend” and transaction requests are set to allow all.

Cash App’s default account settings

Cash App bot response to question about using platform without verifying age or identity

Cash App bot response to questions about whether minors need adult sponsor

Fast Facts

Most preferred peer-to-peer (P2P) money transfer app for teens (41%) and adults (52%)

Top payment platform used in commercial sex transactions 2019 - 2022 (Human Trafficking Institute, 2023).

Cash App has never submitted a report to NCMEC’s Cyber Tipline.