A Mainstream Contributor To Sexual Exploitation

Capture and Share The World's Moments

For countless children and adults that includes their worst moments. Grooming, child sexual abuse materials, sex trafficking, and many other harms continue to fester on Instagram.

Updated 10/11/24: Ahead of the House Energy and Commerce Committee’s markup of KOSA in September 2024, Meta announced new Instagram Teen Accounts with defaulted safety settings, which NCOSE asked for when we placed Meta on the 2024 Dirty Dozen List, including:

- Private Accounts: Teen accounts will automatically be set to private so that the teen must approve new followers and people who don’t follow them can’t see their content or interact with them.

- Restricted Messaging & Interactions: Teens can only be messaged, tagged, or mentioned by people they follow or are already connected to.

- Sensitive Content Restrictions: Teen accounts will automatically be set to the highest content restrictions, to limit their exposure to inappropriate content.

And teens under 16 will need parental permission to change their privacy and safety settings! You can read more about this policy change in our blog and press statement.

Updated 7/12/24: A new report from Thorn and NCMEC released in June 2024, Trends in Financial Sextortion: An Investigation of Sextortion Reports in NCMEC CyberTipline Data, analyzed more than 15 million sextortion reports to NCMEC between 2020 and 2023. The report found:

- Instagram (60%) is the #1 platform where perpetrators threatened to distribute sextortion images, followed by Facebook (33.7%).

- Instagram (81.3%) is the #1 platform where perpetrators actually distributed sextortion images out of reports that confirmed dissemination of the images on specific platforms, followed by Snapchat (16.7%) and Facebook (7.8%).

- Instagram (45.1%) is the #1 platform where perpetrators initially contacted victims for sextortion, followed by Snapchat (31.6%) and Facebook (7.1%).

- Of reports that mentioned a “secondary location” where victims were moved from one platform to another, 14% named WhatsApp, 6.6% named Instagram, and 2.9% named Facebook.

- Over the last two years, both Facebook and Instagram reports of sextortion to NCMEC have shifted from a pattern of rapidly submitting reports within days of an incident to more recently having a median delay of over a month between sextortion events and their reports.

Tasha, a single mother of two, was on the brink of granting her 14-year-old’s yearlong plea for an Instagram account. Yet, a simple Google search for ‘child safety on Instagram’ unraveled a stark and unsettling picture. The flood of alarming headlines left no room for doubt:

“It’s like dropping your kid off in a high crime area,” “Pimps glorifying sexual violence,” “algorithm promoted child sexual abuse material,” “100,000 kids sexually harassed daily,” “pedophile problem,” “a marketplace for child predators,” and “vast pedophile network.”

Her daughter’s request was firmly denied.

Disturbingly, these headlines are not only part of *Tasha’s story; they are indicative of the reality of Instagram’s catastrophic failure to protect its youngest users.

Instagram, a platform celebrated for its ability to “Capture and Share the World’s Moments,” has paradoxically become a haven for predators, facilitating grooming, child sexual abuse, sextortion, and sex trafficking with alarming notoriety.

Instagram is consistently highlighted as the most egregious Meta platform, with news articles and research reports documenting the extensive harms it facilitates: pedophile networks sharing child sex abuse material, CSAM offenders making first contact with children, exploitative algorithms promoting children to adults, sex trafficking, sextortion, and image-based sexual abuse.

Here’s a highlight reel of some of Instagram’s notable moments as a top exploiter:

- CSAM Offenders: #1 social media platform where CSAM offenders reported finding and sharing CSAM (child sexual abuse material) and #1 platform offenders used to first establish contact with a child.

- CSAM Sharing: The Stanford Internet Observatory referred to Instagram as “the most important platform for these [CSAM sharing] networks with features like recommendation algorithms and direct messaging that help connect buyers and sellers.”

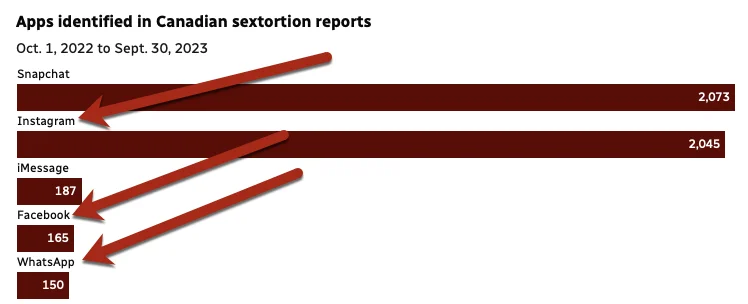

- Sextortion: #2 platform for the highest rates of sextortion (Instagram: 41%, Snapchat: 42%), with roughly 80% of reports mentioning Instagram or Snapchat (Canadian Centre for Child Protection, November 2023).

- Overall Exploiter: #1 platform where minors reported potentially harmful experiences in every one of Bark’s Annual Report categories for the second year in a row: severe sexual content, severe suicidal ideation, depression, body image concerns, severe bullying, hate speech, severe violence.

Yet, in December 2023, Meta made the devastating decision to move to end-to-end encryption, effectively blinding itself to tens of millions of cases of child sexual abuse and exploitation, image-based sexual abuse, and any other criminal activity. This is an unprecedented act of negligence considering Meta’s consistent ranking as the top exploiter. Previously, Meta submitted by far the highest number of CSAM reports annually to NCMEC’s CyberTipline. Now, nearly all of these reports will be lost.

Instead of dedicating its seemingly limitless resources to substantive changes that would truly protect children (as well as adult users), Meta chose to implement end-to-end encryption (E2EE), a decision estimated to result in the loss of 92% of CSAM reports from Facebook and 85% from Instagram.

Meta rolled out numerous safety updates before the Congressional hearing, reflecting yet another instance of reactive and partial responses to longstanding advocacy efforts. Nevertheless, these updates still fail to sufficiently tackle the significant harms perpetuated by Meta’s platforms, particularly in safeguarding the well-being of children and vulnerable adults.

For example, despite knowledge of multiple cases of 16-17-year-olds dying by suicide due to Instagram sextortion schemes, Meta refuses to provide 16-17-year-olds with the same life-saving features awarded to 13-15-year-olds—such as blocking unconnected accounts from messaging minors, and preventing all minors from seeing sexually explicit content in messages.

In a July 2020 internal chat, one Instagram employee asked, “What specifically are we doing for child grooming (something I just heard about that is happening a lot on TikTok)?” The response from another employee was, “Somewhere between zero and negligible. Child safety is an explicit non-goal this half.”

It’s clear that even four years later, Instagram’s concern for child safety is still “somewhere between zero and negligible.”

Our Requests for Improvement

We believe that Instagram is too dangerous for children and should be a 17+ app. At the very least, Instagram should make the following changes to stem exploitation:

- Implement client-side detection for CSAM for all end-to-end encrypted spaces across Meta platforms. Remove, ban, and report all offending accounts.

- Revise the definition of "teen" to encompass all minors aged 13–17 and ensure all available privacy and safety features are defaulted to the highest level and apply to all teens 13–17 across all Meta platforms. (Note: NCOSE believes that all Meta platforms have been proven so unsafe for children that they should be 17+ apps.)

- Set all minor-aged accounts’ “followers” and “following” lists to “always remain private,” and provide adults (18+) the option to keep "followers" and "following" lists private (as is possible on Facebook) to prevent sextortion and image-based sexual abuse.

- Ban and remove all deepfake pornography and nudifying profiles, ads, posts, and links.

- Establish transparent and easily accessible reporting features for child sexual abuse material (CSAM) and instances of child sexual exploitation (grooming, sextortion, etc.).

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Instagram is a top virtual destination for grooming, sextortion, sex trafficking, and more

For years, Instagram has been at the top of nearly every list outlining the most dangerous apps for teens. Reports of sextortion, sexual grooming, and sex trafficking on Instagram are documented at frequent and alarming rates:

- Instagram is the #2 platform for the highest rates of sextortion (Canadian Centre for Child Protection, November 2023).

- Sextortion training materials were found on Instagram (2024).

- Instagram is the #2 parent-reported platform for sexually explicit requests to children (Parents Together report Afraid, Uncertain, and Overwhelmed: A Survey of Parents on Online Sexual Exploitation of Children, survey of 1,000 parents, April 2023).

- Instagram use is correlated with higher rates of parent reports of children sharing sexual images of themselves – a form of child sexual abuse material (Parents Together, April 2023).

- Instagram is the #2 location where victims of sex trafficking were recruited (Human Trafficking Institute, 2022).

- Instagram is the #2 location where children were recruited in cases of online enticement (NCMEC, 2024).

Canadian Centre for Child Protection, November 2023

Reports of minors dying by suicide because of sextortion are devastatingly common. From October 2021 to March 2023, the FBI and Homeland Security Investigations received “over 13,000 reports of online financial sextortion of minors. The sextortion involved at least 12,600 victims—primarily boys—and led to at least 20 suicides.”

In the last year, law enforcement agencies have received over 7,000 reports of online sextortion of minors, resulting in over 3,000 victims who were mostly boys, with more than a dozen victims who have died by suicide. As the #2 platform for the highest rates of sextortion, just barely trailing behind Snapchat, Instagram is at the heart of this epidemic.

It is incomprehensible that Meta fails to protect 16- and 17-year-olds. Numerous families have lost their 16- or 17-year-old sons to sextortion schemes on Instagram, in situations where recent protections, granted only to teens aged 13 to 15, likely wouldn’t have offered protection.

The following conversation is based on real cases of sexual extortion on Instagram in which multiple 17-year-old boys died by suicide as a result. The loss of these 17-year-olds’ lives underscores the fact that Meta’s latest messaging updates, which are exclusively available to users aged 13 to 15, would still fail to offer life-saving protection.

Trigger warning.

~5:00pm

A 17-year-old boy, *Alex, met a teenage girl, *Macy, on Instagram and they began chatting. The conversation quickly escalated to Macy asking Alex for sexually explicit images. Unbeknownst to Alex, Macy was a sextortionist.

~8:30pm

- Alex: “Is this a scam?”

- “Macy” aka the predator: “No, no of course not.”

- Alex: *Sends sexually explicit imagery to the “teenage girl.”

- Macy: “I have screenshot all ur followers and tags can send these nudes to everyone and also send your nudes to your Family and friends Until it goes viral…All you’ve to do is to cooperate with me and I won’t expose you”

- “Are you gonna cooperate with me…Just pay me rn [right now] And I won’t expose you”

- Alex: “How much”

- Macy: “$1000”

- Alex: *Pays “Macy” $400.

- Macy: “That’s not what I asked for. Pay me more. Now.”

~10:00pm

- Alex: “I don’t have any more money.”

- Macy: “Haha. I love this game.”

- “Enjoy your miserable life while I make these pics go viral”

- Alex: “Please I only have $50 left in my Cash App. Its all my savings.”

- Macy: “Give it up then. Or else you know what happens”

~12:00am

- Alex: *Pays the $50 in his Cash App

- Macy: “Still not enough loser…These pics are going viral…Oops…too late. [upside down emoji]…I just sent them out but you’ll never guess to who…”

- Alex: “I’m going to kill myself rn…Bc of what you did to me”

- Macy: “Good…Do that fast…Or I’ll make you do it…I will make u commit suicide…I promise you I swear”

~1:30am

Alex’s parents said goodnight to him at 8:00pm and woke up to find their son gone. Only seven hours after meeting “Macy,” Alex died by suicide.

Child sexual exploitation and abuse and CSAM trading on Instagram

Last year, when NCOSE named Instagram to the 2023 Dirty Dozen List, we revealed how our researchers quickly came across dozens of accounts with high markers of likely trading or selling child sexual abuse materials. Unfortunately, accounts like these and reports of CSAM and CSAM sharing networks have only increased in the last year.

The Stanford Internet Observatory (SIO) called Instagram “the most important platform for these [CSAM sharing] networks with features like recommendation algorithms and direct messaging that help connect buyers and sellers.”

SIO’s June 2023 report revealed the identification of 405 accounts advertising “self-generated” CSAM and numerous “probable buyers.” When researchers reported these accounts to authorities, the report stated that “hundreds of new SG-CSAM accounts were created, recreated, or activated on” Instagram, and “linked to the network” by hashtags or other content.

According to the New Mexico State Attorney General, in 2023, state investigators were able to easily identify and map “hundreds of Instagram users currently advertising the sale of CSAM, with roughly 450,000 followers between them.” Further, when one of the largest accounts was removed from Instagram, in response to a search for the banned account, “Instagram recommended an alternative account, which also sells CSAM.”

However, Meta knew of these problems years before these reports were published. In fact, a September 2020 email reveals Meta’s pervasive problems with respect to CSAM and other sexually explicit content: “[W]hen you search for these terms, there are no results under the ‘hashtag’ tab, but there are endless results under the ‘Top Accounts’ and ‘Accounts’ tab, and almost all are violating.”

Evidence of child sexual abuse and exploitation on Instagram:

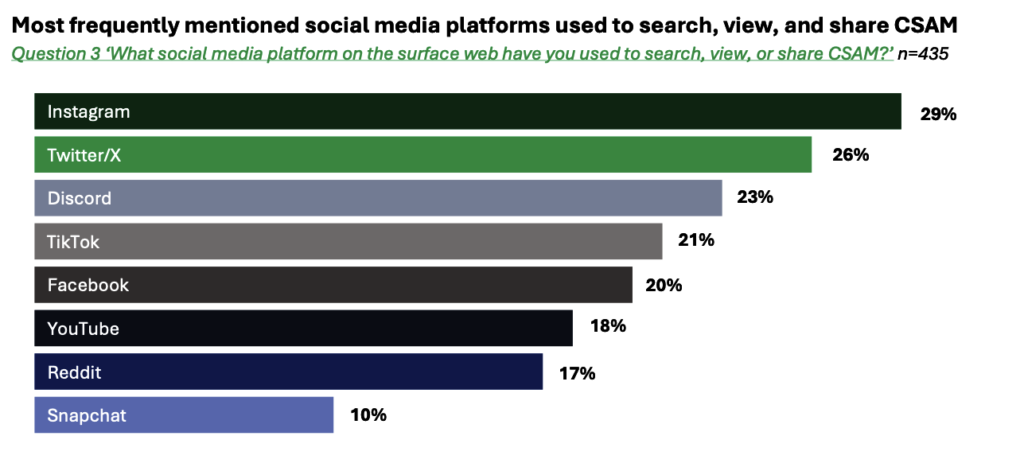

- Tech Platforms Used by Online Child Sex Abuse Offenders (Suojellaan Lapsia, Protect Children, February 2024)

- Instagram is the #1 social media platform where CSAM offenders reported finding and sharing CSAM; Facebook is in the top 5.

- Instagram is the #1 social media platform used by CSAM offenders to attempt to establish first contact with a child.

Captured from Tech Platforms Used by Online Child Sex Abuse Offenders, Suojellaan Lapsia, Protect Children, February 2024

- Facebook (9%), Instagram (8%), and Messenger (9%) are all among the top 5 platforms where minors reported having an online sexual interaction with someone they believed to be an adult.

- Meta-owned Facebook (13%), Instagram (13%), and Messenger (13%) are tied as the #2 platform where most minors reported having an online sexual experience.

- 75% of minors who have shared their own SG-CSAM use Instagram daily.

- 58% of minors who have reshared SG-CSAM of others use Instagram daily.

- 56% of minors who have been shown nonconsensually reshared SG-CSAM use Instagram daily.

Proliferation of Pedophile Networks

Instagram’s exploitative algorithms and recommendation systems connecting predatory adults and minors were widely exposed in 2023. The New Mexico Attorney General referred to Meta as the “world’s ‘single largest marketplace for pedophiles,’” and it’s easy to see why.

On Instagram, certain searches trigger a pop-up box with the warning “May contain images of child sex abuse,” along with a clarification that this material is illegal. Disturbingly, the pop-up box also contains a button labeled “view results anyway.” While it’s hard to believe, it is true. Instagram provided users with the option to view content flagged by its tools as potentially containing illegal child sex abuse imagery.

For years, NCOSE and other advocates like Collective Shout and Defend Dignity have been bringing the frequent reports of harm to Instagram’s attention, such as the rampant problem of parasitic accounts that collect photos of young children for fetishization and pedophile networks. However, a 2023 New Mexico State Attorney General lawsuit confirmed that Instagram was listening – and deliberately failed to act. In December 2019, Meta employees circulated a Sunday Times article (detailing NCOSE’s joint campaign #wakeupinstagram) that focused on Instagram’s algorithm recommending objectionable content.

- One individual in the article “specifically calls for [Instagram] to stop recommending children’s accounts to anyone. They have a case study of a parent who set up an account to showcase her daughter at gymnastics and was horrified that this was then potentially promoted to other people.”

- The email [internally] further notes the existence of Instagram users under the age of 13 and “[t]he ease with which a stranger can direct message a child on Instagram and the lack of proactive protections in place.”

Meta did not implement default messaging features preventing strangers from messaging minors for another three years.

Unfortunately, even though Instagram claimed to have improved their default messaging settings, they remain largely ineffective.

Roughly five years later, in February 2024, a New York Times investigation into parent-managed social media accounts of their gymnast and dancer daughters revealed how prevalent this issue still is on Instagram. The report detailed how parents profited from the hyper-sexualization of their daughters, and male fans frequently demanded CSAM or asked parents to engage in abusive acts on their daughters in direct messages. The report also found that moms would post their $Cashtag in their daughters’ profiles for fans to send money (Cash App is also a 2024 Dirty Dozen List target).

Meta’s internal research and insights even found their features to perpetuate such harms – and again, the company did nothing to prevent it from happening:

- Over one-quarter (28%) of minors on Instagram received message requests from adults they did not follow, indicating a significant risk of unwanted or potentially harmful contact (2020).

- Meta knew that “inappropriate interactions with children aka child grooming” was “out of control” on Instagram (2020).

- Meta’s internal research found that the feature ‘People You May Know (PYMK)’ contributed to 75% of cases involving inappropriate adult-minor contact (2021).

- One out of every eight users under the age of 16 reported experiencing unwanted sexual advances on Instagram in the last seven days (2021).

End-to-End Encryption (E2EE) Will Allow CSAM and Other Crimes to Thrive Undetected

Meta’s decision to implement end-to-end encryption was considered a devastating blow to global child protection efforts due to its potential to create an environment conducive to the proliferation of child sexual abuse material (CSAM) and online grooming. While E2EE has yet to be defaulted on Instagram Direct, “secret chats” and opt-in forms of E2EE exist on the platform.

Meta’s adoption of end-to-end encryption (E2EE), despite being the largest reporter of CSAM, submitting over 27 million reports to NCMEC in 2022, positions Meta as a protector of predators, privacy, and profit – not children. This approach endangers vulnerable populations and severely undermines Meta’s commitment to and responsibility for enhancing online child safety.

Through E2EE, messaging content is inaccessible to anyone, including Meta itself, unless a message is reported by a user. Without the ability to monitor communications for such harmful behaviors, Meta may be rolling out the welcome mat for predators to operate without fear of detection or intervention.

The move to E2EE on Instagram Direct comes despite Meta’s knowledge that users are unlikely to report content in Instagram Direct in the first place. A 2021 internal study on tracking progress of reducing “bad experiences” on Instagram found that “51% of Instagram users had a bad or harmful experience on Instagram in the last 7 days…” and that “only 1% of users reported the content or communication, and that only 2% of that user reported content is taken down. In other words, for every 10,000 users who have bad experiences on the platform, 100 report and 2 get help.”

Professor Hany Farid, developer of PhotoDNA, the CSAM detection and removal technology used by Meta and other online platforms, said that Meta could be doing more to “flag suspicious words and phrases . . . – including coded language around grooming . . . This is, fundamentally, not a technological problem, but one of corporate priorities.” NCOSE couldn’t agree more.

For example, Meta could implement content detection technology that would work in E2EE areas of the platform by only scanning content at the device level – placing content detection mechanisms directly on users’ devices rather than on the platforms’ servers. By implementing E2EE across its platforms, Meta effectively closes the door on proactive measures to identify and prevent the circulation of CSAM, as well as the grooming of minors by predators.

In 2022, Instagram floated the idea of implementing a nudity detector within Instagram Direct that would scan images on an individual’s device, using Apple’s iOS detection technology. Meta stated that they would never have access to the image or the messaging contents: “This technology doesn’t allow Meta to see anyone’s private messages, nor are they shared with us or anyone else. We’re working closely with experts to ensure these new features preserve people’s privacy while giving them control over the messages they receive.”

Seems like common sense, no? It’s baffling that Meta failed to follow through on such a crucial feature, especially considering their official move to default E2EE across all Meta platforms.

Refusing to implement viable detection technology, despite its potential to safeguard children from exploitation and abuse, is nothing short of negligent.

Meta made the move to E2EE despite internal research and communications showing that…

- Over one-quarter (28%) of minors on Instagram received message requests from adults they did not follow, indicating a significant risk of unwanted or potentially harmful contact (2020).

- In a July 2020 internal chat, one employee asked, “What specifically are we doing for child grooming (something I just heard about that is happening a lot on TikTok)?” The response from another employee was, “Somewhere between zero and negligible. Child safety is an explicit non-goal this half” (2020).

- Meta’s internal research found that the feature ‘People You May Know (PYMK)’ contributed to 75% of cases involving inappropriate adult-minor contact (2021).

- The majority of Instagram users under the age of 13 lie about their age to gain access to the platform. Internal research acknowledged the danger of relying on a user’s stated age: “[s]tated age only identifies 47% of minors on [Instagram]…” Moreover, “99% of disabled groomers do not state their age . . . The lack of stated age is strongly correlated to IIC [inappropriate interactions with children] violators.” (2021).

- One out of every eight users under the age of 16 reported experiencing unwanted sexual advances on Instagram in the last seven days (2021).

- Roughly 100,000 children per day experienced sexual harassment, such as receiving pictures of adult genitalia, on Meta platforms (2021).

- Sexually explicit chats are 38x higher on Instagram than Facebook.

- Disappearing messages (secret chats that are encrypted) were listed as one of the “immediate product vulnerabilities” that could harm children, because of the difficulty reporting disappearing videos and confirmation that safeguards available on Facebook were not always present on Instagram. Instagram Direct and Facebook Messenger STILL do not have a clear-cut option to report CSAM or unwanted sexual messages (2020).

Image-based sexual abuse (IBSA) and “AI Pornography” aka Synthetic Sexually Explicit Material (SSEM) on Instagram

Children are not the only ones at risk on Instagram’s platform. Instagram is increasingly being called out as a hub for image-based sexual abuse – the nonconsensual capture, posting, or sharing of sexually explicit images. In 2022, the Center for Countering Digital Hate found that Instagram failed to act on 90% of abuse sent via DM to high-profile women.

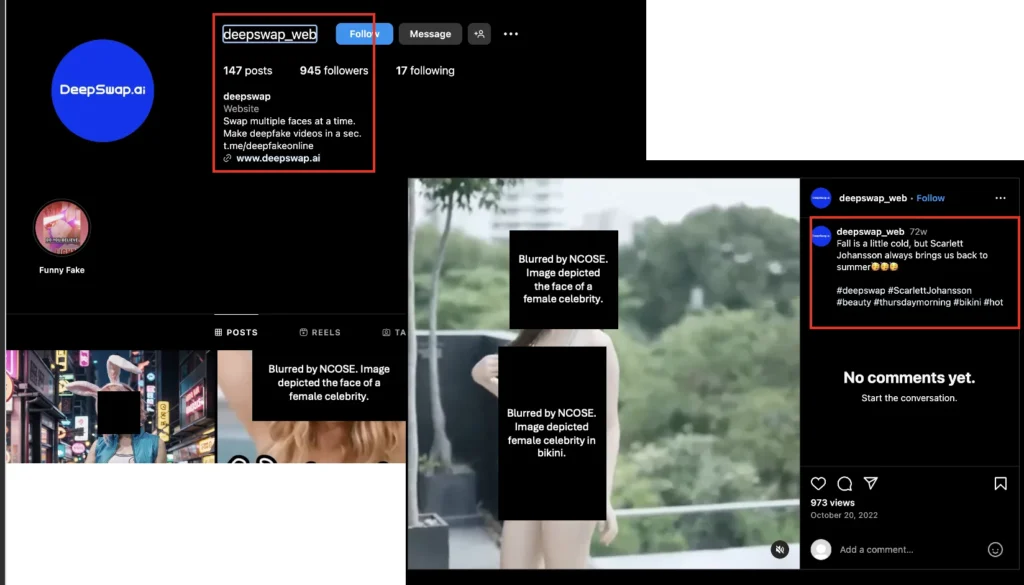

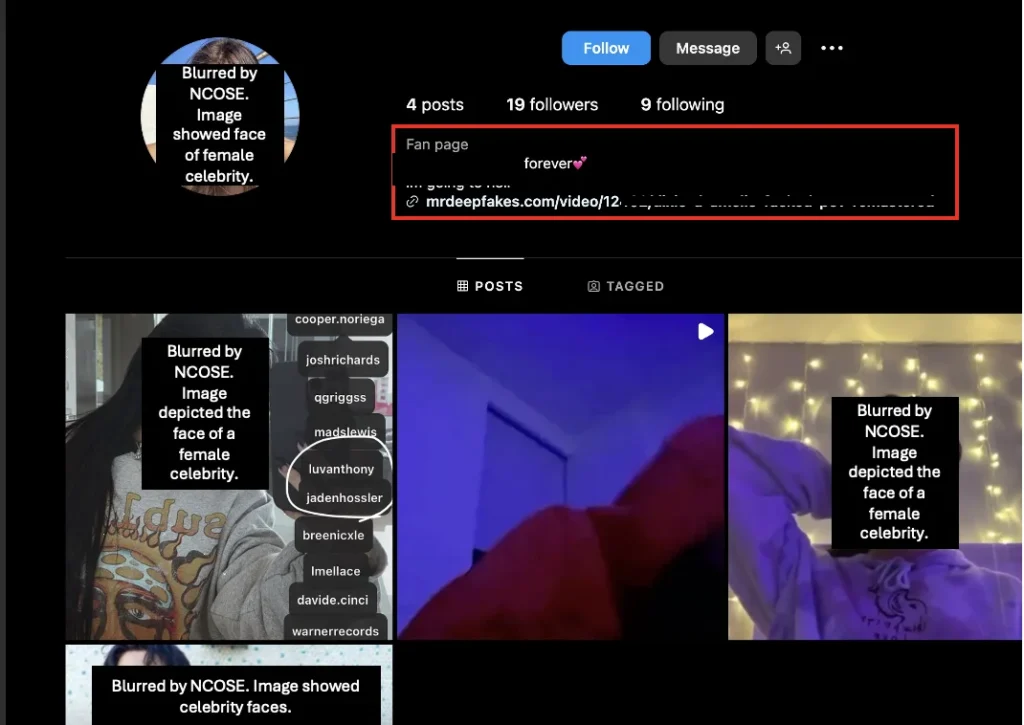

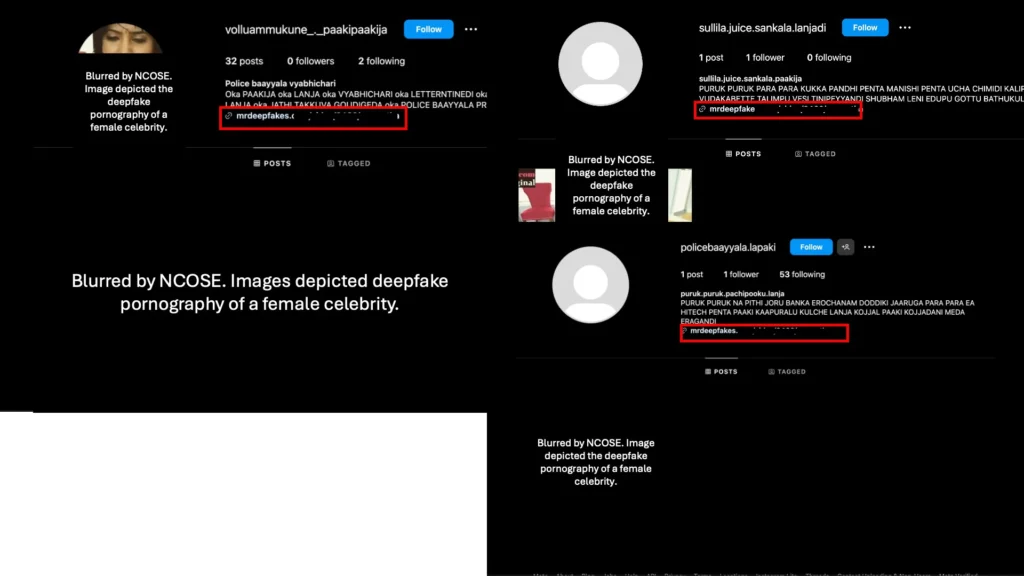

More recently, a March 2024 NBC article revealed that Facebook and Instagram had allowed deepfake nude ads depicting popular celebrities, like Jenna Ortega, when they were minors to run on their platforms. When NCOSE researchers conducted searches for nudifying apps, they found numerous examples of profiles promoting nudifying apps such as DeepSwap.ai. DeepSwap.ai is also available on the Apple App Store (Apple is a 2024 Dirty Dozen List target).

NCOSE researchers also found profiles containing deepfake pornography of famous female celebrities as well as direct links to Mr.Deepfakes – the most notorious deepfake pornography site in the United States.

Fast Facts

#1 social media platform where CSAM offenders reported finding and sharing CSAM, and through which the offenders first established contact with a child

#2 platform where minors have had a sexual experience with an adult

Instagram and Facebook ran deepfake pornography ads of 16-year-old celebrities in March 2024, just three months after Meta disbanded its Responsible AI team

Recommended Reading

Instagram Connects Vast Pedophile Network

More Resources

- Resource for Teens to remove their sexually explicit content from Instagram: TakeItDown.NCMEC.org

- Resource for Adults to remove image-based sexual abuse from Instagram: StopNCII.org

- Collective Shout: We reported 100 pieces of child exploitation content to Instagram – They removed just three

- Protect Young Eyes: Instagram Adds New Parental Controls. We’re Underwhelmed.

- The Hill (article by Lina Nealon) Congress must force Big Tech to protect children from online predators

- 20 Years of Facebook: A Danger to Society