A Mainstream Contributor To Sexual Exploitation

“Twitter was asked to take [child sexual abuse material] down and Twitter said ‘we’ve looked at it and we’re not taking it down.’” - Judge at the United States Court of Appeals for the Ninth Circuit

Updated 6/22/2023: Twitter claims to have improved its child sexual abuse material (CSAM) detection system after a report by the Stanford Internet Observatory exposed how dozens of hashed images of CSAM on Twitter failed to be identified, removed, and prevented from being uploaded. However, Twitter has yet to confirm whether it is still using PhotoDNA or any other type of CSAM detection technology. NCOSE researchers will be carefully monitoring the situation on this app. NCOSE kept Twitter on the Dirty Dozen List this year because there were clear indicators that CSAM and child exploitation in general had been increasing on Twitter since Elon Musk took over, despite his promises that tackling CSAM was priority #1.

Take Action

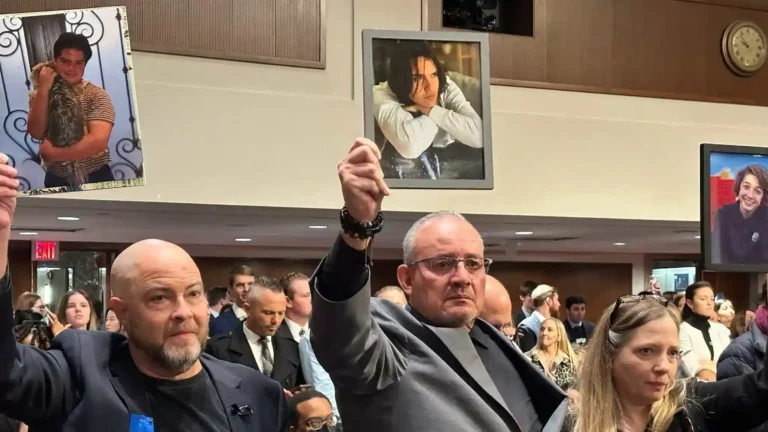

When John Doe was thirteen, a sex trafficker posing as a sixteen-year-old girl groomed John and his friend into sending sexually explicit photos and videos of themselves (i.e., child sexual abuse material, or “child pornography”). A compilation of these videos was subsequently posted to Twitter. There, it gathered 167,000 views and was retweeted more than 2,000 times. It was also passed around by people in John’s school, eventually driving John to contemplate ending his life.

John and his mother contacted Twitter multiple times, begging the company to take the child sexual abuse material down and even sending photos of John’s ID to prove he was a minor. They were met with this chilling response:

“We’ve reviewed the content, and didn’t find a violation of our policies, so no action will be taken at this time.”

The NCOSE Law Center and The Haba Law Firm are representing John Doe and his friend in a lawsuit against Twitter. The case, John Doe #1 and John Doe #2 v. Twitter, argues that, through the conduct described above, the company violated numerous laws by knowingly benefiting from the sex trafficking of the plaintiffs, possessing child sexual abuse material (“CSAM”), knowingly distributing CSAM, and committing other offenses.

Learn more below about this and other cases of exploitation on Twitter. Survivors deserve justice!

Will Elon Musk Make Good on His Promise to Prioritize Addressing Child Sexual Exploitation?

When Elon Musk purchased Twitter in 2022, he declared that “removing child exploitation is priority #1.” While this was a heartening statement, Musk has since taken many alarming actions that give reason for extreme skepticism. In fact, most experts agree that Musk’s actions since purchasing Twitter have so far served to make the crime of child sexual exploitation worse.

A devastating case of a boy who was recently kidnapped and sexually assaulted multiple times after being groomed “in public view” on Twitter would indicate the experts are sadly right. The boy’s mother says that “Twitter and law enforcement failed to effectively intervene despite an abundance of information posted online. They’re demanding answers.”

Musk cut the Twitter Inc. team dedicated to handling child sexual exploitation to half its former size. He also stopped paying Thorn, the child protection agency which provided the software Twitter relies on to detect child sexual abuse material, and stopped working with Thorn to improve this technology.

The National Center for Missing and Exploited Children (NCMEC) also reported that, after Musk took over the company, Twitter declined to report the hundreds of thousands of accounts it suspended for claiming to sell CSAM without directly posting it. A Twitter spokesperson stated that the company did this because such accounts did not meet the threshold for “high confidence that the person is knowingly transmitting” CSAM. However, NCMEC disagrees with that interpretation of the rules and asserts that tech companies are also legally required to report users who only claim to sell or solicit CSAM.

In the John Doe #1 and John Doe #2 v. Twitter case, the company continues to claim 100% legal immunity for its actions in facilitating the child sexual exploitation of John Doe and his friend. Is this Musk’s stance? How will he proceed to make good on his promise to address child sexual exploitation on Twitter as “priority #1”?

NCOSE is watching.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Child-, Rape-, and Incest-Themed Pornography

CSAM Trading

Child Sexual Abuse Material Trading

NCOSE Letter to Twitter Executives in 2018

Fast Facts

Musk cut off Thorn, the child protection agency which provided the software Twitter relies on to detect child sexual abuse material.

Investigations have shown that Twitter users looking to sell and trade CSAM are still easily found.

Twitter is playing “kick the can” on publishing its Transparency Report, which used to be published twice yearly.

Twitter is the online platform where young people were most likely to have seen pornography.

More Actions You Can Take Today

Lawsuit Against Twitter

Updates

Stay up to date with the latest news and additional resources

A 13-year-old boy was groomed publicly on Twitter and kidnapped, despite numerous chances to stop it

Recently, the 9th Circuit Court of Appeals affirmed the dismissal of child-pornography and sex-trafficking claims brought by the NCOSE Law Center against Twitter, on behalf of survivors who were exploited on the platform as minors. The court ruled that Section 230 of the Communications Decency Act (CDA 230) gives civil immunity to technology companies for child sexual abuse material—even though this was never Congress’s intention in passing CDA 230.

This decision was made in spite of the fact that Twitter confirmed in writing to the survivors that it reviewed the child sexual abuse material and had decided to leave it in place on the platform. This means Twitter knowingly possessed and broadly distributed the illegal material.

This ruling, while not the final outcome, highlights the urgent need to pass the EARN IT Act. The EARN IT Act clarifies that CDA 230 does not give technology companies immunity for knowingly facilitating the distribution of child sexual abuse material. For too long, CDA 230 has been misinterpreted by some courts, absolving tech companies of any accountability for their actions and robbing survivors of a path to justice.

Congress must send a clear, unambiguous message that CDA 230 does not give a free pass for child sexual abuse!

This case alleges that Twitter violated the federal sex trafficking statute, 18 U.S.C. §§ 1591, et seq., failed to report child sexual abuse material, 18 U.S.C. § 2258A, and received and distributed child pornography, 18 U.S.C. § 2252A. Read the full complaint here.