A Mainstream Contributor To Sexual Exploitation

Apple’s record is rotten when it comes to child protection

This Big Tech titan refuses to detect child sex abuse material, hosts dangerous apps with deceptive age ratings and descriptions, and won’t default safety features for teens.

Updated 6/5/24: A recent Wall Street Journal article reported on a bug in Apple’s parental controls that allowed a child’s device to easily circumvent web restrictions and access pornography, violent images, and drug content. Despite being reported to Apple multiple times over the past three years, the bug was never fixed, leading some to feel like “the system meant to protect Apple’s youngest users…[is] an afterthought.” After being contacted by the reporter, Apple said they would fix the bug in the next software update.

Apple has more resources, power, and influence than many nations. Its products are unparalleled in quality and popularity, its engineers among the best in the world. A whopping 87% of US teens own iPhones, making Apple – in effect – their gatekeeper to the online world.

Therefore, Apple has all the more responsibility to be the global leader in ensuring technology not only affords privacy but also protects the people using their products, especially youth.

Yet at a time when child exploitation is at an all-time high and accelerating at alarming rates, Apple has not only failed to make common-sense changes to further safeguard their young users but has arguably set back global efforts to end sexual exploitation.

One of the greatest tragedies for survivors of child sex abuse, for families who have lost children due to that trauma, and for overall efforts to end this crime, was Apple’s decision to abandon plans to detect child sex abuse material on iCloud. Apple made this decision despite the fact that 90% of Americans assert Apple has a responsibility to detect this illegal content.

While Apple did expand protections for children aged 12 and under this year, blurring sexually explicit content and filtering out pornography sites, it inexplicably refuses to turn on the same safeguards for teenagers. This choice can only be described as negligent when sextortion of teens is proliferating at such alarming rates that the FBI and other child safety groups have been issuing repeated warnings about the phenomenon.

Apple’s recent policy choices have created dangerous precedents that may be used by other tech companies as justification to also turn a blind eye to CSAM and to deny teens greater protections, leaving countless young people at risk of child sex abuse, sextortion, grooming, and countless other crimes and harms.

Since the App Store was placed on the 2023 Dirty Dozen List, there have been no improvements. In fact, it may have gotten worse as our researchers have recently found apps for creating deepfake pornography (some rated 4+!), and the App Store continues to host dangerous apps like Wizz that have been proven to be used largely for the sextortion of minors. App age ratings and descriptions remain extremely deceptive, and despite knowing the age of account holders, Apple still suggests and promotes sex-themed 17+ apps to children (including kink, hookup, adult dating, and “chatroulette” apps that pair random users).

For how many more years must families, policymakers, safety advocates, and survivors beg Apple to use its near-limitless resources to act ethically and potentially prevent egregious harm and untold trauma?

An Ecosystem of Exploitation: Artificial Intelligence and Sexual Exploitation

This is a composite story, based on common survivor experiences. Real names and certain details have been changed to protect individual identities.

I once heard it said, “Trust is given, not earned. Distrust is earned.”

I don’t know if that saying is true or not. But the fact is, it doesn’t matter. Not for me, anyway. Because whether or not trust is given or earned, it’s no longer possible for me to trust anyone ever again.

At first, I told myself it was just a few anomalous creeps. An anomalous creep designed a “nudifying” technology that could strip the clothing off of pictures of women. An anomalous creep used this technology to make sexually explicit images of me, without my consent. Anomalous creeps shared, posted, downloaded, commented on the images… The people who doxxed me, who sent me messages harassing and extorting me, who told me I was a worthless sl*t who should kill herself—they were all just anomalous creeps.

Most people aren’t like that, I told myself. Most people would never participate in the sexual violation of a woman. Most people are good.

But I was wrong.

Because the people who had a hand in my abuse weren’t just anomalous creeps hiding out in the dark web. They were businessmen. Businesswomen. People at the front and center of respectable society.

In short: they were the executives of powerful, mainstream corporations. A lot of mainstream corporations.

The “nudifying” bot that was used to create naked images of me? It was made with code hosted on GitHub, a platform owned by Microsoft, the world’s richest company. Ads and profiles for the nudifying bot were on Facebook, Instagram, Reddit, Discord, Telegram, and LinkedIn. LinkedIn went so far as to host lists ranking different nudifying bots against each other. The bot was embedded as an independent website using services from Cloudflare, and on platforms like Telegram and Discord. And all these apps which facilitated my abuse are hosted on Apple’s App Store.

When the people who hold the most power in our society actively participated in and enabled my sexual violation … is it any wonder I can’t feel safe anywhere?

Make no mistake. My distrust has been earned. Decisively, irreparably earned.

Our Requests for Improvement

- Detect CSAM on iCloud and develop "frictionless" user reporting mechanisms throughout Apple products and features (especially iMessage) for Apple's review (NCOSE is aligned with Heat Initiative in these requests)

- Turn on Communication Safety for teens by default and provide warnings and resources prior to sending or viewing sexually explicit content

- Block all sexually explicit content for children 12 and under on Apple's products

- Reform the App Store to ensure appropriate and accurate app age ratings and descriptions

- Remove exploitative and dangerous apps from the App Store and cease to advertise inappropriate apps to children

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

Child Sex Abuse Material on Apple iCloud

Note: The National Center on Sexual Exploitation supports Heat Initiative’s targeted campaign against Apple for its refusal to detect child sex abuse material on iCloud. Please be sure to visit their website and to support their campaign.

One of the greatest tragedies for survivors of child sex abuse, for families who have lost children due to that trauma, and for overall efforts to end sexual exploitation, was Apple’s decision to abandon plans to detect child sex abuse material on iCloud.

From a product liability perspective, it’s perplexing that Apple wouldn’t take more precautions given that 87% of teens own an iPhone and that iPhones routinely dominate the top 10 best-selling phones globally every year, making it highly likely that iPhones are disproportionately used to record, share, and obtain child sex abuse material. While Heat Initiative identified 94 publicly available cases of CSAM being found on Apple products, with images and videos depicting victims from infants and toddlers to teens, the true number is likely much higher given the popularity of iPhones.

Viewed through the lens of prudent business practice, this decision appears illogical when 90% of Americans assert Apple has a responsibility to detect CSAM.

And most importantly, from an ethical and human rights perspective, it’s unconscionable that Apple would ignore the pleas of survivors of child sex abuse, their families, and dozens of child safety experts and psychologists, and instead bend to the backlash of privacy absolutists who fight even the most rudimentary child protection measures at all costs.

Apple has essentially abandoned child sex abuse survivors who have endured unimaginable trauma through the abuse itself. They also suffer every moment that the crime scene content circulates on Apple products. They are re-abused each time those images and videos are viewed again. Children who’ve suffered this crime must live for the rest of their lives with the knowledge their content is freely traded, used to groom other children, and recruit more offenders. And even if there has not been physical, in-person abuse of a minor, having CSAM content of themselves in the digital world (even if they are the ones who produced and shared it or – as is increasingly the case – their image is used to generate CSAM content through AI), is still deeply traumatic and dangerous, even leading to grooming, sex trafficking, sextortion, or death by suicide due to the mental and emotional distress.

Apple elevates the protection of predators’ privacy concerns over victim-survivors’ rights to privacy and pushes a false narrative popular with Big Tech that individual privacy is incompatible with child protection.

When Apple announced its decision not to detect CSAM on iCloud, the company also noted that expanding Communication Safety was how it would further child protection. While Communication Safety is a necessary tool to ideally prevent children 12 and under from creating new, harmful content, it doesn’t help teens (more on that below), nor does it address the issue of adult perpetrators who are sharing and storing known CSAM on iCloud. Current and potential predators will not be receiving prevention warnings and would likely bypass them even if they did. So, these tools, while important, do nothing to stop perpetrators from perpetuating this terrible crime on and through iCloud. Prevention measures must not be conflated with actual detection and reporting of illegal child sex abuse content.

The National Center on Sexual Exploitation stands with Heat Initiative and the dozens of other child safety organizations and leaders calling on Apple to reverse the disastrous decision not to detect CSAM.

Leaving Teens at Risk of Sexual Exploitation

Apple refuses to default nudity blurring tools and pornography filters for teens.

After NCOSE advocated for this change for more than a year, Apple finally expanded and defaulted Communication Safety for users 12 and under and blocked pornography websites for that age group as well. (Why wasn’t this implemented years ago?) While we did applaud this move publicly, in truth it was a great disappointment that it took so long for Apple to implement such a common-sense feature when dating app Bumble has been defaulting nudity blurring for years for their adult users. We assert that access to sexually explicit content should be completely blocked – with no option to view or send – for all minors, but certainly for children 12 and under. And we remain discouraged that Apple walked back the decision to alert parents of kids 12 and under if nude images were sent or viewed. We were told that Apple was concerned that children who may be questioning their sexuality may be “outed” to unsupportive parents. While this is a stated concern, Apple could have chosen not to share the context of the content with parents – but rather alert them to a potentially life-altering action by their young child and provide resources and guidance on how to navigate such a situation. One can also make the strong argument that Apple is actively undermining parents’ fundamental responsibility to protect their children.

What we utterly fail to comprehend is why Apple refuses to default Communication Safety for teenagers or to offer warning messages – especially when Apple is not detecting CSAM on iCloud and noted that Communication Safety expansion was its answer to child protection.

It appears that Apple does not want to protect teenagers from potentially creating and receiving CSAM and other sexually explicit content (including of and from adults). Perhaps Apple doesn’t consider 13- to 17-year-olds “kids” and doesn’t believe they deserve the same basic protections in the digital world that they rightly receive offline? If this is Apple’s position, it is unconscionable and in conflict with the state and federal laws that define when childhood ends.

When pressed on this decision, Apple told us that they heard from experts that sexting is “healthy” for teens. Apple should not default to such fringe opinions which will always exist. Perhaps Apple is not aware of the sobering statistics of teenagers falling prey to grooming, sextortion, sex trafficking, deepfake pornography creation and sharing, severe bullying due to shared sexually explicit content, and a host of other crimes and harms which often start with sexting. Sextortion of teens is proliferating at such alarming rates that the FBI and other child safety groups have been issuing repeated warnings about the phenomenon. Through the simple action of turning on Communication Safety for teens, Apple could play a major role in preventing and intervening in potentially life-altering situations and educating teens on the many risks (and often illegality) of sharing sexually explicit content and providing resources if they are on the receiving end of such content.

The damaging effects to teens’ development when viewing hardcore pornography have been well-researched: the evidence of harm is irrefutable. Filtering out pornography sites and apps like X and Reddit that allow pornography is a simple step Apple could take to further safeguard teens.

Dangerous and Deceptive App Store Practices and Policies

Since Apple has decided neither to detect CSAM on iCloud nor to default nudity blurring features for teens, one would think that the very least Apple would do is provide families with accurate information about the apps available, outline the potential risks, and ensure that minors don’t have access to or advertisements for highly risky and age-inappropriate apps. To the contrary, Apple further endangers children by hosting dangerous apps on the App Store and failing to provide accurate age ratings and descriptions of apps.

With almost 90% of US teens owning an iPhone, Apple can rightly be called a primary “gatekeeper” to the online world. Caregivers trust and rely on Apple’s App Store age ratings and descriptions to determine what apps are safe and appropriate for their children to use. App age ratings also “trigger” several aspects of Apple’s parental controls (called Screen Time), blocking entire apps or content based on the designated age.

Yet at a time when child safety experts and mental health professionals – including the United States Surgeon General – are sounding the alarm that our kids are in crisis due in large part to social media, Apple’s app descriptions remain vague, hidden, and inconsistent: further jeopardizing our already-at-risk children.

Documented dangers on apps such as risky features, exposure to adult strangers (including predators), harmful content, illegal drug activity, concerns about healthy child development, easy access to explicit content, and most recently, an explosion of financial sextortion are not included in the current app descriptions. Even the social media and gaming apps that have been exposed as particularly rife with predatory activity, sexual interactions, and pornographic content (videos, images, language) are labeled 12+ for “infrequent or mild mature or suggestive themes” and “infrequent mild language.” US federal law requires children to be at least 13 years old to use social media and games not specifically designed for youth.

Furthermore, despite knowing the age of the account holder (based on Apple ID), the Apple App Store suggests and promotes sex-themed 17+ apps to children (including kink, hookup, adult dating, and “chatroulette” apps that pair random users), which they can download by clicking a box noting they are 17+. Apple does not enforce its own Developer Guidelines, which state that ads must be appropriate for the app’s age rating, exposing children to mature in-app advertisements that reference gambling, drugs, and sexual role-play for apps rated 17+, even when the app is rated 9+ or 12+. And Apple lacks a system to report apps that fail to adequately explain the types of content a user might experience.

NCOSE placed the App Store on the 2023 Dirty Dozen List for the reasons noted above. Yet nothing has improved from May 2023 – March 2024. In fact, more evidence of App Store negligence has come to the fore.

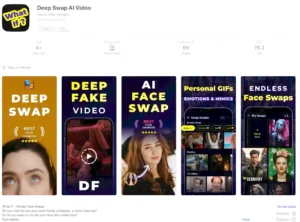

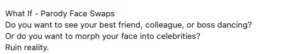

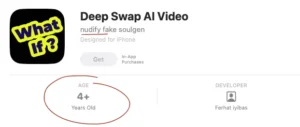

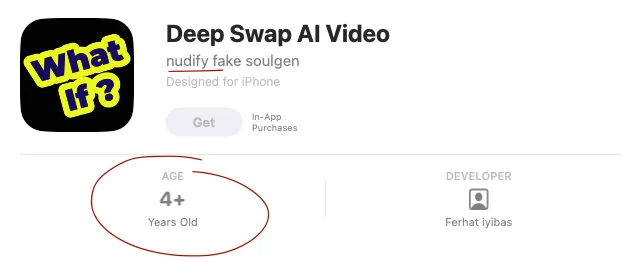

NCOSE has found that Apple hosts multiple “nudifying apps” – apps used to create deepfake pornography without the victim’s knowledge. Some of these apps are even rated age 4+. Here is just one example: https://apps.apple.com/us/app/deep-swap-ai-video/id6451139277

Known deepfake pornography apps DeepSwap, SoulGen, and Picso are also available on the Apple App Store. Although their app descriptions do not promote their tools for creating nonconsensual sexually explicit images, their advertisements on Facebook and elsewhere highlight this exact capability:

Everyone should be concerned about the rapid metastasis of “nudifying” technology, which disproportionately affects children and women. Stories about nudify apps that are being weaponized by teen boys against their female classmates are growing more common. This is child sex abuse content and image-based sexual abuse content – yet these tools are on the App Store, and our researchers found 10 of these apps in less than one minute. Why is Apple not devoting its immense resources to ensure apps like this do not make it to the App Store? Who is reviewing these apps and deciding that a 4+ rating is appropriate?

Apple also inexplicably reinstated Wizz to the App Store less than two weeks after removing it when NCOSE alerted Apple to the rampant sextortion and extensive pornography that multiple, reputable entities uncovered on the app. The Network Contagion Research Institute found it was the #3 app for sextortion and the fastest-growing social media platform for sextortion. It seemed that all it took for this exploitative app to get back on the App Store was a name change and some updated, insufficient policies. As of March 12, 2024, Google has not reinstated this app to the Play store.

Apple also hosts Telegram, which multiple law enforcement agencies and cyber security specialists are calling the “new dark web.” The extent and gravity of the criminal activity on this platform are staggering, and Telegram must be removed from the App Store. Telegram is also on this year’s Dirty Dozen List: https://endsexualexploitation.org/telegram.

In addition to hosting apps built for exploitation, Apple’s descriptions of other, more mainstream, popular apps are grossly insufficient and deceptive: so much so in fact that famed tech expert and creator of PhotoDNA (a tool used to identify and “hash” or “fingerprint” known CSAM as a way for tech companies to prevent or remove this illegal content), Hany Farid, has started the App Danger Project to elevate customer reviews of apps on Apple App Store and Google Play warning of child sex abuse and grooming – something Apple should be doing.

Apple does not adequately alert families and children to what and to whom they may be exposed online.

Using just Snapchat as an example, child safety organization Thorn’s most recent survey found that 21% of 9-17-year-old users of Snapchat had a sexual interaction on the platform, and 14% had one with someone they believed to be an adult. That’s roughly 1 out of 5 kids having a sexual interaction. Yet Apple rates Snap 12+ (despite it being a 13+ platform) and describes it thus:

Interactions with adults – let alone sexual interactions – are not even listed as a risk. This is gross negligence by Apple for an app that has been noted by experts as the top app for sextortion alone. Snap’s own research has found that two-thirds of Gen Z have been targeted for sextortion!

Perhaps not as dangerous as sexual interactions with adults and other minors, but still potentially harmful, is the content kids find on Snap’s more public sections such as Discover.

A few weeks ago, it took less than 10 minutes on a new account set to age 13 for one of our researchers to organically find the following videos and images in Snapchat’s Discover section (we’d be happy to provide Apple with screenshots):

- “What women want in bed” with extremely graphic language, suggestions to “take control and grab us,” and alcohol promotion to “set the mood”

- A video of a woman pole dancing with the heading “My New Job”

- A teen girl grabbing a teen boy’s private area while in the bathroom

20 more minutes led our researcher to:

- “Kiss or slap” a video series where young women in thongs ask men and other women to either kiss them or slap them (usually on the behind)

- Girls in dresses removing their underwear

- Clothed couples simulating sex on beds

- Teenage-looking girls doing jello shots

- Cartoons humping each other

And this is after Snapchat promised NCOSE and publicly shared that they made major improvements to rid Discover of inappropriate, sexualized content.

“Infrequent/mild sexual content”? We vehemently disagree with this description.

We could provide countless examples of dangerous interactions and harmful content not only for Snapchat but for other 4+ and 12+ App Store-rated apps such as Instagram, TikTok, Roblox, Spotify, etc. We have done so and have offered again to do so, but Apple has refused to meet with us since we put the App Store on the Dirty Dozen List after two years of advocacy pleading for simple, common-sense changes.

NCOSE researchers have also found countless examples of inappropriate age ratings for apps. For example, Cash App, known for sex trafficking, grooming, and CSAM, is rated 4+, and the top 10 search results for ‘VPN’ in the App Store were rated 4+ (including IPVanish, which Pornhub now uses after its VPNhub was removed from the App Store in December: coincidentally only a few weeks after NCOSE’s CEO highlighted this fact at a Congressional briefing).

Inappropriate ads targeted at children are also a long-standing problem that countless advocates have raised with Apple over the years, and it has not been fixed. Ads for 12+ apps like TikTok and for 17+ apps (including kink, chatroulette, hook-up, dating, and gambling apps) are being directly advertised to kids 12 and under and show up as suggested apps. The same applies to users 12+. When a NCOSE researcher searched “Snapchat” they received app recommendations such as “Oops: One Night Stand.” Clearly Apple’s Developer Guidelines, which state that ads must be appropriate for the app’s age rating (section 1.3 Kids Category), are not being enforced.

And why are apps like the one below being advertised to anyone on the App Store?

Apple has immense influence on apps – all of which resist removal from the App Store, as noted in this anecdote from an unredacted lawsuit against Meta in which an Apple employee’s child was solicited by a predator. The internal Meta document notes that a Meta employee raised concerns that the company’s insufficient child protections could put Facebook at risk of penalization by Apple: “This is the kind of thing that pisses Apple off to the extent of threatening to remove us from the App Store.”

In addition to NCOSE and ally Protect Young Eyes (who have been calling on Apple to fix app ratings since 2019), other leading child safety organizations, such as 5Rights and Canadian Centre for Child Protection, and several US state attorneys general have also called out the App Store to fix app ratings. Apple has ignored us all.

US Attorneys General Demanding Apple Change TikTok Age Rating from 12+ to 17+

Fast Facts

87% of US teens own an iPhone; 88% expect an iPhone to be their next phone

90% of Americans say Apple has a responsibility to identify, remove, and report child sexual abuse images and videos

App Age Ratings are a critical “trigger” for Apple’s parental controls (Screen Time); the ability for children to download or use certain apps or view content is often based on the app age rating

The App Store promotes and suggests sex-themed 17+ apps to children and teen accounts that minors are able to download by clicking a pop-up box affirming they are of age – despite Apple knowing their birthday on the account

The App Store hosts “Nudify” apps that pornify pictures and “Chatroulette-style” apps that pair users with strangers and are known to be swamped with sexual predators