The Problem

Updated 3/12/24: Thousands of women, including Twitch streamers and gamers, have filed copyright complaints to remove deepfake pornography videos from Google’s search results. A recent Wired article noted over 13,000 copyright complaints covering almost 30,000 URLs from a dozen popular deepfake websites. NCOSE calls on Google to do more to combat the spread of deepfake pornography by removing specific websites from search results entirely. We have been asking Google to do this since 2021, and it would be similar to what Google did with ‘slander sites’ that same year.

Updated 9/19/2023: Google took a monumental step forward by automatically blurring sexually explicit images in Google Images search results! Considering that Google Image searches make up 62.6% of all daily Google Searches (roughly 5.3 billion Google Image searches per day), adults and minors will be protected from accidental pornography exposure like never before! Google also took positive steps to improve and simplify their process for reporting and removing image-based sexual abuse. These are the latest wins in a long series of Google policy changes, spurred by NCOSE’s advocacy since 2013.

Google also formally thanked us for our contributions in influencing their safety improvements, stating: “Google is proud to implement improved protections to help people control their personal explicit images and their online experience. We appreciate the feedback from survivors and subject matter experts like the National Center on Sexual Exploitation that help improve practices around online safety.”*

Read our blog HERE for more information about these recent changes

Google Search: A Complicated Case of Corporate Influence

Google (including its parent company Alphabet and related subsidiary companies) is a vast corporate empire, and many working within it care deeply about fighting sexual abuse and exploitation. Yet, at the same time, we know that exploitation still occurs through Google/Alphabet’s many tools, often due to blind spots in policy or practice that—if fixed—could prevent countless abuses worldwide.

Examples of Google Combating Sexual Exploitation:

Over the years, Google has taken important steps forward to combat sexual exploitation, often in response to NCOSE advocacy. These include improving Chromebook safety for students, establishing policies to remove apps and ads promoting “compensated sexual relationships” (i.e., sugar dating) from Google Play, ceasing pornographic Google Ads, expanding WiFi filtering tools, and improving the visibility of the SafeSearch filtering option in Google Images.

Google staff have also met with survivors of sex trafficking to learn how Google’s products affect survivors’ daily lives, which is not something every corporation agrees to do.

We thank and applaud Google for these many improvements.

So why are they on the Dirty Dozen List again?

With great power comes great responsibility.

Given Google’s vast impact and abundant resources, it is necessary to raise awareness when poorly constructed policies and practices cause real-world harm to survivors and facilitate sexual abuse and exploitation.

How Google Search Currently Fails Survivors:

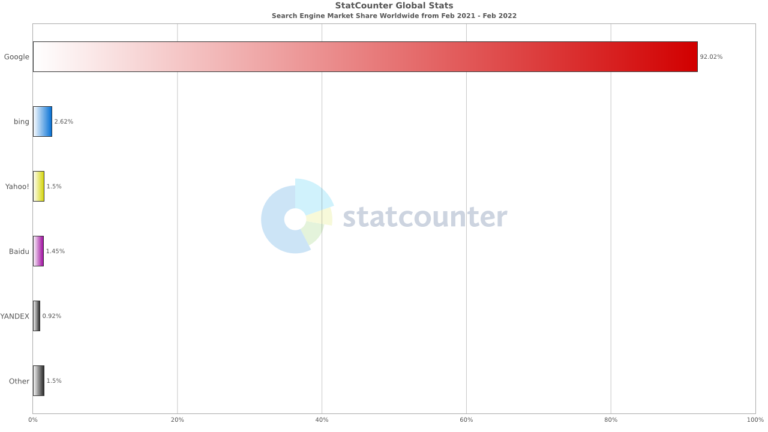

Google Search provides access to global news and entertainment sources, so it is unsurprising that as of February 2022 it held the leading share (92%) of the global search engine market.

Unfortunately, Google Search also facilitates access to real and depicted content of sexual abuse, including graphic videos of sex trafficking, child sexual abuse, and nonconsensually recorded or shared content, as well as content with illegal and socially damaging themes.

NCOSE researchers found videos that appear to depict survivors of the criminally indicted GirlsDoPorn sex trafficking ring within the very first related Google Search result. Some of these videos had titles listing the survivors’ full names.

Furthermore, it is well known that the pornography industry as a whole routinely fails to effectively verify the age or consent of performers. Beyond that, several pornography sites have come under particular scrutiny, through recent government investigations, successful lawsuits, survivor testimony, and investigative reporting, for hosting child sexual abuse material and sex trafficking (i.e., criminal) content.

It is therefore particularly concerning that Google continues to drive users to these sites (Pornhub, OnlyFans, XVideos, XHamster, and others), which are likely hosting criminal content and are often listed on the first page of results. In doing so, Google is driving up the profits of these and other pornography companies and likely leading people to view criminal acts for their own pleasure, furthering the exploitation of survivors.

Google Search drives people to pornography sites when they search for themes of rape, racism, incest, sexual abuse of minors, and other pornography with illegal or socially damaging themes.

When users search for illegal, violent, incest, and racist-themed pornography, such as “forced sex porn,” “drugged porn,” “white supremacist porn,” “Asian slave porn,” “leaked porn,” “hidden camera porn,” or “teen porn,” Google Search returns pornography sites.

We have raised this concern with Google before and have asked Google to surface articles, resources, or commentary on the harms of racist or rape pornography, yet Google Search serves up the exploitation itself.

In fact, while the NCOSE Law Center was conducting its due diligence review of the content for the Dirty Dozen List, it filed a report with the National Center for Missing & Exploited Children (NCMEC) because one of the rape-themed websites in the results for “forced sex porn” displayed apparent CSAM on its homepage (see the screenshot of the search results page in the “Proof” section; no images are shown). These websites are advertising and hosting real, not simulated, rape. There is absolutely no reason rape-themed searches should yield any pornographic sites, and it is irresponsible for Google to permit these websites to be indexed in Google Search at all.

The specific search for “rape porn” no longer leads to porn sites on the first page, as it did a few months ago when we last raised this concern with Google, but hardcore porn sites still show up on subsequent pages. This is not good enough. Google should not surface any results for any combination of terms indicating rape-themed pornography, should ban websites that clearly and openly advertise rape content from Google Search altogether, and should at the very least consider removing pornography sites from the first results page for other violent and socially damaging terms.

Insufficient Reporting and Removal Mechanisms for Nonconsensual Pornography

Google has yet to prioritize survivor-centered practices for removing nonconsensual sexual content from search results, leaving many victims to live with ongoing trauma and extensive consequences to their daily lives as their abuse is replayed over and over online. Google’s reporting mechanisms for such content are overly complicated, retraumatizing, and insufficient.

Unfortunately, the National Center on Sexual Exploitation has witnessed this firsthand in the course of serving survivors of sex trafficking and other sexual abuses whose assaults were recorded and uploaded online.

Though we know Google is reviewing its reporting systems with the goal of making them more intuitive and less cumbersome, Google currently makes a policy choice to believe, by default, the hosts or uploaders of content over the people depicted in and harmed by the content. In other words, the onus is on survivors to prove consent was not given (which is not possible), rather than on those posting the material to prove consent was obtained.

Who has more to lose: Google and those hosting the material, or survivors?

NCOSE Law Center Analysis of the Insufficient Google Reporting Mechanism

In general, when a survivor comes to the NCOSE Law Center and shares that content depicting their abuse is circulating online, the removal and takedown process requires hundreds of hours of writing, sending, and following up on requests to various web hosting providers and websites. Even so, the content does not come down easily. Even when websites and web hosting providers are put on notice that the content contains Child Sexual Abuse Material (CSAM) or depictions of sex trafficking, they are often reluctant to remove it, refuse to accept responsibility, or ignore the requests altogether.

Even Google’s newly rolled-out reporting portal for nonconsensual explicit or intimate personal images requires the reporting party to provide the full name of the individual in the content, the URL(s) of the webpages displaying the content, the Google Search result URL(s), and screenshot(s) of the content being reported. Although we understand it is critically important that Google can identify the content requested for removal, taking screenshots is extremely triggering for survivors, as well as for those assisting victims with the removal process. It also requires survivors to duplicate, and thereby increase the circulation of, their own abuse. The harm outweighs the potential usefulness of this requirement, and we urge Google to explore other ways to obtain the same result.

As part of the report, the survivor can indicate that the content in question was uploaded without consent and/or depicts sex trafficking or child sexual abuse material. Once the request is submitted, Google reviews the content to determine whether it violates its policies. In our experience, a response can take several days. In the meantime, the survivor’s images remain easily accessible online, leaving the survivor to suffer ongoing trauma and vulnerability to sextortion, harassment, and other life-altering consequences, such as losing a job, getting kicked out of school, or losing friendships because people may not know the context of the images. Even then, Google moderators may inexplicably determine that the content does not violate Google’s terms and conditions, despite the survivor’s testimony.

How can Google better determine consent than the person who was harmed? Even law enforcement and other experts often cannot tell whether an image or video depicts consensual acts, because no one can see who else might be behind the camera threatening the person to look like “they enjoy it,” or know what threat was used to coerce the creation of the video.

Notably, copyright infringements appear to be handled more swiftly and thoroughly by Google than image-based sexual abuse.

As noted above, the process to report these images is extremely cumbersome, and it is very difficult for moderators to know whether consent has been given. But we do want to commend Google for advances in deduplicating violative nonconsensual images through hashing technology and for delisting violative URLs. We understand that Google does try to detect and remove copied versions of this content in Search, but that modified images may evade detection (at least until more refined technology to detect alterations is developed). Last year, Google also added an “explicit content filtering” checkbox to its reporting form, which does mitigate the need for users to keep resubmitting removal requests.

What is Image-based Sexual Abuse?

Image-based sexual abuse (IBSA) is a broad term that includes a multitude of harmful experiences, such as nonconsensual sharing of intimate sexual images (sometimes called “revenge porn”), pornography created using someone’s face without their knowledge (known as deepfake pornography), nonconsensual recording or capture of intimate nude images (this can include so-called “upskirting” or surreptitious recordings via “spycams” in places such as restrooms and locker rooms), and more.

Such materials are often used to sextort victims into additional sexual abuse, including sex trafficking, or to advertise prostitution and sex trafficking victims.

A 2017 nationwide study found that 1 in 8 American social media users have been targets of the distribution of sexually graphic images of individuals without their consent. Women were significantly more likely (about 1.7 times as likely) to have been targets of nonconsensually distributed pornography compared to men.

Experiencing image-based sexual abuse can cause long-lasting trauma. The Cyber Civil Rights Initiative conducted a survey of victims of nonconsensual distribution of explicit photos in 2012. Of the victims surveyed, 93% reported that they had suffered significant emotional distress due to their pictures being posted online. This can include high levels of anxiety, PTSD, depression, feelings of shame and humiliation, as well as loss of trust and sexual agency. The risk of suicide is a very real issue for victims and there are many tragic stories of young people taking their own lives as a result of this type of online abuse.

What Can Google Do to Fix the Problem?

Improvements Google must pursue:

- Institute a survivor-centered reporting and removal process by immediately removing reported content and blocking it from resurfacing while Google investigates the report.

- As a matter of policy, Google should honor removal requests of sexually explicit content unless the uploader can affirmatively prove consent. This would also lift the likely traumatic burden on Google moderators to try to determine whether the rape scene they’re looking at is real or simulated.

- When age or consent cannot be affirmatively proven, Google should not only remove the Google Search result and prevent it from reappearing, but also require the platforms hosting the materials to remove them or risk negative page ranking consequences.

- Make it easier for users to find Google’s reporting mechanism for nonconsensual sexually explicit materials.

- Create different options to identify violative content in order to decrease the burden on survivors to screenshot their own abuse when making a report.

- Strengthen the hashing system for content deemed criminal or nonconsensual to prevent it from resurfacing, and continue to work with other companies to strengthen the technology industry as a whole in responding to and preventing such content (Google has created two tools for detecting child sexual abuse material, the Content Safety API and CSAI Match, which it shares with others free of charge!).

- Adjust its algorithms so that search terms like “white supremacy porn,” “forced porn,” “drugged porn,” “incest porn,” “teen porn,” and “choking porn,” etc., lead to resources and educational information instead of pornography websites depicting videos of sexual abuse.

- As a policy matter, only allow pornographic websites that employ meaningful age and consent verification to appear in search results and to use marketing tools via Google Search.

*Google’s quote expressing their gratitude to NCOSE was made after the original publish date of the blog and retroactively added.

Proof

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections.

POSSIBLE TRIGGER.

Nonconsensual Content Still Surfacing in Google Search

Racist, Incest, and Abuse Themed Porn

Google Surfacing Known Hubs of Nonconsensual Content

Take Action

Help educate others and demand change by sharing these on social media:

If you or someone you know has had materials shared online without consent, get immediate help here:

U.S. victims of nonconsensual pornography may turn to CCRI’s Crisis Helpline at 844-878-2274 to talk with counselors, 24 hours a day, 7 days a week. They also have a comprehensive online removal guide to address content on Facebook, Instagram, Twitter, Tumblr, Google, Snapchat, and other platforms.

For International Victims, CCRI provides links to resources for Australia, Brazil, Israel and Palestine, Pakistan, South Korea, Taiwan, and United Kingdom.

More Information & Resources:

NCOSE Law Center

Helpful Tips for Victims of Revenge Porn

5 Steps To Take If You're Being Sextorted

5 Steps To Take If You're Being Blackmailed Online

Updates

Stay up-to-date with the latest news and additional resources

Progress

As progress is made in the efforts to protect families, help survivors, and expose the truth, you'll be able to find those details here.

Google's Progress Over the Years:

Google Defaults K-12 Chromebooks and Products to Safety

Google Chromebooks and products for K–12 will default to safety settings for all users under the age of 18. Google is also launching a new age-based access setting, making it easier for school administrators to tailor the experience for users of services like YouTube, Photos, and Maps.

2021

Google Image Search Defaulted SafeSearch for Users Under 18

Now parents can request removal of minors’ pictures in Google Images. Also, Google placed the SafeSearch feature at a higher level in the settings, making it easier to find and turn on.

2021

Google Play Store Prohibits "Compensated Sexual Acts" Apps

VICTORY! Google Is Prohibiting Prostitution Advertisements and Re-Categorizing Sexually Violent Content

2021

Google Images Improves Search, Decreases Unwanted Porn Exposure

Google changed its policies and algorithms on Google Images to decrease exposure to hardcore pornography for users looking up unrelated or innocent terms. Now, Google Images will return educational drawings for most anatomical search terms.

2020

SafeSearch

In 2018, NCOSE met with Google executives and asked for SafeSearch to be placed in the top right corner of Google Images. Google agreed and placed it there. Now more users will know the filter is available to them.

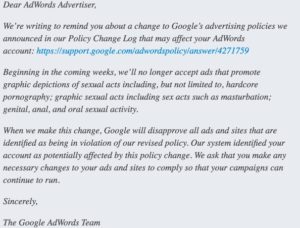

Google AdWords Prohibits Explicit Content

In June 2014, Google enacted policies for AdWords to no longer accept ads that promote graphic depictions of sexual acts or ads that link to websites that have such material in them.

Google Play Cleaned Up

In 2013, Google announced that pornographic and sexually explicit apps would no longer be allowed in Google Play. This policy was enforced in March 2014, with hundreds of apps removed from the app store.

Robust Family Safety Center

Google regularly updates and improves the Family Safety Center with great tools and ways to protect children from exploitation and other online dangers. Check it regularly!
