5.3 billion Google searches per day are now safe!

Think back to the first time you, your child, or someone you care about was exposed to pornography.

If the experience you’re imagining tracks with available data, there’s a good chance the exposure was unintentional.

What was the effect of that first unintentional exposure? Did it burn distressing, indelible images into your mind or your loved one’s mind? Did it set you or them on a path toward a destructive pornography addiction?

Now that you’ve imagined that experience … imagine it didn’t happen. Imagine wiping away all the negative effects that sprang from stumbling on pornography that first time.

This imaginary alternate reality can now be the actual reality for all future generations.

How, you may ask?

Because you joined us in calling on Google to prioritize safety—and they listened!

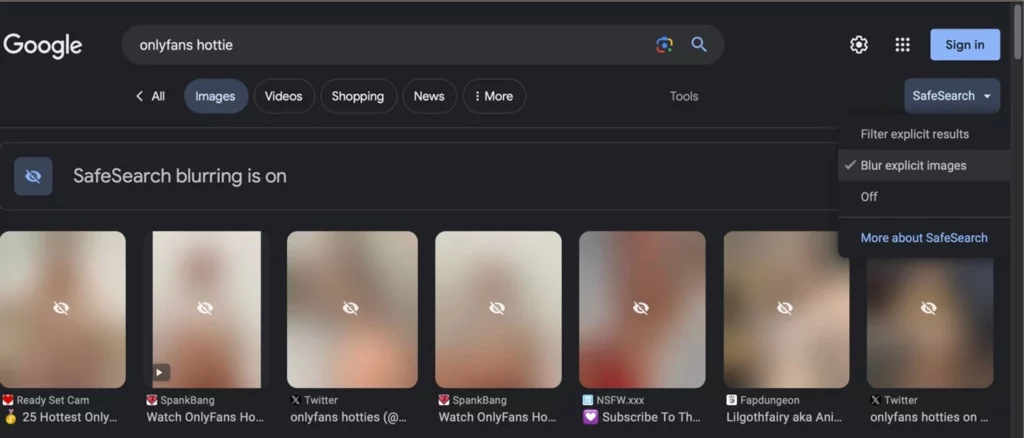

Google Automatically Blurs Sexually Explicit Images for All Users

Last February, we reported Google’s decision to begin automatically blurring all sexually explicit images in Google Images search results, something NCOSE and our supporters (like you!) have been asking them to do for a decade. Our contacts at Google reached out to us directly to let us know the change has officially been implemented! They also formally thanked us for our role in influencing their safety improvements, stating:

“Google is proud to implement improved protections to help people control their personal explicit images and their online experience. We appreciate the feedback from survivors and subject matter experts like the National Center on Sexual Exploitation that help improve practices around online safety.”*

The number of people protected by this change is truly astronomical. Google has an overwhelming market share: roughly 93% of global Internet searches are conducted on Google. And Google Images accounts for 62.6% of all Google searches, amounting to over 5.3 billion searches per day. That’s 5.3 billion Google searches per day that will no longer accidentally expose people to pornography!

Thanks to the automatic blurring, the only people who will now be exposed to pornography on Google Images are those who actively and intentionally opt in to see it. This is crucial because numerous studies show that, more often than not, youth viewing of pornography and/or a person’s first exposure to pornography is unintentional (see here, here, and here). Further, a 2021 UK study showed that more children are exposed to pornography on search engines than on dedicated pornography sites.

To all who have followed NCOSE, signed our actions, donated, or otherwise taken part in our campaigns against corporations, we have one question for you:

How does it feel to be a hero for all future generations?

How does it feel to know that, thanks to your actions, children and adults now have a real fighting chance to live their lives free from the scourge of pornography?

YOU have done this, and we couldn’t be more grateful!

What Exactly Has Changed and Why It Is Important

Prior to this update, Google was already automatically filtering out all sexually explicit search results (not just in Google Images) when a user was signed in to an under-18 account. Together, we convinced them to do this in 2021, when you took part in our Dirty Dozen List campaign against Google Chromebooks. However, the new automatic blurring for all users is still extremely important, for many reasons.

First, because it is possible to use Google without signing in to an account, and because children have been known to lie about their age when setting up online accounts, the under-18 filter would miss many children.

Second, even adults should not be bombarded with pornography against their will; it is arguably a form of sexual harassment. And although you helped us influence Google to improve their algorithms in 2020, reducing the likelihood that innocent searches would surface pornography, the algorithms were not perfect, and it was still possible to stumble on pornography without looking for it.

We are overjoyed that, after years of asking Google to automatically blur sexually explicit images, they have finally agreed. Given Google’s enormous power and overwhelming market share, their action sends a strong message to tech companies across the world that they can and should default to safety!

That’s Not All! Google Updates Reporting/Removal Process for Image-Based Sexual Abuse (IBSA)

Automatically blurring sexually explicit images is not the only positive change Google has made. They have also updated their reporting and removal process for non-consensually created or shared sexually explicit images—a form of image-based sexual abuse (IBSA).

NCOSE has been calling on Google to adopt a more survivor-centered approach to IBSA reporting and removal for almost a decade, and while there is still work to be done, the changes they have made are progress worth celebrating.

Simplified Reporting Process

Reports for IBSA are now filed through a single form that guides survivors through the process, rather than forcing survivors to navigate multiple forms with confusing (and at times contradictory) language. Survivors can now report up to 1,000 URLs in the same form.

Google will now also notify survivors when their report has been received and of any status updates (e.g., whether action has been taken or whether Google needs further information to investigate the case).

Allowing Individuals to Revoke Consent

Google now allows removal requests for sexually explicit imagery that was initially created or shared consensually, but for which the survivor has since revoked consent. This is an incredible change that allows survivors to take back control of their lives.

However, Google does not allow removal requests in cases where the survivor is currently receiving financial compensation for the material. Unfortunately, it can be difficult to prove that a survivor is not receiving compensation, as sex traffickers often monetize the material through online profiles that appear to be under the survivor’s control but are not.

For example, OnlyFans is a platform where it is often assumed that pornography performers have complete independence and are creating their own content, but even this platform has come under fire for sex trafficking. One 2020 study analyzed 97 Instagram accounts of OnlyFans “creators” for indications of sex trafficking and found that 36% of these accounts were classified as “likely third-party controlled.”

Option to Remove Pornographic Content from Searches Containing Your Name

Google now permits someone to request that pornographic content be filtered out of the results for search queries that include their name, even if they themselves are not depicted in that content.

For example, if Amanda Fields (hypothetical individual) discovers that searches for her name return pornographic images of another woman, she (or someone who is authorized to speak on her behalf) can report the images and request that Google prevent them from surfacing when someone searches the name “Amanda Fields.”

What Do We Still Want Google to Do?

While Google’s updates to its IBSA reporting and removal process are positive, the company still has a long way to go to be truly survivor-centered. We continue to press Google to make the following changes:

Remove reported IBSA during the investigation process

When IBSA is reported, Google should immediately remove the content and block the ability for it to resurface while the report is being investigated. Currently, Google leaves the reported content up throughout the entire investigation process. That time period can have devastating ramifications, as people are able to download and re-share the material on other platforms, making it virtually impossible for survivors to ever get it permanently taken down.

Honor removal requests unless consent is affirmatively proven

Google’s current policies place the onus on survivors to prove consent wasn’t given, rather than requiring those who posted the reported material to prove consent was obtained. This burden of proof must be switched. Just as with in-person sexual relationships, when someone says “no,” we do not say “prove you really mean that.” Consent should never be assumed as the default, least of all when someone is already stating they do not consent.

As a matter of policy, Google should honor removal requests of sexually explicit content unless the uploader can affirmatively prove consent. In the absence of affirmative proof, not only should Google remove the material from search results, but they should also require the platforms hosting the material to remove it or risk negative page ranking consequences.

*Google’s quote expressing their gratitude to NCOSE was made after the original publish date of the blog and retroactively added.