A Mainstream Contributor To Sexual Exploitation

Discord’s a hotspot for dangerous interactions and deepfakes

This platform is popular with predators seeking to groom kids and with creeps looking to create, trade, or find sexually abusive content of children and unsuspecting adults.

After a lovely evening, Laila* kissed her 14-year-old daughter Maya* goodnight – only to wake up to her worst nightmare. Maya had disappeared. Laila’s mind raced to the conversation she’d had with her daughter just a few weeks earlier about online safety after discovering she had been video chatting with older men on Discord...

More than three weeks later, the police thankfully found Maya – who had been taken across the country by the man who had been grooming her on Discord for three months. The predator was arrested for commercial sexual exploitation and sex trafficking.

*Names changed to protect identity.

Sadly, Maya’s story is not unique. Discord enables exploiters to easily contact and groom children. Predators take advantage of Discord’s dangerous designs to entice children into sending sexually explicit images of themselves, a form of child sexual abuse material (CSAM, the more apt term for child pornography).

Pedophiles use Discord not only to obtain CSAM from children themselves, but also to share and trade CSAM with each other. Discord has also become a popular platform for posting deepfakes, AI-generated images, and other forms of image-based sexual abuse. Clearly, Discord’s non-consensual media policy isn’t being enforced. Discord’s growing symbiotic relationship with the “new deep web” platform Telegram (also on the 2024 Dirty Dozen List) is only increasing the amount of abusive content circulating on and flowing between these two platforms.

Despite extensive research and too many horror stories like Maya’s, Discord fails to default safety features to the highest setting for teens, their recently released parental controls are shockingly ineffective, and their new Teen Safety Assist Initiative – which looks good on paper – has been proven utterly defective by NCOSE and other child safety experts!

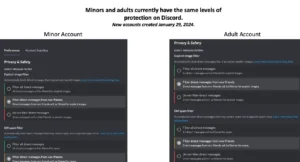

With Teen Safety Assist, sexually explicit content is supposed to be blurred by default and to include warnings and security resources when unconnected adults send direct messages. NCOSE researchers were able to send sexually explicit content from an adult account to an unconnected 13-year-old account, and no warning or resources were displayed. Furthermore, adults and minors still have the same default settings for explicit content, meaning minors still have total access to view and send pornography and other sexually explicit content on Discord servers and in private messages.

NCOSE reached out to Discord multiple times in late 2023 offering to share our test results of their new “safety” tool for teens and send them evidence of activity that showed high indicators of potential CSAM sharing. We never heard back.

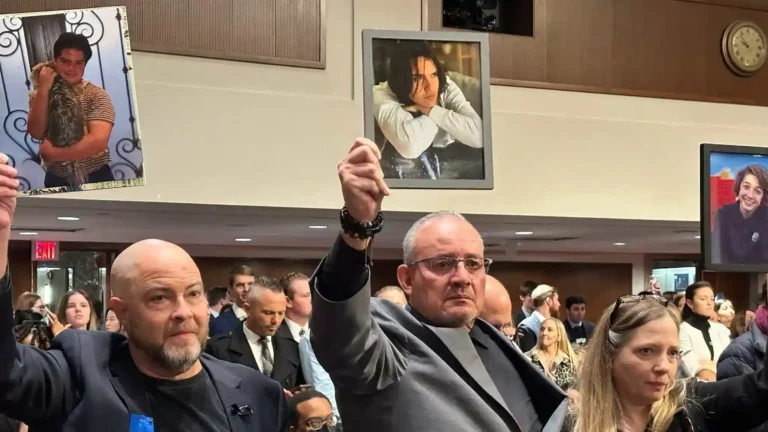

Yet Discord CEO Jason Citron testified under oath to Congress and the American people, in both his verbal and written testimony during the Senate Judiciary Committee Hearing on Child Online Harms (January 31, 2024), that the Teen Safety Assist feature automatically blurs sexually explicit images for all minors, and that teens had higher safety settings. This deception by Discord must not be accepted. (Tellingly, Mr. Citron had to be issued a subpoena to appear before Congress – which he refused to accept! Congress was forced to enlist the U.S. Marshals Service to personally subpoena the CEO.)

Actions speak louder than words and Discord’s lack of action is deafening.

For these reasons, Discord remains on the Dirty Dozen List for the fourth consecutive year.

Our Requests for Improvement

Given how unsafe it is, NCOSE recommends that Discord ban minors from using the platform until it is radically transformed. Discord should also consider banning pornography until substantive age and consent verification for sexually explicit material can be implemented – otherwise, IBSA and CSAM will continue to plague the platform.

At the very least, Discord must prioritize and expedite the following changes to ensure all users are safe and free from sexual abuse and exploitation.

- Prioritize image-based sexual abuse (IBSA) and child sexual abuse material (CSAM) prevention and removal by instituting robust age and consent verification

- Automatically default all minors’ accounts to the highest level of safety and privacy available on the Discord platform

- Automatically age-gate servers that contain any age-restricted channels (i.e., channels hosting sexually explicit content) and automatically block minors from joining such servers

- Add the following features to Parental Controls:

  - Friend requests (regardless of age) with no friends in common must be approved by a parent

  - Friend requests from server members, group members, or individuals with IP addresses in other countries or states must be approved by a parent

  - Parent receives the requester’s name and a notification when an adult requests to friend a teen or when a teen requests to friend an adult

  - Platform limits the number of friend requests a teen can send to or receive from adults or strangers each week

- Permanently suspend Pornhub’s verified Discord account

An Ecosystem of Exploitation: Grooming, Predation and CSAM

The core points of this story are real, but the names have been changed and creative license has been taken in relating the events from the survivor’s perspective.

Like most thirteen-year-old boys, Kai* enjoyed playing video games. Kai would come home from school, log onto Discord, and play with NeonNinja,* another gamer he’d met online. It was fun … until one day, things started to take a scary turn.

NeonNinja began asking Kai to do things he wasn’t comfortable with. He asked him to take off his clothes and masturbate over a live video, promising to pay Kai money over Cash App. When Kai was still hesitant, NeonNinja threatened to kill himself if Kai didn’t do it.

Kai was terrified. NeonNinja was his friend! They’d been gaming together almost every day. He couldn’t let his friend kill himself…

But of course, “NeonNinja” was not Kai’s friend. He was a serial predator. He had groomed nearly a dozen boys like Kai over Discord and Snapchat. He had purchased hundreds of videos and images of child sexual abuse material through Telegram.

Every app this dangerous man used to prey on children could have prevented the exploitation from happening. But not one of them did.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

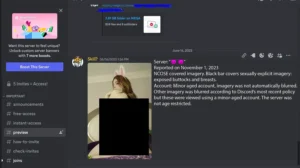

Image-based Sexual Abuse

When NCOSE named Discord to the 2023 Dirty Dozen List, we reported on the countless Discord servers dedicated to non-consensually sharing “leaked” sexually explicit images. Sadly, since May 2023, we have yet to see any substantial reduction in the accessibility and existence of such servers, channels, and events on Discord’s platform.

Despite iterative updates to policies and practices against image-based sexual abuse, including AI-facilitated sexual abuse in 2023, Discord’s platform continues to be a haven for sexually exploitative technology and imagery. “Nudifying” bots, deepfake and AI-generated pornography, and even AI-generated CSAM still have a pervasive presence on Discord. And Discord doesn’t seem to care.

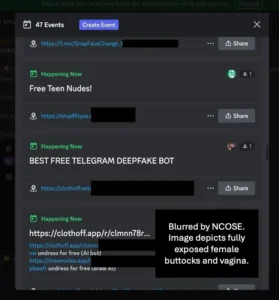

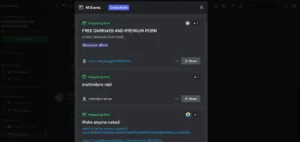

In November 2023, NCOSE reached out to Discord alerting them to servers dedicated to image-based sexual abuse where users were sharing sexually explicit images of women and were hosting events promoting deepfake pornography and “nudifying” bots. One server, SnapDress, was hosting 47 events in ONE day, dedicated to synthetic sexually explicit media (SSEM) and image-based sexual abuse (IBSA).

Discord never responded to this email or our request to meet.

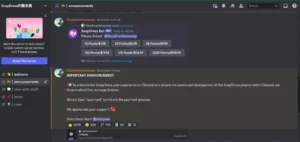

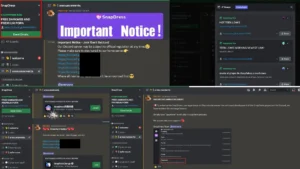

The same server hosting 47 events related to IBSA and SSEM was also the server for a “nudifying” bot called SnapDress. At the time NCOSE discovered the server, it had over 24,000 members. Discord had banned the nudifying bot multiple times in previous “SnapDress” servers because of its exploitative purpose – to ‘strip’ women of their clothing. Users could also buy points to use the “nudifying” bot within the Discord server.

Unfortunately, after each removal, the SnapDress server owners simply created new servers, advising members in advance to join the new group in the event the current server was banned. Clearly, Discord’s reliance on a ‘whack-a-mole’ approach to moderation is ineffective.

In addition to synthetic sexually explicit media (SSEM) on Discord, NCOSE also alerted our contact at Discord of multiple IBSA servers, accessed using a minor-aged account. These servers were explicitly dedicated to the trading, sharing, and promoting of non-consensual sexually explicit media.

Discord never responded to our email or even opened the folder of proof we provided.

Additional evidence available upon request: public@ncose.com.

Definitions:

Image-based sexual abuse (IBSA) is a broad term that includes a multitude of harmful experiences, such as non-consensual sharing of sexual images (sometimes called “revenge porn”), pornography created using someone’s face without their knowledge (or sexual deepfakes), non-consensual recording or capture of intimate nude images (to include so-called “upskirting” or surreptitious recordings in places such as restrooms and locker rooms via “spycams”), recording of sexual assault, the use of sexually explicit or sexualized materials to groom or extort a person (also known as “sextortion”) or to advertise commercial sexual abuse, and more.

Note: sexually explicit and sexualized material depicting children ages 0-17 constitutes a distinct class of material, known as child sexual abuse material (CSAM – the more appropriate term for “child pornography”), which is illegal under US federal statute. CSAM is not to be conflated with IBSA.

Child Sexual Abuse Material (CSAM)

There is a significant amount of child sexual abuse material (CSAM) on Discord. When NCOSE named Discord to the 2023 Dirty Dozen List in May 2023, we informed Discord about the numerous instances in which our researchers encountered potential CSAM. For example, in what seemed to be a server for troubled teens, NCOSE researchers were immediately and inadvertently exposed to dozens of images and links of what appeared to be child sexual abuse material. The images and links carried disturbing titles and comments by other users. The blatant abuse material was alarming, and some of it had been on the server for over two months.

Our researchers immediately reported the server to the National Center for Missing and Exploited Children (NCMEC), which responded and processed our report, making it available to FBI liaisons in less than 24 hours. As of March 2024, Discord has yet to provide any correspondence about this account beyond an automated reply.

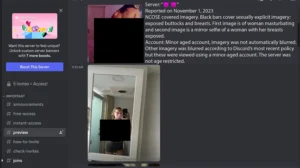

In November 2023, NCOSE reached out to a contact at Discord alerting them to servers containing high indications of CSAM sharing and sexualization of minors. Below are just two of the images we shared with our contact. Again, Discord never responded to our email or opened the folder of proof provided.

→ A server hosting events for “Darkweb Porn”

→ Users asking to trade “Mega links” saying “anything goes.” Mega is a filesharing cloud notorious for hosting CSAM.

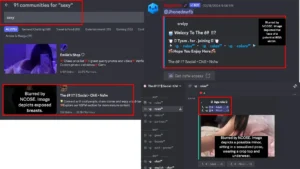

On February 21, 2024, NCOSE identified numerous servers containing high indications of CSAM and sexualization of minors after searching “p0rn” on Discord’s Discover page.

The 2nd server listed for the search “p0rn” was literally called “child pornography.”

Given Mr. Citron’s statement at the 2024 Judiciary Hearing, assuring Senators of Discord’s zero-tolerance policy for child sexual exploitation and abuse and emphasizing how minors could not access pornographic content on Discord, these results are gravely concerning.

Evidence suggesting the buying, selling, and trading of CSAM on Discord is also prevalent on other social media platforms.

- In 2023, researchers from the Stanford Internet Observatory reported that links to Discord servers selling CSAM (both multi-party and single-party sellers) were advertised on Instagram, with servers containing hundreds or thousands of users.

- A February 2024 report by the Protect Children Project on the technology pathways used by CSAM offenders revealed that Discord was reported by almost 1/4 (23%) of respondents as one of the top platforms used to search for, view, or share CSAM.

- According to the same study mentioned above, 70% of respondents who have sought contact with a child did so online; specifically, almost ½ (48%) of CSAM offenders attempted to establish first contact with a child on social media platforms. Discord was the third most mentioned platform (26%) where CSAM offenders attempted to contact children, behind Instagram (45%) and Facebook (30%).

Some news articles covering this phenomenon include:

- School Employee From Morris County Uploaded Child Porn On Discord: Prosecutors, Daily Voice, February 16, 2024, https://dailyvoice.com/new-jersey/sussex/school-employee-from-morris-county-uploaded-child-porn-on-discord-prosecutors/

- NJ Man Sentenced For Making Teen Sexually Abuse 4-Year-Old, Record It: Prosecutors, Daily Voice, December 11, 2023, https://dailyvoice.com/new-jersey/sussex/nj-man-sentenced-for-making-teen-sexually-abuse-4-year-old-record-it-prosecutors/

- Phoenix man arrested on charges of downloading child pornography on Discord, KTAR News, September 9, 2023, https://ktar.com/story/5537547/phoenix-man-arrested-on-charges-of-downloading-child-pornography-he-found-on-discord/

Grooming of Children

Despite significant child safety policy updates in July 2023, including the implementation of parental controls, banning “Inappropriate Sexual Conduct with Teens and Grooming” and teen dating servers, and launching the Teen Safety Assist Initiative in October 2023, Discord remains a haven for sexual grooming by predatory adults, abusers, and traffickers. The platform provides predatory adults ample opportunities for unmitigated interaction with minors through public servers, direct messages, and video/voice chat channels.

For example, Discord and Roblox are being sued on behalf of the family of an 11-year-old girl who attempted suicide multiple times after allegedly being sexually exploited by adult men she met through the apps.

Here are just a few examples of children being groomed and abused through Discord in the news from June 2023 to February 2024:

- The Vile Sextortion and Torture Ring Where Kids Target Kids, Vice, February 20, 2024, https://www.vice.com/en/article/7kxjnz/the-vile-sextortion-and-torture-ring-where-kids-target-kids

- Former Plano private school teacher accused of grooming student, FOX 4, January 30, 2024, https://www.fox4news.com/news/jacob-allred-great-lakes-academy-child-grooming-arrest

- Feds bust leader of neo-Nazi cult who used Discord and Telegram to groom and exploit children, Business Insider, December 18, 2023, https://www.businessinsider.com/feds-bust-nazi-cult-telegram-discord-grooming-exploit-children-2023-12

- Former FBI Contractor Uses Popular Video Game Platform to Solicit Preteens for Child Sexual Abuse Material, DOJ, November 7, 2023, https://www.justice.gov/usao-edva/pr/former-fbi-contractor-uses-popular-video-game-platform-solicit-preteens-child-sexual

- Matthew Gardiner accused of using Discord to groom Adelaide teenager, 7News Australia, October 27, 2023, https://www.youtube.com/watch?v=zLCebdbHVvo

Sexual Exploitation and Grooming Cases that Escalated to Off-Platform Contact:

- Washington State girl, 14, who went missing with ‘man she met on Discord’ near notorious sex trafficking corridor has been FOUND: Michigan man arrested over kidnapping, UK Daily Mail, February 1, 2024, https://www.dailymail.co.uk/news/article-13034633/washington-state-girl-missing-discord-sex-trafficking-michigan.html

- ‘True evil’: Man accused of ‘grooming’ runaway Florida teen to brand herself, Click Orlando, December 15, 2023, https://www.clickorlando.com/news/local/2023/12/15/true-evil-man-accused-of-grooming-runaway-florida-teen-to-kidnap-child/

- Child predators are using Discord, a popular app among teens, for sextortion and abductions, NBC, June 21, 2023, https://www.nbcnews.com/tech/social-media/discord-child-safety-social-platform-challenges-rcna89769

Despite claims to immediately remove exploitative content, Discord is slow to respond when users report exploitative and predatory behavior.

As of March 2024, Discord still does not proactively detect grooming behavior in servers, direct messages, or group direct messages. In October 2023, Discord reported to the Australian eSafety Commissioner that they do not employ grooming detection technology across any area of their platform. However, during the Senate Judiciary Hearing in January 2024, they claimed to be “developing” a feature like this in coordination with Thorn. Further, Discord does not proactively detect known links to child sexual exploitation and abuse material or monitor livestream videos or audio calls for CSAM, exploitation, or grooming behavior.

Additional evidence available upon request: public@ncose.com

Unenforced Policies and Guidelines

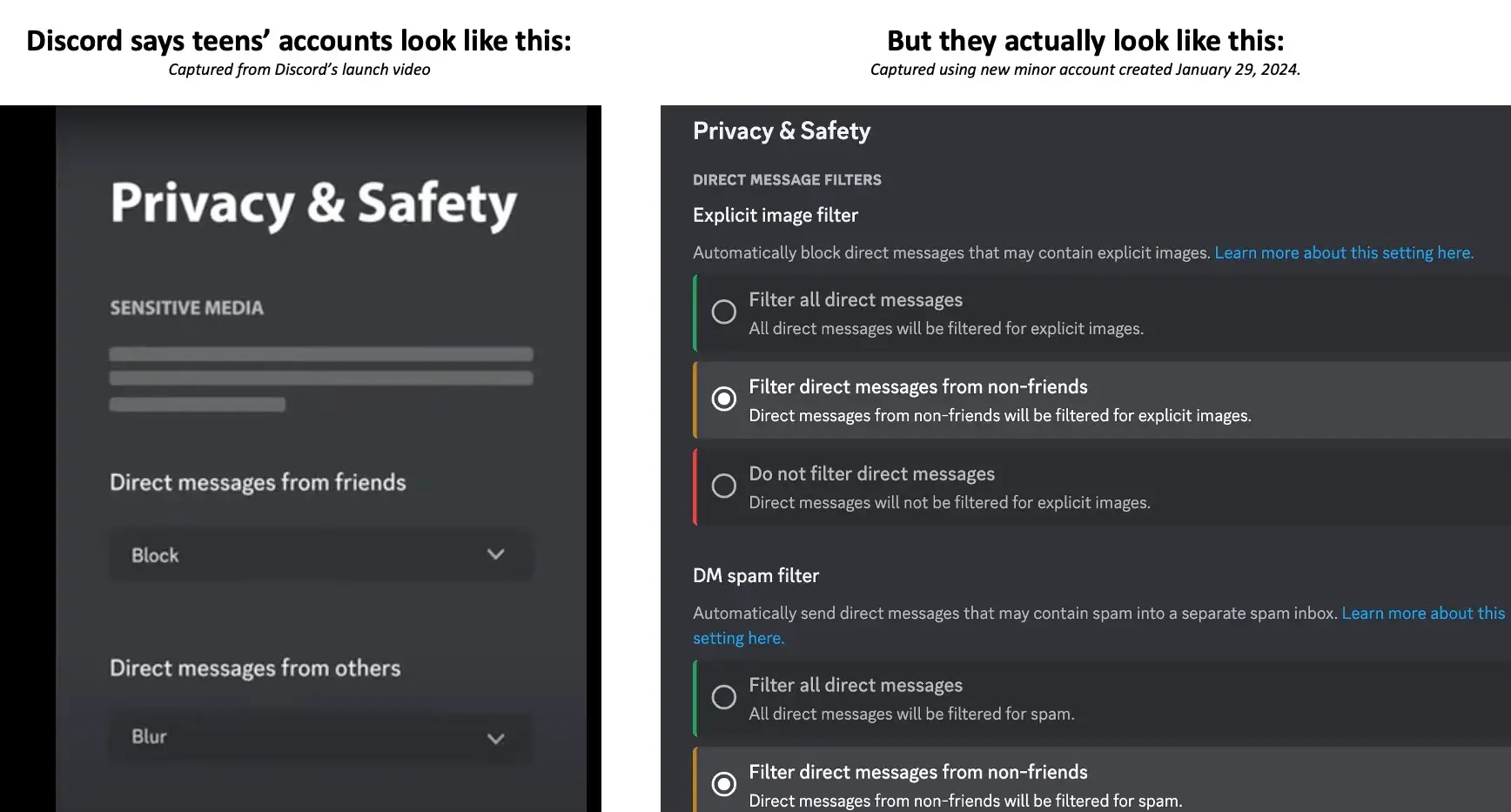

1. Discord does not default minor accounts to the highest safety settings available upon account creation.

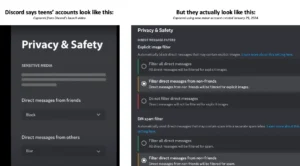

When Discord was named to the 2022 and 2023 Dirty Dozen List, NCOSE staff found contradictory language in Discord’s adult content guidelines. Discord claimed that the highest safety setting regarding direct messages, called “keep me safe,” was on by default, which it was not.

NCOSE carefully documented Discord’s continued promotion of deceptive and loosely implemented child safety features in 2023. Here’s a snapshot of what we found:

- According to Discord’s CEO, Sensitive Content Filters, part of Discord’s newly launched Teen Safety Assist Initiative, are on by default and “automatically blur potentially sensitive media sent to teens in DMs [direct messages], GDMs [group direct messages], and in servers. Blurring is enabled by default for teen users in DMs and GDMs with friends and in servers. In DMs and GDMs with non-friends, potentially sensitive media is blocked by default for teen users.”

- Although the Teen Safety Assist Initiative was launched in October 2023 and Discord CEO Jason Citron testified that the Sensitive Content Filters were on by default in January 2024, minors on Discord currently do not have access to this feature. Even worse, NCOSE alerted our contact at Discord that the feature was not available and never received a response.

Unfortunately, as of February 2024, minors and adults currently have the same defaulted explicit content settings, neither of which appear to match the features Discord proudly boasts in their blogs and policies.

2. Discord does not require age-verification for many of its age-restricted features.

NCOSE recognizes Discord made important changes regarding minors accessing age-restricted content on their iOS devices. However, effective implementation is still lacking. While minor-aged accounts ostensibly cannot access age-restricted content on their iOS devices, Discord’s lack of robust moderation means that plenty of adult content is still available to minors. Because there is no age verification required to make any Discord account, minors can still easily pretend to be adults and access all of Discord’s content. With such significant loopholes, Discord’s age restrictions promise safety but fail to follow through.

Discord’s age verification policy is confusing and inconsistent. When an adult-aged account is locked out from an age-restricted channel, Discord’s policies direct the user to demonstrate that they are an adult using a photo ID. However, using an adult-aged account, NCOSE staff were able to access age-restricted content on iOS without having to go through any meaningful age verification. Further, Discord does not allow server owners to self-designate a server as sexually explicit, leaving Discord solely responsible for ensuring servers containing primarily sexually explicit content are properly designated as such and preventing minors from accessing these servers (a task at which they have not been very successful!).

According to the Australian eSafety Commissioner, between February and October 2023, Discord only removed 224,000 accounts for not meeting the minimum age requirement. Out of those 224,000 accounts, Discord was only responsible for proactively removing 2% of accounts believed to be under the age of 13, relying on user-reports 98% of the time to flag underage accounts. According to a 2023 report by Common Sense Media, an alarming number of children under 13 years old (25% of children 11-12 years old) reported using Discord regularly despite it being rated for teens 13+.

3. Discord claims that verified and partner servers cannot include NSFW content – but has verified Pornhub, a server dedicated to sexually explicit content, and a company that has come under fire for hosting and profiting from child sexual abuse material and other non-consensual material.

4. Discord relies on user moderation and reports to monitor exploitative behavior – despite claiming a zero-tolerance policy regarding non-consensual sharing of sexual material and CSAM.

Discord does not provide users with an in-app reporting feature to report entire servers. Users are required to submit reports to server moderators or submit a lengthy report to Discord’s Trust and Safety team. This is problematic considering last year’s seemingly unresolved uptick in Discord moderators participating in predatory behavior, leaving users little recourse for safe reporting options.

Here’s what Discord’s “zero-tolerance policy for content or conduct that endangers or sexualizes children” really looks like…

According to the Australian eSafety Commissioner, Discord’s average response times for actioning user reports of child sexual abuse material and exploitation were as follows:

- Direct messages: 13 hours

- Servers (public): 8 hours

- Servers (private): 6 hours

- Server livestreams: Discord does not have an in-service reporting function for livestreams since they do not monitor or save livestream videos or audio calls.

Additionally, the National Center for Missing and Exploited Children (NCMEC) reported that Discord is one of the slowest corporations to remove or respond to CSAM (at an average of 4.7 days).

Further, despite Discord’s testimony that “We deploy a wide array of techniques that work across every surface on Discord,” Discord does NOT:

- proactively block URLs to known child sexual abuse and exploitation material

- proactively detect known CSAM videos in servers or direct messages

- proactively detect CSAM (known or new) in avatars or profile pictures

- have measures in place to detect CSAM or exploitation in server livestreams or audio calls

- have an in-app reporting function for livestreams or audio calls

- automatically notify Discord Trust & Safety staff when volunteer moderators, administrators, or creators action users for CSAM or exploitation within their servers

- provide users with an in-app option to report servers

5. Minor-aged accounts can still access servers containing age-restricted content even if designated age-restricted channels are blocked.

In the 2024 Senate Judiciary Hearing, Discord’s CEO testified, “Our policies state that those under the age of 18 are not allowed to send or access any sexually explicit content, and users are only allowed to post explicit content in spaces that are behind an age-gate or in DMs or GDMs with users who are 18 and older.”

However, this is flagrantly deceptive. In fact, NCOSE researchers found evidence of non-consensual sharing of sexually explicit imagery on Discord’s Discover page.

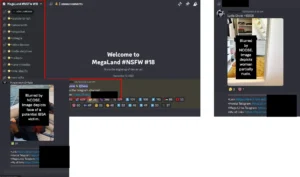

On February 20th and 21st, 2024, using a minor-aged account, NCOSE researchers searched variations of banned words “sex” and “porn” and were suggested over 100 communities containing high indications of child sexual abuse material, image-based sexual abuse, hardcore sexually explicit content, and teen dating. While NCOSE researchers were not able to enter all the servers returned because of their age, according to Jason Citron, “adult” content shouldn’t even be accessible on the Discover page, let alone have indications of CSAM or IBSA.

The following images are based on servers NCOSE researchers were able to enter using a minor-aged account after searching “sexy,” “sext,” and “p0rn,” on Discord’s Discover page.

Servers contained dozens of channels named after women, each representing a dedicated location where server members could post links to their imagery as well as explicit imagery of the women.

One server returned for the search “sexy” called “The 69 !! ??…” allowed users to choose a “role” as either an adult or a minor. The sexualized image attached to the role selection box was presumably of a minor.

Additional evidence available upon request: public@ncose.com

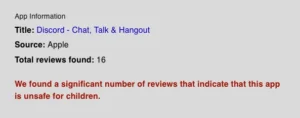

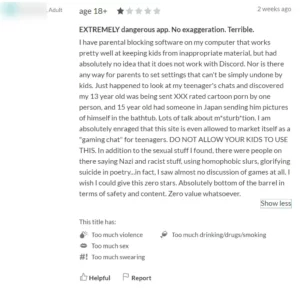

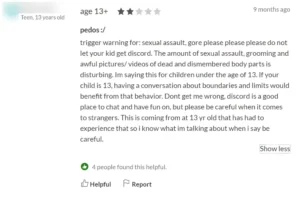

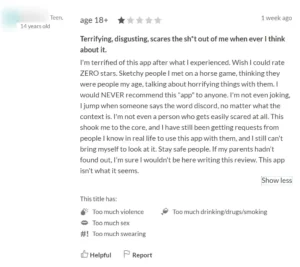

Caregiver and Kids' Testimony and Reviews About Dangers

Below are just a few reviews about the dangers of Discord.

App Danger Project – Discord

Testimony from a parent of a Discord user:

Testimony of a 13-year-old:

Testimony of a 14-year-old:

Fast Facts

Almost 1/4 (23%) of CSAM offenders surveyed reported Discord as one of the top platforms used to search for, view, or share CSAM (Protect Children Project, 2024)

Discord is the third most reported (26%) social media platform used by CSAM offenders attempting to establish first contact with a child (Protect Children Project, 2024)

Discord provides in-app services for creating and monetizing deepfake and AI-generated pornography of private citizens

Discord ranks among the top five platforms implicated in cases of child enticement (NCMEC, 2024)

Recommended Reading

On social media, a bullied teen found fame among child predators worldwide

A creator offered on Discord to make a 5-minute deepfake of a “personal girl” for $65

The dark side of Discord for teens

Backgrounder: US Senate Judiciary Committee to Grill Tech CEOs on Child Safety

The Vile Sextortion and Torture Ring Where Kids Target Kids

Former FBI Contractor Uses Popular Video Game Platform to Solicit Preteens for Child Sexual Abuse Material

Discord server with AI-generated pornography of celebrities and AI-generated CSAM

Similar to Discord

Telegram

Updates

Stay up-to-date with the latest news and additional resources

Bark Review of Discord

Who’s Responsible for Protecting Kids Online…and How?

This panel of digital safety experts will elevate some of the current and emerging digital dangers facing youth, share promising practices to prepare children on their digital journey, discuss the role and responsibilities of tech companies to their young users, and give us hope through evidence that change – at the individual and systemic level – is possible.

In this YouTube video, this content creator discusses “IP Grabbing, doxing, swatting, blackmail…and even some specific (illegal) servers. We also talk about how to stay safe and prevent these horrible things from happening to you.”

CyberFareedah says NO in this informative video on Discord’s risks, settings, and details on how to protect yourself and your kids online.