A Mainstream Contributor To Sexual Exploitation

Legitimizing Exploitative Enterprises

The “world’s largest professional network” provides prostitution and porn companies a platform, allows promotion of deepfake tools, and is rampant with sexual harassment.


Updated 5/15/24: After the Daily Mail published an article featuring LinkedIn’s placement on the Dirty Dozen List for allowing promotion of “undressing apps” used to create deepfake pornography, LinkedIn removed nudifying bot ads and articles from the platform. As of May 2024, NCOSE was unable to find any more posts promoting nudifying apps on LinkedIn.

Deepfake porn is so prolific that it is affecting hundreds of thousands of women, from schoolgirls to Taylor Swift, and is even popping up unchecked on the professional networking site LinkedIn.

LinkedIn is “the world’s largest professional network,” with more than 1 billion members seeking connections “to make them more productive and successful.” But the platform legitimizes and normalizes companies that facilitate and profit from sexual exploitation. Pornography and prostitution sites like Aylo (formerly MindGeek, the parent company of Pornhub), OnlyFans, and Seeking (formerly Seeking Arrangement) are allowed on LinkedIn, despite the fact that extensive evidence of child sexual abuse material (CSAM), sex trafficking, image-based sexual abuse (IBSA), and other nonconsensual content has been found on each of these sites. These are not businesses like any other, and therefore must not be treated as such on a professional network like LinkedIn.

LinkedIn also allows promotion of “nudify” apps used to create deepfake pornography, actually providing instructions and tools for how to “undress” unsuspecting women and girls. The UK Daily Mail reported on LinkedIn hosting deepfakes after speaking with NCOSE.

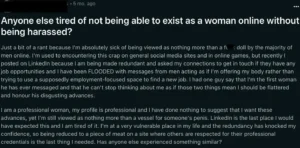

Furthermore, LinkedIn has a major problem with sexual harassment, with one survey reporting that 91% of women received romantic or sexual advances on LinkedIn. NCOSE researchers even found a profile photo that was male genitalia, a form of harassment known as cyberflashing. Not only is the harassment itself highly problematic, but because of LinkedIn’s ineptness at suppressing this type of behavior, the company is in effect pushing women out of one of the primary (if not the primary) online spaces to share professional achievements, build networks, and seek employment.

Lastly, service providers have reported that LinkedIn has been used as a platform on which survivors they serve were sex trafficked and/or had their pornographic or sexually explicit images distributed without consent!

LinkedIn must get out of the business of promoting exploitative enterprises and step up efforts to ensure it is a safe space for women.

Our Requests for Improvement

- Cut ties with companies that facilitate or profit from sexual abuse and exploitation, in particular Aylo (formerly MindGeek, owner of Pornhub), OnlyFans, and Seeking. LinkedIn must block all accounts and posting for these entities, enforcing its policy on illegal, dangerous, and inappropriate commercial activity.

- Immediately remove any content (posts, articles, etc.) promoting tools used for the creation of deepfake pornography, and improve automated detection tools to proactively prevent similar content from being posted in the future, enforcing the policy on nudity and adult content.

- Implement improved blocking/reporting tools, including ability to block an entire company and block/report LinkedIn members outside one’s network without having to view their profile page. (Note: When trying to report a profile picture depicting male genitalia, NCOSE received a message saying, “You don't have access to this profile. The profiles of members who are outside your network have limited visibility. To access more member profiles, continue to grow your network.”)

- Consider a “two-strikes you’re off LinkedIn” policy. Investigate accounts that have been blocked by multiple users.

- Specify in LinkedIn’s Transparency Report how much content that violates policies (e.g., harassment or abusive, violent or graphic, pornography/nudity, child exploitation) is proactively detected and removed by LinkedIn’s automated detection tools and how much is removed after user reports.

An Ecosystem of Exploitation: Artificial Intelligence and Sexual Exploitation

This is a composite story, based on common survivor experiences. Real names and certain details have been changed to protect individual identities.

I once heard it said, “Trust is given, not earned. Distrust is earned.”

I don’t know if that saying is true or not. But the fact is, it doesn’t matter. Not for me, anyway. Because whether or not trust is given or earned, it’s no longer possible for me to trust anyone ever again.

At first, I told myself it was just a few anomalous creeps. An anomalous creep designed a “nudifying” technology that could strip the clothing off of pictures of women. An anomalous creep used this technology to make sexually explicit images of me, without my consent. Anomalous creeps shared, posted, downloaded, commented on the images… The people who doxxed me, who sent me messages harassing and extorting me, who told me I was a worthless sl*t who should kill herself—they were all just anomalous creeps.

Most people aren’t like that, I told myself. Most people would never participate in the sexual violation of a woman. Most people are good.

But I was wrong.

Because the people who had a hand in my abuse weren’t just anomalous creeps hiding out in the dark web. They were businessmen. Businesswomen. People at the front and center of respectable society.

In short: they were the executives of powerful, mainstream corporations. A lot of mainstream corporations.

The “nudifying” bot that was used to create naked images of me? It was built from code hosted on GitHub, a platform owned by Microsoft, the world’s richest company. Ads and profiles for the nudifying bot were on Facebook, Instagram, Reddit, Discord, Telegram, and LinkedIn. LinkedIn went so far as to host lists ranking different nudifying bots against each other. The bot ran as a standalone website using services from Cloudflare, and was embedded on platforms like Telegram and Discord. And all these apps that facilitated my abuse are hosted on Apple’s App Store.

When the people who hold the most power in our society actively participated in and enabled my sexual violation … is it any wonder I can’t feel safe anywhere?

Make no mistake. My distrust has been earned. Decisively, irreparably earned.

Proof

Evidence of Exploitation

WARNING: Any pornographic images have been blurred, but are still suggestive. There may also be graphic text descriptions shown in these sections. POSSIBLE TRIGGER.

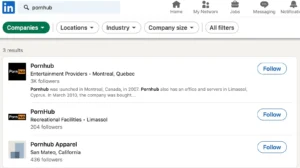

LinkedIn Provides a Platform to Sexually Exploitative Enterprises

LinkedIn continues to provide major sexual exploiter Pornhub and its parent company Aylo (formerly MindGeek) a platform, despite abundant evidence the company has profited from child sexual abuse material (CSAM), sex trafficking, image-based sexual abuse (IBSA), and other nonconsensual content. NCOSE sent LinkedIn an email on July 12, 2023, urging LinkedIn to cut ties with Pornhub and its parent company, but no action was taken. LinkedIn has failed to enforce its own policy prohibiting “any content that promotes or facilitates human trafficking, exploitation, or criminal sex acts, including escort, prostitution, or mail-order bride services.”

Aylo, the parent company of Pornhub, is facing 10 sex trafficking lawsuits filed since 2020 on behalf of 257 victims across the U.S. and Canada. The latest, filed in U.S. District Court in San Diego in October 2023 on behalf of 62 women, alleges “sex trafficking, human trafficking, racketeering and conspiracy to commit racketeering” based on Pornhub’s “partnership with GirlsDoPorn to ‘advertise, sell, market, edit, and otherwise exploit GirlsDoPorn’s illegal sex trafficking videos on its websites.’”

Additionally, last year, two class action lawsuits against Aylo were certified in federal courts: one in November alleging Aylo “systematically participat[ed] in sex trafficking ventures involving tens of thousands of children by receiving, distributing, and profiting from droves of child sexual abuse material (CSAM),” and another similar case in December. Most recently, Pornhub’s parent company admitted to profiting from sex trafficking, according to an announcement from the United States Attorney’s Office for the Eastern District of New York.

A series of damning videos released in 2023 by Sound Investigations revealed Pornhub’s knowledge of and indifference toward sexual exploitation on its platform. These videos, in which undercover investigators talked with Pornhub employees, exposed:

- A “loophole” that allows verified users to upload videos of people without having to show their faces, a feature exploited by rapists and sex traffickers

- Advertisers on Pornhub and other sites don’t have to verify the age, consent, or identity of people featured in pornographic advertisements

- Pornographic ads for their suite of pornography companies perform much better when younger-looking actors (i.e., “guys that look like 15”) are featured.

These investigations resulted in a letter being sent to Aylo by 26 state Attorneys General, demanding accountability from the platform for child sexual abuse material (CSAM) and other allegations. The following are further examples of sexual exploitation on Pornhub that made headlines in 2023:

- Times of San Diego: Pornhub Parent Company Sued by 61 Women for Videos Published Without Consent

- NBC 15: Pornhub branded ‘serial exploiter’ by watchdog following undercover investigation

- The Irish Times: Woman sues Pornhub claiming recordings of her being sexually abused appeared on the site

- Washington Examiner: Sex Trafficking victims call on judge to recuse himself in Pornhub criminal case

- ABA Journal: Citing allegations of ‘unimaginable suffering’ by child sex-abuse survivors, judge allows class action against Pornhub

Despite all this evidence, LinkedIn continues to provide Aylo a platform to post jobs. A NCOSE researcher found 88 jobs listed for Aylo on LinkedIn on February 6, 2024. That number was up to 99 by February 8:

LinkedIn also has an active profile for Pornhub. In addition, a search for “Pornhub” on LinkedIn found several profiles promoting the platform, including fake profiles using either AI-generated images or the face/likeness of a real person without their knowledge or consent. For example, one profile used the name of a cartoon character, Anarka Couffaine. Another used an explicit image as their profile photo.

LinkedIn provides a platform to other sexually exploitative companies like OnlyFans and Seeking as well. OnlyFans was placed on NCOSE’s 2023 Dirty Dozen List for evidence of child sexual abuse material (CSAM, the more apt term for child pornography), sex trafficking, child online exploitation, harassment, doxing, cyberstalking, and image-based sexual abuse. A NCOSE researcher found several articles openly promoting OnlyFans on LinkedIn:

- The 9 Best Ways To Promote & Advertise Your OnlyFans (April 7, 2023)

- How to Start an OnlyFans Without Followers? (September 12, 2023)

- How To Start an OnlyFans? A Beginner Step-By-Step Guide (October 20, 2023)

- OnlyFans AI: 11 Best OnlyFans Artificial Intelligence Tools To Use (November 7, 2023)

- How to Make Money on OnlyFans Without Showing Your Face (December 30, 2023)

- 7 Popular Fetishes On OnlyFans For Content Creation (February 3, 2024) [Warning: graphic language]

Seeking (formerly Seeking Arrangement) is a platform for “sugar dating,” another term for prostitution, and was featured on NCOSE’s Dirty Dozen List in 2020 and 2021 for targeting college students and people suffering from the economic uncertainty of COVID-19 to groom them to be sexually used by older, wealthier men. Seeking has a profile on LinkedIn, in violation of LinkedIn’s policy on illegal, dangerous, and inappropriate commercial activity.

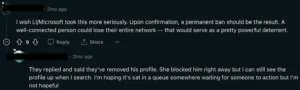

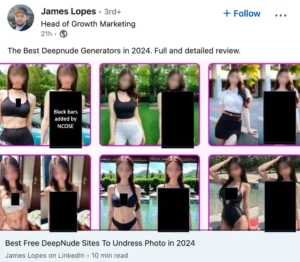

Promotion of Deepfake Pornography Tools, a Form of Image-Based Sexual Abuse (IBSA)

Updated 4.18.24: The UK Daily Mail reported on LinkedIn hosting deepfakes after speaking with NCOSE.

Image-based sexual abuse (IBSA) encompasses a range of harmful activities that weaponize sexually explicit or sexualized materials against the persons they depict. IBSA includes creating, stealing, extorting, threatening or actually distributing sexually explicit or sexualized content without the genuine consent of the depicted individuals and/or for the purpose of sexual exploitation. This includes “the non-consensual use of a person’s images for the creation of photoshopped/artificial pornography or sexualized materials intended to portray a person (popularly referred to as “cheap fake” or “deepfake” pornography).”

One way people create these deepfake sexually explicit images is through “nudify” apps that use AI to remove the clothes from any photo. LinkedIn facilitates the creation of IBSA content by allowing users to promote and publish articles about the best “nudify” apps, including detailed instructions for their use, in violation of its own policy on nudity and adult content, which prohibits “content that depicts, describes, or facilitates access to sexually gratifying material.” A NCOSE researcher easily found several articles on LinkedIn detailing the use of these “nudify” and AI undress tools:

- Top 7 Undress AI Apps To Remove Clothes With AI in 2024 (December 30, 2023)

- Best Free DeepNude Apps To Deepnudify Photo (January 11, 2024)

- 10 Image Nudifier To Nudify Photo Online (Paid & Free) (January 11, 2024)

- Best 10 Ai Clothes Remover App & Website [Free & Paid] (January 12, 2024)

- 8 Best AI Nudifiers in 2024 to Create AI Nudes Online (January 20, 2024)

- 12 Free Undress AI Tools in 2024: AI-Powered Nudifiers (January 22, 2024)

- 13 Free Undress AI Tools Of 2024 (Tested & Ranked) (January 31, 2024)

- AI Nudifiers: 10 Best Free AI Nudifiy Generators (2024) (February 5, 2024)

- 10 Best Free AI Nudifiers To Nudify Images (100 Tools Tested) (February 24, 2024)

These articles serve as “how-to” guides for sexual exploitation, explaining how to use these tools to take anyone’s image and create another image in which the subject is naked. One post promoting the use of these tools included before and after images of young-looking girls who had been virtually undressed.

Sexual Harassment and Stalking

Is LinkedIn the New Tinder? [2023 Study] (Passport Photo Online)

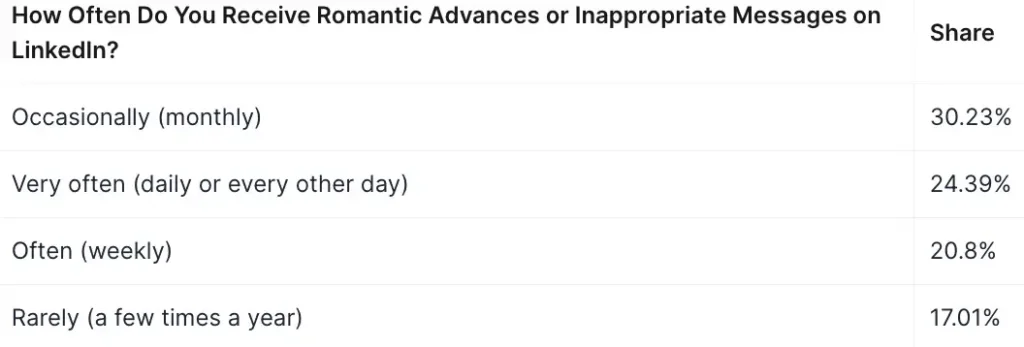

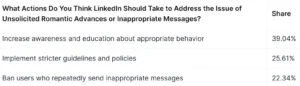

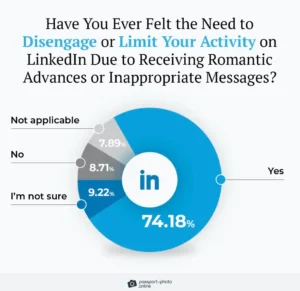

According to a July 2023 survey of over 1,000 women on LinkedIn in the U.S. titled “Is LinkedIn the New Tinder? [2023 Study]”:

- About 91% of female LinkedIn users have received romantic advances or inappropriate messages at least once.

- Most out-of-line messages that slide into women’s DMs are propositions for romantic or sexual encounters (31%).

- Around 43% of females using LinkedIn reported (on multiple occasions) users who tried to get all flirty.

- Nearly 74% of women on LinkedIn have at least once dialed down their activity on the platform due to others’ improper conduct.

These results demonstrate that many women feel unsafe on LinkedIn, and by reducing their activity on the platform, women may have limited job and networking opportunities. LinkedIn’s automated detection tools for sexual harassment are clearly insufficient when this many women receive harassing messages. Additional findings from the survey are presented below.

Source: Passport Photo Online, Is LinkedIn the New Tinder? [2023 Study]

NCOSE researchers conducted our own LinkedIn poll on sexual harassment on the platform from February 22 through March 7 of this year. Out of 319 respondents (233 women, 86 men), 23% said they had ever received unwanted sexually suggestive messages or interactions while using LinkedIn. Though not as high as the percentage found in the Passport Photo Online survey, this still represents a significant number of people experiencing sexual harassment on the platform. It is also important to note that our sample was much smaller, not representative, and included male respondents (most of whom said they had never received unwanted sexually suggestive messages on LinkedIn).

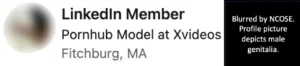

A NCOSE researcher found a LinkedIn profile photo that was male genitalia – a form of harassment known as cyberflashing. When trying to report this profile page, the researcher received a message saying, “You don’t have access to this profile. The profiles of members who are outside your network have limited visibility. To access more member profiles, continue to grow your network.”

Explicit profile picture on LinkedIn. Did not have access to view profile and could not block/report from search result list. Search conducted February 2024.

A NCOSE researcher found several examples on Reddit within the past year of women discussing inappropriate messages they received on LinkedIn, including unsolicited comments on their appearance and requests for marriage. One woman expressed her disappointment that “there isn’t one space where we can talk about our work and accomplishments without it becoming a dating site.” Some women discussed how they began to limit their use of the platform. Others described incidents of men seeing them on a dating site or in real life and then finding them later on LinkedIn. Many women described being stalked on LinkedIn by men they had previously dated or by former coworkers. One woman lamented, “Blocking and reporting these men does nothing.”

(See screenshots from Reddit below. For more information, see PDF proof document.)

Sex Trafficking

The National Trafficking Sheltered Alliance (NTSA) conducted a national survey of 82 service providers and 39 law enforcement respondents to answer Protection Questions 16, 17, and 19 from the U.S. Department of State’s Request for Information for the 2024 Trafficking in Persons (TIP) Report. Of the 29 questions in total, 25 were based on the questions of the TIP Report itself, altered only to define terms or to use more widely accepted terms in our field. Four additional questions addressed further field research for NTSA partners in the full survey for service providers. NCOSE submitted questions for this survey regarding survivors of sex trafficking who had pornography/sexually explicit images made of them by either sex buyers or sex traffickers. Four service providers identified LinkedIn as a platform where victims they serve have been trafficked and/or had their pornography/sexually explicit images non-consensually distributed. (National Trafficking Sheltered Alliance, Survey for Submission to the U.S. Department of State 2024 Trafficking In Persons Report [Unpublished raw data].)

While NCOSE has not found evidence ourselves of sex trafficking on LinkedIn, this finding by NTSA is significant and we urge LinkedIn to further examine how its platform is being used for these crimes and to invest in sufficient detection and prevention tools.

Fast Facts

LinkedIn is among the 10 most used social networks in the United States. With 1 billion members worldwide, LinkedIn is the “world's largest professional network.”

Owned by Microsoft.

LinkedIn provides a platform for Aylo (formerly MindGeek, parent company of Pornhub) to post jobs. NCOSE found 99 jobs listed for Aylo on LinkedIn in February 2024.

About 91% of female LinkedIn users have received romantic advances or inappropriate messages at least once.

Nearly 74% of women on LinkedIn have at least once dialed down their activity on the platform due to others’ improper conduct.