Communications Decency Act

SECTION 230

Misinterpretations of Communications Decency Act (CDA) Section 230 have granted Big Tech blanket immunity for facilitating rampant sexual abuse and exploitation. Until we repeal Section 230, corporations have NO INCENTIVE to make their products safer.

The Greatest Enabler of Online Sexual Exploitation

Now a cornerstone of our online society, Section 230 of the Communications Decency Act (CDA) was laid in the early days of the Internet. In 1996, the primary aim of the CDA’s architects was to shield children from harmful content online. However, as a compromise with the emerging tech industry, Congress also included a special provision in the CDA, Section 230, to promote good-faith efforts to moderate harmful third-party content. Section 230 was meant to ensure that moderation could not be used as a reason to hold platforms liable for things they missed or for all bad conduct by third parties on their sites. This was an attempt by Congress to balance the need for child protection online on the one hand with the growth of a new industry and new technology on the other.

Blanket Immunity

Ultimately, most of the CDA was struck down by the Supreme Court, but Section 230 was left standing. As a result, the child protection provisions were lost and only the web protection provision remained. This disrupted the balance intended by Congress. Today, Section 230 has been interpreted by courts as granting near-blanket immunity to social media giants and online platforms for harms occurring on their platforms, even when they are clearly acting in bad faith, whether knowingly, recklessly, or negligently. This has allowed them to profit from terrible crimes and abuses—sex trafficking, child sexual abuse materials, online grooming, and image-based sexual abuse—with impunity.

As a result, sexual abuse and exploitation have EXPLODED online. Without the threat of legal liability, online platforms have NO INCENTIVE to invest in child safety or even remove heinous content they know about. It is time to correct the imbalance caused by Section 230. A lawless internet is no longer viable. It’s time to restore common sense and make online child protection possible. It’s time to repeal Section 230.

It is time to repeal Section 230 of the Communications Decency Act. We must end immunity for online sexual exploitation.

26 Words That Shaped The Internet

“No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider.”

– Codified at 47 U.S.C. § 230(c)(1)

LEARN THE HISTORY: The Role of CDA Section 230 in Enabling Online Exploitation

Section 230 of the Communications Decency Act (CDA) was originally designed to help protect children online in the early days of the internet. The title of Section 230 that many forget is: “Protection for ‘Good Samaritan’ Blocking and Screening of Offensive Material.” However, this law, which is over 25 years old, has instead become a shield for Big Tech corporations, allowing them to evade accountability even as their platforms are blatantly used to facilitate egregious acts like sex trafficking, child sexual abuse, and image-based sexual abuse (IBSA).

These words were meant to encourage platforms to moderate harmful content without automatically opening themselves up to liability. Yet, through countless court rulings and misinterpretations, this clause has morphed into a blanket immunity that has even immunized platforms when they recklessly facilitate sex trafficking, child sexual abuse, and image-based sexual abuse.

How did we get here?

1996

Section 230 was added to the Communications Decency Act of 1996 primarily to allay concerns raised by the new tech industry that companies moderating content on their websites would automatically become responsible for all third-party content on those websites. At the time, the tech industry was new, and it argued that such a threat of liability would crush the industry and stifle innovation. These concerns arose primarily because of a then-recent court decision out of New York:

Stratton Oakmont, Inc. v. Prodigy Services Co. held that a platform was liable for defamatory material published by a third party on its website because it moderated the content on its platform. These moderation efforts, the court reasoned, meant the platform took on responsibility for all the content on the site even when it was not clear whether the platform had any knowledge of the defamation.

In an attempt to resolve what most agree was not a good result in the Stratton Oakmont case, and to encourage moderation efforts, Congress added Section 230 to the CDA. Unfortunately, the Supreme Court later struck down all of the child protection provisions of the CDA as unconstitutional. So ultimately, all that remained of the Communications Decency Act was Section 230. Over time this well-intentioned legislation was twisted by a series of disastrous court decisions which some tech companies interpret to mean they do not have any responsibility to stop enabling or profiting from the crimes occurring on their platforms.

In 1996, Congress wanted to encourage both child protection and the growth of the nascent Internet. But in 2025, the internet is ubiquitous and the tech industry is the largest, most profitable, and most influential industry in human history. It no longer needs protecting. Children, however, have never received the protection they need. And the need to protect children from online harms is exponentially greater than it was in 1996, when Congress already recognized the dangers of the Internet to children.

It is time to update the law to reflect present-day realities. Why should Big Tech receive artificial government protection and immunity that no other commercial industry enjoys? It is time to take action and place people over profit.

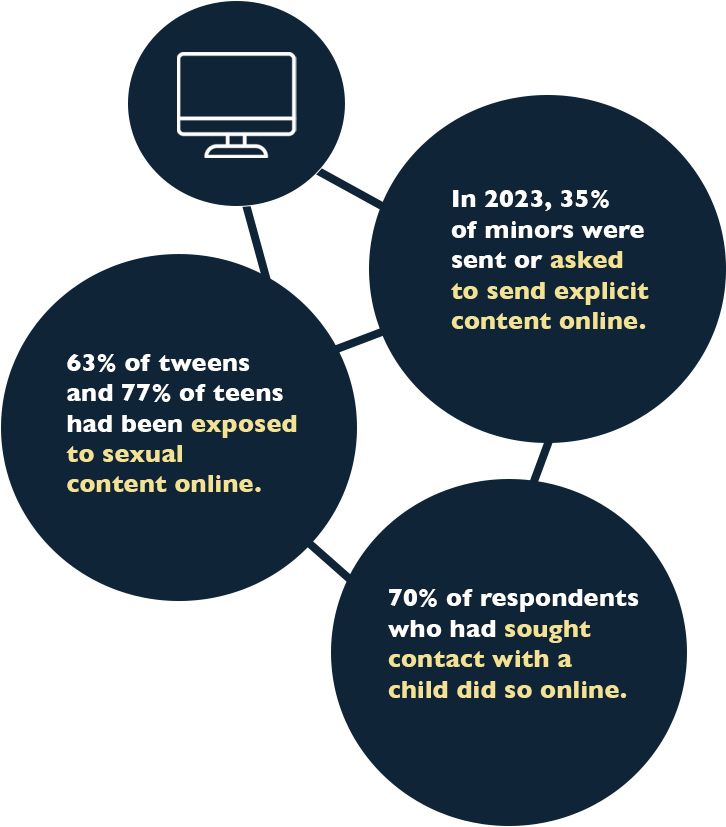

Exploding Exploitation in the Digital Age Due to CDA 230

Sex trafficking and online exploitation have soared in the digital age, fueled significantly by CDA Section 230. Here’s how:

Sex Trafficking: In the past, websites such as Backpage.com, notorious for hosting ads for sex trafficking, used Section 230 to avoid liability. Backpage’s business model thrived on the exploitation of women and children, earning nearly $51 million in California alone from prostitution advertising between 2013 and 2015. After years of relentless advocacy efforts, Backpage.com was taken down by the Department of Justice, but other platforms like Seeking.com (formerly Seeking Arrangement), Rubmaps, Pornhub, and more facilitate the sex trade (including cases of sex trafficking) without adequate accountability.

Online Predators: Social media platforms have been used by predators to groom and exploit children, with no significant legal repercussions for the companies. In fact, Big Tech companies are well aware that abuse happens on their sites, yet year after year they fail to meaningfully prevent it. For example, a study found that Facebook alone was responsible for 94% of online child grooming cases. The New Mexico Attorney General also found that Snapchat was “ignoring reports of sextortion, failing to implement verifiable age-verification, admitting to features that connect minors with adults.” Evidence of this willful ignorance or negligence abounds, as has often been highlighted through NCOSE’s Dirty Dozen List campaign.

Image-based Sexual Abuse: Section 230 has also been invoked in countless lawsuits brought by victims of “revenge porn” (more appropriately termed “image-based sexual abuse”) against hosting platforms like Reddit and Twitter. These sites have argued they cannot be held accountable for third-party content posted on their platforms, even if it is non-consensual and damaging to individuals.

Landmark Legislation – Still Not Enough

Recognizing these issues, Congress passed FOSTA-SESTA in 2018. This landmark legislation was intended to empower sex trafficking victims to file civil lawsuits against platforms and allow states to prosecute websites knowingly facilitating sex trafficking.

Analysis by ChildSafe.AI showed a substantial decline in sex buyer responses to online sex trade ads after FOSTA passed, translating to fewer people being exploited. By removing platforms that enabled traffickers to market victims, FOSTA disrupted a key tool for exploitation. This makes it harder for traffickers to find, groom, and profit from victims, weakening abuse mechanisms and creating a deterrent effect. The policy highlights how targeting online trafficking infrastructure can prevent exploitation before it starts.

While this was a significant step forward, FOSTA-SESTA is not enough.

A Groundswell for Change

No other industry enjoys such freedom from regulation or from accountability for the harm it causes. Bipartisan voices are rising: the status quo must change.

“They [Big Tech companies] refuse to strengthen their platforms’ protections against predators, drug dealers, sex traffickers, extortioners, and cyberbullies. Our children are the ones paying the greatest price … As long as the status quo prevails, Big Tech has no incentive to change the way they operate, and they will continue putting profits ahead of the mental health of our society and youth.”

Are you an NGO that supports CDA REFORM?

Other voices calling for reform:

Rep. Cathy McMorris Rodgers


U.S. Department of Justice

Senator Dick Durbin

Senator Lindsey Graham

Senator Sheldon Whitehouse

Learn More About the Harms of CDA 230


Podcast: The Dirty Dozen List Presents CDA Section 230

The 2025 Dirty Dozen List is Here … And it Comes with a Twist!

2025 Dirty Dozen List Reveals 12 Survivors Prevented from Justice Due to Big Tech’s Liability Shield: Section 230

Senate Hearing is a Step Toward Ending Section 230 Once and For All

Twitter Lawsuit Reinforces Critical Need to Reform Section 230

What Teen Vogue Got Wrong About CDA 230, The Internet’s Liability Shield

FAQs

The tech industry has engaged in relentless fearmongering about the supposedly disastrous effects of reforming CDA 230. In his opening statement at the May 22, 2024, hearing, Ranking Member Pallone aptly called out this behavior, saying:

“I reject Big Tech’s constant scare tactics about reforming CDA 230. Reform will not break the Internet or hurt free speech. The First Amendment, not CDA 230, is the basis for our nation’s free speech protections and those protections will remain in place regardless of what happens to CDA 230.”

It is often erroneously argued that reforming Section 230 would set up an untenable situation where tech platforms would have to moderate content perfectly in real-time, or else be held liable for any harmful content that slipped through despite their best efforts. This is not true. Removing blanket immunity does not automatically equal liability. It simply means that, like any other industry, tech companies can be sued if a reasonable cause of action exists—for example, if negligence or recklessness on the part of the company led to the injury. Tech companies that perform due diligence need not fear liability.

Requiring the tech industry to invest in safety precautions and to factor liability risk into its business models and product design will not break the internet, just as it has never broken any other industry. This is the most profitable industry on the planet, in the history of the world, and it is growing.

Online platforms would not be automatically liable for all third-party content; they would simply face potential liability when negligent or reckless, the same as any other industry. Whether they are liable or not would be decided in the court system.

At the same time, it’s important to remember that these companies CAN be doing much more to prevent abuse online. Right now they are simply not incentivized to, because they assume they’ll be granted immunity.

Internet companies are in the advertising/data mining business. Thus, constant and meticulous monitoring of third-party content is actually their business model. Additionally, the technology industry has made dramatic innovations in the past several years in the application of algorithms, blocking, and filtering. While some large platforms may not be able to monitor every third-party post, they can institute algorithms, filtering, and moderation practices that will catch a large portion of content facilitating sex trafficking. They can also improve by responding quickly and effectively to any reports of suspected commercial sexual exploitation.

We have laws penalizing frivolous lawsuits. In the federal court system, Rule 11(b) of the Federal Rules of Civil Procedure (FRCP) prohibits an attorney from filing documents that promote frivolous claims. If an attorney does so, the court can impose sanctions on the offending attorney and, at times, on the client as well. States have similar laws. Therefore, repealing CDA 230 would not invite frivolous lawsuits. In fact, it would have the opposite effect by allowing legitimate lawsuits. Lawsuits with legitimate claims, where illegal actions can be tied to a company’s actions, will proceed. Right now, such legitimate lawsuits are often halted by Section 230 immunity.

No other industry enjoys CDA Section 230 protections, and yet other industries are not crippled by frivolous lawsuits.

Repealing Section 230 will not hurt startups. Currently, Section 230, in providing immunity against federal antitrust claims, allows larger tech companies to remove competition. Reform would prevent them from doing so and thus, promote open competition. The Department of Justice is aware of how startups feel and notes any reforms to CDA 230 should “avoid imposing significant compliance costs on small firms.”

The federal government already regulates tech companies to some extent through the Digital Millennium Copyright Act. To keep the protection this act offers, websites must remove infringing copyrighted material once they are notified of it, and this applies to both small and large companies online. If companies can effectively monitor for copyrighted material, then the technology exists to monitor for illegal material (such as CSAM).

Share Your Story
