Telegram is a mobile and desktop messaging app popular due to its promise of heightened user privacy. It is growing rapidly and had reached 700 million average monthly users by early 2023 (with India, Russia, and the US being the app’s leading markets in 2022). Based on the number of people who have downloaded the app, Telegram ranks among the ten most popular social networking platforms in the world.

The privacy features Telegram offers include encryption for all chats and end-to-end encryption for calls and “secret chats”. Unfortunately, despite its declared focus on user privacy, Telegram is facilitating a particularly egregious violation of privacy known as image-based sexual abuse.

What is Image-Based Sexual Abuse (IBSA)?

Image-based Sexual Abuse (IBSA) is the creating, threatening to share, sharing, or using of recordings (still images or videos) of sexually explicit or sexualized materials without the consent of the person depicted. IBSA denotes many variants of sexual exploitation, including recorded rape or sex trafficking, non-consensually shared/recorded sexually explicit content (sometimes called “revenge porn”), the creation of photoshopped/artificial pornography (including nonconsensual “deepfake” pornography), and more. Such materials are often used to groom or extort victims, as well as to advertise and commercialize sexual abuse and recordings of sexual abuse.

How Telegram Facilitates Image-Based Sexual Abuse (IBSA) and Child Sexual Abuse Material (CSAM)

Telegram currently takes no effective measures to prevent illegal and abusive sexual images from festering across the platform. They have no meaningful mechanisms for verifying the age and consent of people depicted in sexually explicit or suggestive content, and they have failed to prioritize survivor-centered reporting and response mechanisms. It is therefore no surprise that Telegram has become a hub for the trading of non-consensual sexual images, non-consensual “deepfakes”, and even child sexual abuse material (CSAM, the more apt term for “child pornography”).

In February 2022, a BBC investigative piece shared the stories of survivors who suffered severe trauma after discovering that nude and/or sexually explicit images of themselves had been shared with thousands on Telegram. Sara (pseudonym), whose images were shared with a group of 18,000 members, said that she feared strangers in the street may have seen her images. She says, “I didn’t want to go out, I didn’t want to have any contact with my friends. The truth is that I suffered a lot.” Nigar, who discovered that a video of her having sex with her husband was shared on Telegram in a group with 40,000 members for purposes of blackmail, said “I can’t recover. I see therapists twice a week. They say there is no progress so far. They ask if I can forget it, and I say no.”

In both Sara’s and Nigar’s cases, the abusive images were reported to Telegram, but the platform did not respond. This is not an uncommon experience. In some cases, Telegram’s lack of responsiveness to reports of abuse has led survivors to post on social media in desperation. This is exemplified in the screenshot of a Reddit post below [survivor identifying information redacted]:

Sara and Nigar are far from the only ones. The BBC investigation stated: “After months of investigating Telegram, we found large groups and channels sharing thousands of secretly filmed, stolen or leaked images of women in at least 20 countries. And there’s little evidence the platform is tackling this problem.” Disturbingly, while the BBC was investigating these groups, they were also contacted by a Telegram user who offered to sell them a folder containing child sexual abuse material.

Similarly, a 2020 academic analysis found that the non-consensual sharing of nude or sexually explicit images was normalized and even celebrated in many Telegram groups. Quotes collected from members of these groups demonstrate how openly they solicit non-consensual images, including images that depict nonconsensual acts and images that were taken or shared non-consensually.

- “Rape videos anyone?”

- “Does anyone know *name* *surname* and have her hacked pics?”

- “The entry fee for the group is a picture of your ex!”

The analysis also found that users encouraged sharing the victims’ personal information alongside the pictures:

- “if you know the girls’ names don’t be shy and tell ‘em!”

- “please share the girls’ city when you send pics”

In cases where images were originally taken consensually but distributed non-consensually, the group members often wrongly blamed the victim for the image’s distribution, or said that the non-consensual distribution was justified:

- “If a girl sends the file, the receiver can do whatever he wants with it. It was the girl who agreed to share it!”

- “If some hoes voluntarily send around some pics, why should we be blamed?”

Telegram also facilitates the sharing of recorded sex trafficking, including the sex trafficking of children (the former being a form of IBSA and the latter being child sexual abuse material). For example, one 2020 news article reported:

Cho Joo-bin, a 24-year-old man, hosted online rooms on encrypted messaging app Telegram, where users paid to see young girls perform demeaning sexual acts carried out under coercion, according to South Korean police.

As many as 74 victims were blackmailed by Cho into uploading images onto the group chats, some of the users paid for access, police said. Officials suspect there are about 260,000 participants across Cho’s chat rooms. At least 16 of the girls were minors, according to officials.

Recordings of sex trafficked children qualify as child sexual abuse material. Child sexual abuse materials appear to be regularly shared or sold on Telegram. If Telegram is not adequately identifying and removing these heinous crimes against children, it is certainly not even beginning to scratch the surface of adult image-based sexual abuse.

Join Us in Calling on Telegram to Change!

Sign the petition here to urge Telegram to implement stronger measures against image-based sexual abuse.

The Harms and Prevalence of IBSA

While the term image-based sexual abuse has arisen only in recent years, it describes a phenomenon that has long plagued vulnerable populations, particularly women and LGBTQ+ individuals.

A 2017 U.S. survey conducted on Facebook found that, of the 3,044 participants, 1 in 8 had had sexually graphic images of themselves distributed or threatened to be distributed without their consent. Women were significantly more likely than men (about 1.7 times as likely) to have been victimized in this way. An Australian survey found that 1 in 10 women reported being a victim of “downblousing” and 1 in 20 women reported being a victim of “upskirting.” LGBTQ+ people are at higher risk for image-based abuse than heterosexual people, with 17% of LGBTQ+ internet users reporting that their intimate images were posted or threatened to be posted without their consent.

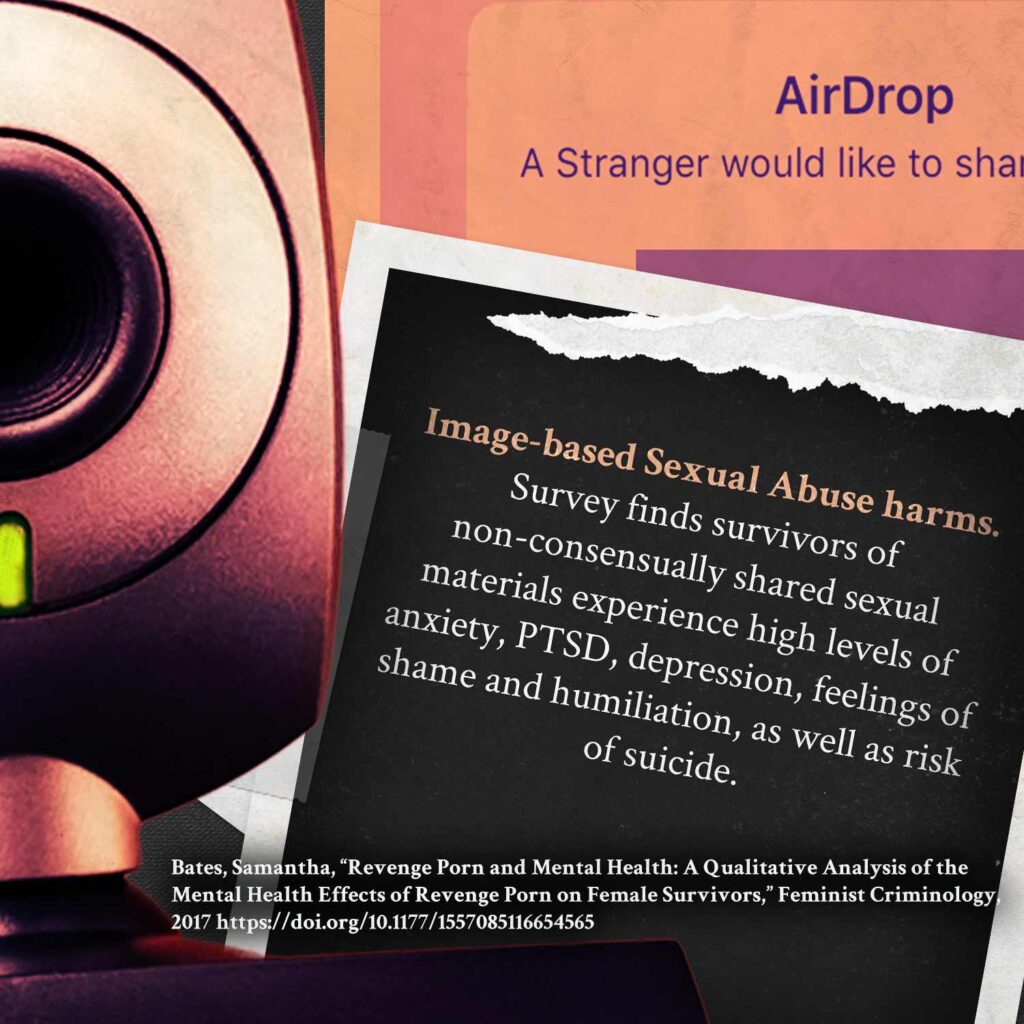

Image-based sexual abuse has a devastating impact on the mental health and well-being of its victims. The Cyber Civil Rights Initiative conducted an online survey regarding non-consensual distribution of sexually explicit photos in 2017. The survey found that, compared to people who hadn’t been victimized through IBSA, IBSA survivors had “significantly worse mental health outcomes and higher levels of physiological problems.” A previous analysis noted that such distress can include high levels of anxiety, PTSD, depression, feelings of shame and humiliation, as well as loss of trust and sexual agency. The risk of suicide is also a very real issue for victims of IBSA and there are many tragic stories of young people taking their own lives as a result. An informal survey showed that 51% of responding IBSA survivors reported having contemplated suicide as a result of the abuse.

One study interviewed 75 survivors of various forms of IBSA from the UK, Australia and New Zealand about the impact the abuse had on their lives. The survivors reported feeling “completely, completely broken” and characterized their experiences as “life-ruining,” “hell on earth,” and “a nightmare . . . which destroyed everything.”

Victims of IBSA often also face the ordeal of having their personal information shared alongside these images. An informal survey of survivors of IBSA found that survivors often had their full name published alongside the explicit imagery (59%), or their social media/network information (49%), email (26%), phone number (20%), physical home address (16%) or work address (14%).

Our Requests for Telegram

The National Center on Sexual Exploitation is calling on Telegram to urgently implement much-needed changes that would more effectively combat the distribution of image-based sexual abuse on their platform.

Implement survivor-centered reporting and response mechanisms:

We are requesting that Telegram work with survivors and organizations like NCOSE to implement survivor-centered practices and reporting mechanisms specific to image-based sexual abuse. Telegram’s policies should require immediate removal across the platform when IBSA is reported. The default should be to believe the survivor unless sufficient documentation of consent can be provided by the uploader or hosting website. Telegram should implement simple, streamlined reporting that does not put undue burden on survivors.

Prohibit pornographic content:

We are requesting that Telegram adopt strong policies against hosting hardcore pornography and sexually explicit content, due to the impossibility of foolproof age and consent verification for such content. The policy against pornographic content should be accompanied by proactive moderation and filtering solutions to enforce it.

Develop an IBSA hashing system:

We are requesting that Telegram invest time and resources to work with other companies in developing an IBSA hashing system. Such a hashing system would prevent reported nonconsensual materials from being re-uploaded to any participating platform. Promising pilot programs for IBSA hashing are already underway and we are encouraging Telegram to meet with the experts conducting them.
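
For readers unfamiliar with hashing, the following is a minimal sketch of the general idea, not a description of any pilot program or of anything Telegram has built. The names `HashBlocklist` and `handle_upload` are hypothetical, and the sketch assumes exact-match SHA-256 fingerprints for simplicity. A production system would most likely need perceptual hashing so that resized or re-encoded copies are also caught.

```python
import hashlib


class HashBlocklist:
    """Hypothetical shared store of fingerprints for reported files."""

    def __init__(self):
        # Only digests are kept; the reported file itself is never stored.
        self._reported = set()

    @staticmethod
    def fingerprint(data: bytes) -> str:
        # Assumption: SHA-256 matches byte-identical copies only.
        return hashlib.sha256(data).hexdigest()

    def report(self, data: bytes) -> str:
        """Record the fingerprint of a file a survivor has reported."""
        digest = self.fingerprint(data)
        self._reported.add(digest)
        return digest

    def is_blocked(self, data: bytes) -> bool:
        """Check whether an upload matches a previously reported file."""
        return self.fingerprint(data) in self._reported


def handle_upload(blocklist: HashBlocklist, data: bytes) -> str:
    # Hypothetical upload hook: reject anything already on the blocklist.
    return "rejected" if blocklist.is_blocked(data) else "accepted"


if __name__ == "__main__":
    blocklist = HashBlocklist()
    blocklist.report(b"bytes of a reported file")
    print(handle_upload(blocklist, b"bytes of a reported file"))
    print(handle_upload(blocklist, b"bytes of an unrelated file"))
```

Because only fingerprints are shared, platforms taking part in such a system could block re-uploads without ever exchanging the images themselves.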

Time’s up for Telegram ignoring image-based sexual abuse.

It is unconscionable that a platform which claims to care deeply about user privacy would allow countless women and children to have their privacy violated in such a horrific way, through the non-consensual sharing of their intimate images. Telegram must take action!