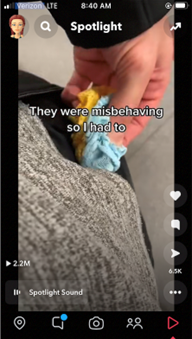

I have a fake account on Snapchat in which I pose as a 14-year-old, for the purpose of research. These are some snapshots (pun intended) of videos I was recommended on this account, within minutes of opening the Spotlight and Stories sections earlier.

Notice the video was taken from TikTok (“step brother” proceeds to gyrate against her)

Trust me, these were the most benign videos and images. I also have screenshots of:

- A joke that the difference between a girl and a mosquito is that a mosquito will “stop sucking after you slap it.”

- A young girl squirting a substance that looked like ejaculate into her mouth

- Graphic insinuation that a girl had used exercise equipment to pleasure herself

- Video of a man pretending to masturbate in a fast food kitchen and then someone being handed a burger with a clear-ish white substance on it.

- A close-up video of a man jiggling his private area while wearing short, loose shorts.

Interestingly, this is how Snap describes the sections in which these videos appeared:

Stories is the section that Snap assures parents “only features content from vetted media publishers and content creators.”

Spotlight is Snap’s “entertainment platform” and content supposedly complies with their Community Guidelines.

Clearly Snap and I have a different idea of what is acceptable content to share with teens.

NCOSE has been asking Snap to clean up the Stories section (also referred to as Discover) since 2016…and believe it or not, it has improved greatly since then. On paper, Snap seems to have good content guidelines:

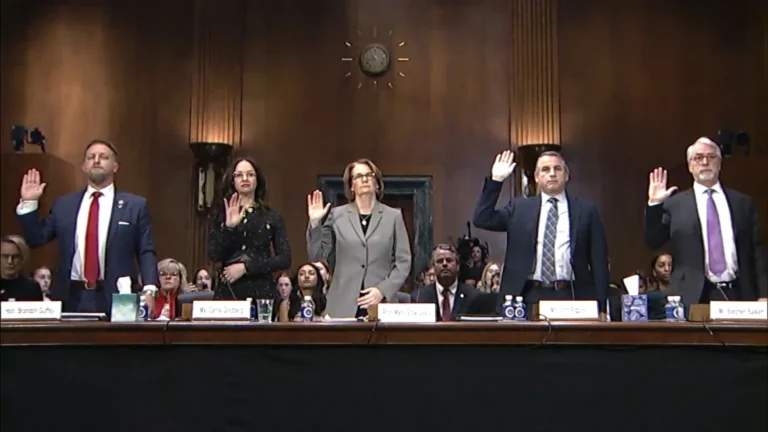

Despite this, however, Snap has continued pushing very sexualized videos (among other disturbing, inappropriate content) to minor accounts–something US Senators demanded Snap account for when brought to testify in front of Congress about the risks their platform poses to kids.

As social media and gaming apps face long overdue, much-needed, and increasing scrutiny, tech companies are scrambling to reassure policymakers, the public, and especially parents that their platforms have children’s best interest at heart.

In this vein, Snap announced new Content Controls last week that “will allow parents to filter out Stories from publishers or creators that may have been identified as sensitive or suggestive.”

Sounds good. We certainly endorse tools to moderate content on platforms.

BUT HERE’S THE FINE PRINT: This feature is only available through the recently released Family Center and only the parent can turn it on. It’s not on by default and is not available to any teen directly.

So is this new feature actual progress?

At NCOSE we usually celebrate (we’ve even been accused of over-celebrating) every step a corporation makes to improve child online safety.

But for those of us advocating for child online safety–our patience is quickly wearing thin.

As seems to be the trend for tech when it comes to child protection, instead of making their platform inherently safer (e.g. having high safety settings on by default), they shift the burden of navigating and implementing safety tools onto caregivers and the kids themselves. Then they proceed to parade their “child protections” to policymakers, parents, and the press–when in fact they’ve relieved themselves of accountability for actual change.

The result of failing to default platforms to high levels of safety is that the safety tools are effectively only available to those kids who have the privilege of involved, informed, tech-savvy parents…or any parents at all. In other words, the protections are limited to the kids who are likely least at risk for exploitation and other harms in the first place.

That’s what Snap did here.

So is it an improvement? Suuuuuuure. But one that will likely affect a very small number of kids. Most minors on Snap will likely be served what I shared above. I just can’t call it progress. The new Content Controls won’t do much (if anything) to prevent the vast majority of kids on Snap from accessing inappropriate content or being accessed by dangerous people, or to make them safer overall on Snap.

Like a band-aid on a gushing head wound

“Which do you think is the most dangerous app?” I constantly ask law enforcement, child safety experts, lawyers, survivors, youth…Without fail, “Snap” is in the top two, and usually #1.

Just this week, a Washington Post journalist posing as a 13-year-old broke this story: Snapchat tried to make a safe AI. It chats with me about booze and sex.

The subheading? Our tech columnist finds Snapchat can’t control its new “MY AI” chatbot friend. Tech companies shouldn’t treat users as test subjects–especially young ones.

We wish this commonsense concept were better recognized by the so-called safety teams in Big Tech.

Here’s a little excerpt from the article: in another conversation with a supposed 13-year-old, My AI even offered advice about having sex for the first time with a partner who is 31.

Just in the past few months, Snap was named by three separate child protection agencies as the top app together with Instagram for sextortion, one of the top three places children were most likely to view pornography outside of pornography sites, and the number one online site where children were most likely to have a sexual interaction. Multiple grieving families are suing Snapchat for harms and even deaths of their children because of sex trafficking, drug-related deaths, dangerous challenges, severe bullying leading to suicide, and other serious harms originating on Snap.

This is what is happening on a platform that is used at least once a day by 42% of 9-17 year olds, putting it in the top 5 with YouTube, TikTok, Instagram, and Facebook (P.S. Kids under 13 aren’t even supposed to be on Snap or other social media).

I want to be SUPER clear here: Stories and Spotlight are likely the “safest” and most “benign” sections on Snapchat (and yes, you should find that very concerning). And while they are certainly problematic, they are not the most dangerous or risky features on Snap by a long shot.

Yet instead of making significant changes that would actually stem the most dangerous aspects of Snap, they decided to focus on window dressing policies about the Stories section instead.

I’m afraid that parents and policymakers who may not know the app well will be led to believe (and this is a very smart move by Snap) that this is the worst content their kids will come across on the app, not understanding that Snap is dangerous by its very design.

In short, Content Controls is a distraction, a diversion from the greatest risks on Snap.

How Family Center and Content Controls specifically fall short

Needless to say, we strongly advise parents to do their homework about Snap before deciding to let their children use the platform.

And if they are on Snap, please do use Family Center and turn on the Content Controls–because when it comes to protecting our kids online, we need to use every tool we can…however flawed. At the very least, it may spur some conversations about social media and the content your kids are consuming.

Keep reading for a more detailed assessment of how Family Center and the Content Controls in particular fall short and what we would like to see Snap change to make all kids using their platform safer.

1. Problem: Only accessible to parents (not even teens) through Family Center

While Content Controls certainly are an important and appealing feature, the tool is not available to all minors or even adults who may want to use it. This tool only appears as an option to those teens who give their parents permission to link Snap accounts through Family Center and then the parents toggle this feature to on.

Why wouldn’t this feature be available to everyone–especially all minors on Snap? And why aren’t they given the agency to turn this feature on themselves (instead of the parents having to do it)? I speak with a lot of young people, and they are sick of being fed hypersexualized, exploitative, sensationalized content. And even if some youth think they want to consume this type of garbage–does Snap need to push it on them knowing it can be harmful to their health and well-being?

The only explanation we have is that Snap is more concerned about angering their advertising partners and risking profit loss than protecting and listening to their young users.

Recommendation: Make Content Controls available to everyone, at the very least to all teens.

2. Problem: Not on by default

While several of Snap’s competitors and tech companies in general are thankfully moving toward turning on higher safety and content control settings as the default for minors and even adults (thank you Google, Instagram, TikTok), Snap made the choice to leave this feature off. Since research shows that most people leave device and app defaults as they are upon set-up, it’s likely that many parents won’t toggle this on…if they even bother signing up for Family Center in the first place.

Recommendation: Turn “Content Control” on by default in Family Center and anywhere else it is made available in the future. Consider locking it to “on” for users under 16 years old as they are new on their digital journey and deserve greater protections.

3. Problem: Parents are misinformed about the content available on Spotlight or Stories and can’t even see their children’s content through Family Center

It’s even less likely caregivers will turn this feature on because most parents don’t have a good understanding of the type of material that surfaces on Stories or Spotlight. The descriptions Snap gives about what is found in these sections are almost laughably disingenuous. The way it’s described in their Family Guide, you’d think it was highly educational. And the Apple App Store and Google Play age ratings and app descriptions are downright deceptive.

Furthermore, parents can’t see their children’s Spotlight or Stories sections through Family Center (of course, in theory they could just have their kids show them directly on the app). How are parents to make informed decisions not only about using Content Controls, but about whether Snap is safe and healthy for their kids, if they can’t see the content they are consuming?

Recommendation: Allow parents to view kids’ Spotlight and Stories sections. Provide actual and accurate visual examples and descriptions of the type of content that not only surfaces but is common in these sections to parents in the Family Guide and/or Family Center.

4. Problem: Content Controls do not apply to Snaps (the majority of teen activity on Snapchat), Chat, Search or other content kids may access on Snapchat.

The Content Controls only apply to Stories/Discover. In fact, there are no protections for minors from seeing hardcore pornography and sexually explicit images and videos in their snaps. Posing as a teen girl on Snapchat with a public account, I consistently receive masturbation videos and nude images (almost exclusively from males) and can easily find and follow users posting pornography.

In striking contrast, dating app Bumble has made the principled decision to proactively blur sexually explicit images for their adult clients–and made the technology open and free! It truly surpasses understanding why apps like Snap that are wildly popular with teens and are known for extensive sexually explicit content and interactions refuse to adopt such technology.

And quite frankly, sexually explicit images should be completely blocked from being sent and received by minors. Period. If you actually need convincing, here’s a starting point.

Recommendation: Utilize existing technology to proactively blur sexually explicit images in snaps for adults. Block sexually explicit images and videos from being sent or received by teens (at the very least those 16 and under).

Too little, too late

We’re not saying Snap hasn’t made any progress in the past few years. And we’re not saying there aren’t dedicated people within Snap who truly are doing everything they can to make it safer. There are.

But while we’ve amplified and celebrated the improvements Snap has made, our pervasive feeling is: too little…and for many, many young people…much too late. The evidence and data showing the harms of Snap to children continue to increase–despite the changes Snap has made. Kids have literally died because of this app.

Snap is just not making significant enough progress that is keeping up with the constantly accelerating drumbeat of the grave harms perpetuated through its app.

ACTION ALERT

Join us in demanding Snapchat make fundamental changes to its design in order to truly protect its young users – including making Content Controls available to all teens, blocking My AI for minors, and using existing technology to blur sexually explicit images.

P.S. For further insight, I also recommend reading this blog by the Organization for Social Media Safety: Snapchat’s Family Center: A New Talking Point Not A Tool