I have to admit: when I was first asked to research Spotify as a potential Dirty Dozen List target, I may have rolled my eyes a little bit. Spotify is just for streaming music and podcasts, right? How bad could it be?

. . . As it turns out, pretty bad.

Pornography on Spotify

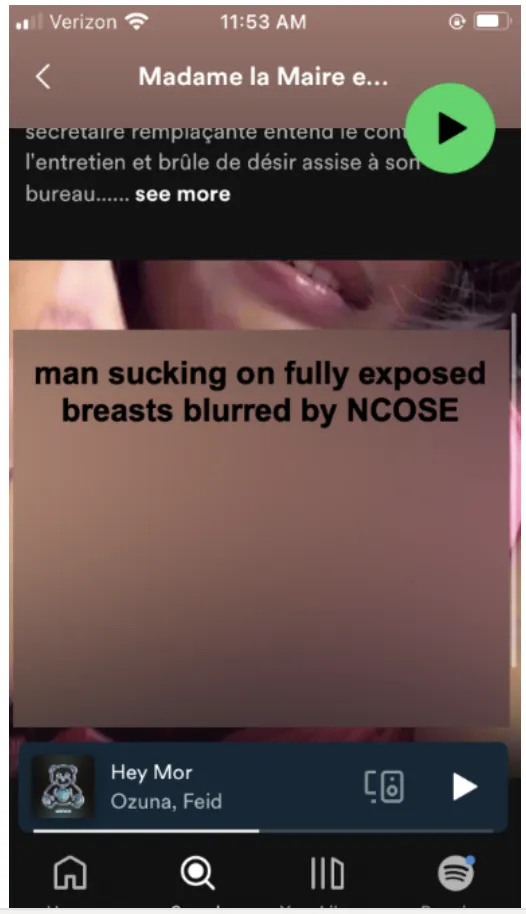

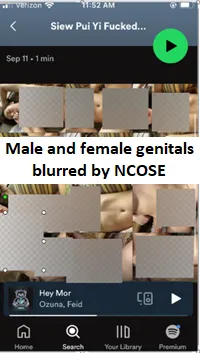

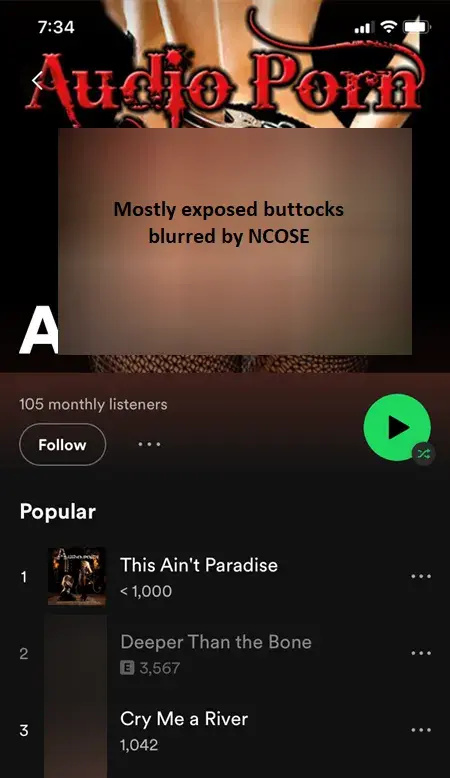

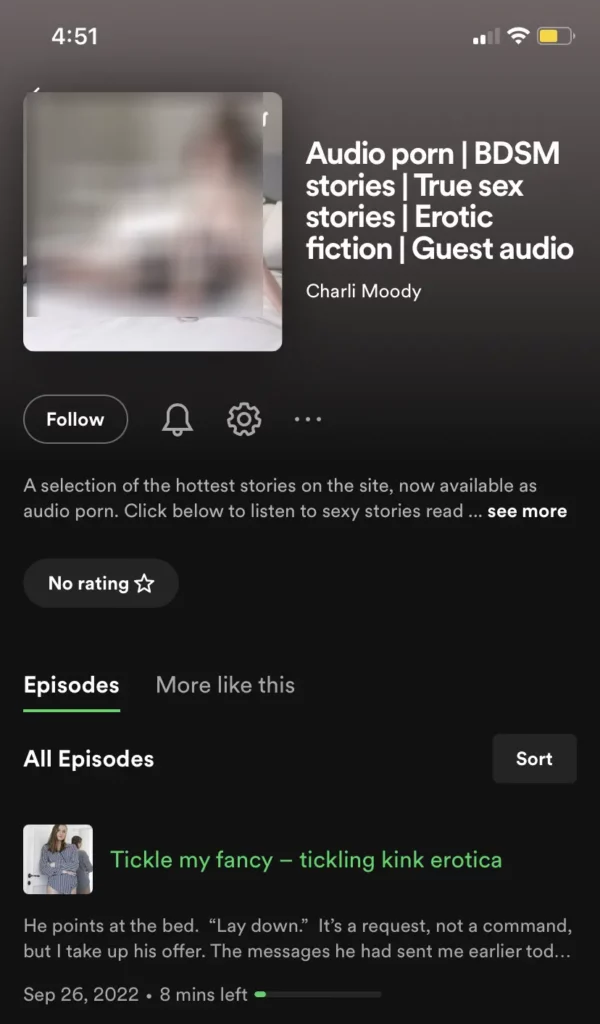

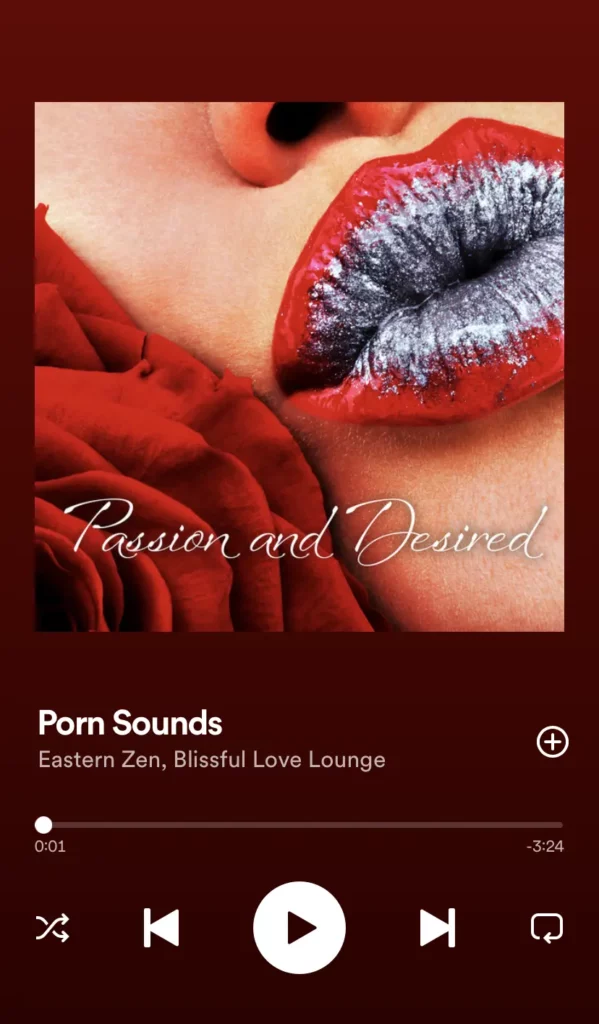

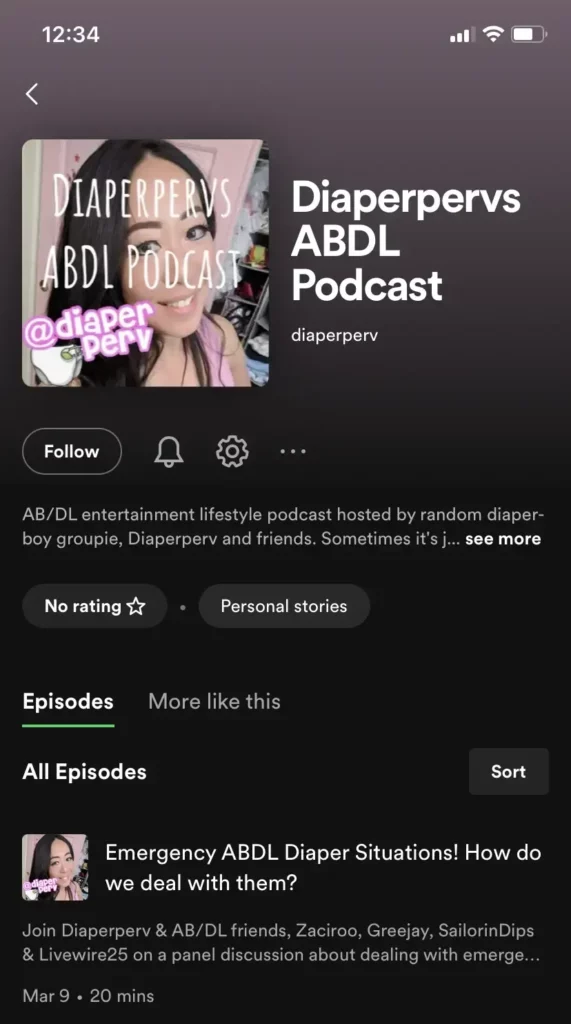

To my shock, a single quick search on Spotify revealed that music and educational podcasts aren’t the only things on the streaming app. There’s also an abundance of pornography.

This can be in the form of sexually explicit images (e.g. profile images, playlist thumbnails), audio pornography (e.g. recordings of sex sounds or sexually explicit stories read aloud), or written pornography (e.g. in the descriptions for pornography podcasts).

What really outraged me was that, for the most part, this pornography was not blocked by Spotify’s explicit content filter, even though the company assures parents that this filter will block any content which “may not be appropriate for kids.” The audio pornography is rarely caught by the filter, and the visual and written pornography is never caught, because the filter is not designed to block images or descriptions. Opting to block explicit content only stops kids from playing the content; everything remains visible.

Spotify’s reviews on Common Sense Media come overwhelmingly from parents expressing shock and dismay after discovering pornography on the app. Many of these parents even paid for premium subscriptions in order to control the explicit content filter on their child’s account, only to discover that, contrary to Spotify’s deceptive advertising, this filter does next to nothing to protect their children from pornography.

“Totally shocked and appalled by discovering porn podcasts are not sensored by the explicit content function. . . What on earth are Spotify thinking???”

“BEWARE! This app has PORN, not just PORN MUSIC, but sexually explicit podcasts and graphic images.”

“Parents need to be aware that the spotify “family plan” DOES NOT block explicit content as it claims. Innocent children just trying to listen to music can very easily and accidentally come across the audio porn and “podcasts” that spotify has on their site.”

Further, much of this content should not even be on Spotify to begin with, as the company claims to prohibit “pornography or visual depictions of genitalia or nudity presented for the purpose of sexual gratification.” However, it is clear that the company is doing little, if anything at all, to proactively enforce this prohibition. The sheer ease with which pornographic content can be found suggests that Spotify is not actively looking for it in order to remove it. I also spoke to a podcaster who shared that Spotify always flagged any of her episodes which mentioned the COVID vaccine (regardless of what was said), but that episodes which had the word “pornography” in the title were not flagged. What this shows is that Spotify can and does rigorously monitor potentially problematic content in some cases but is not interested in doing so when it comes to its prohibition on pornography.

Child Sexual Exploitation on Spotify

As if the existence of pornography on Spotify isn’t shocking enough, the platform has also been used for the purpose of child sexual exploitation.

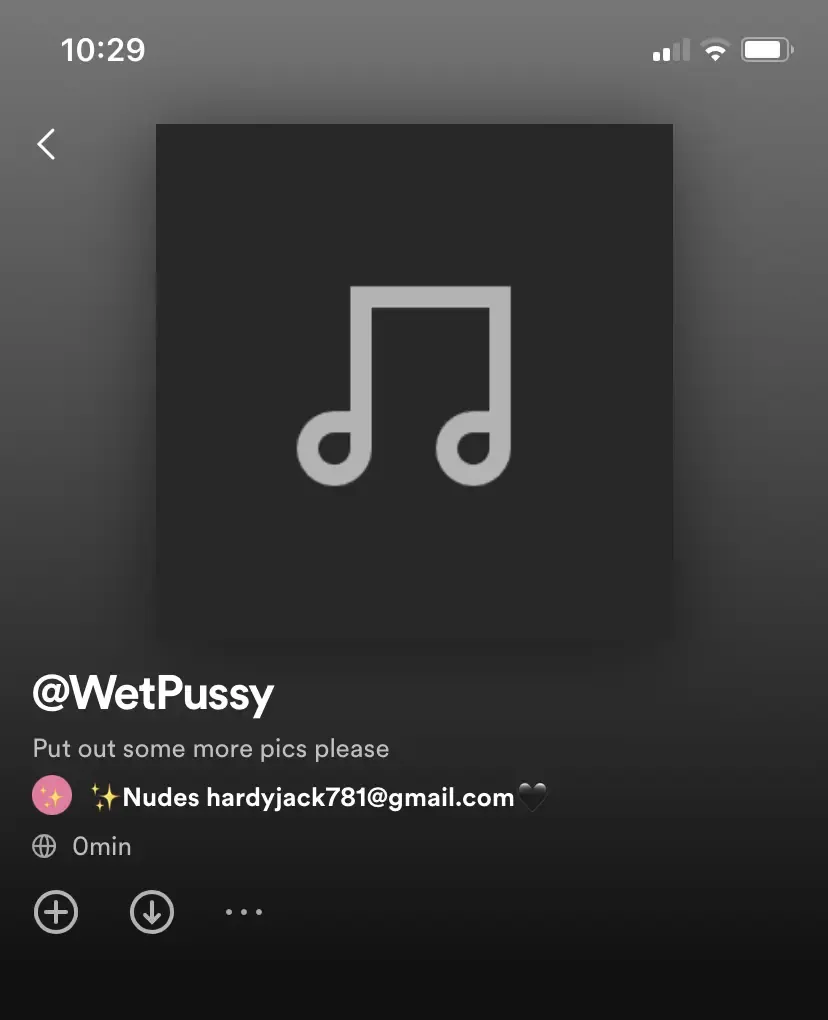

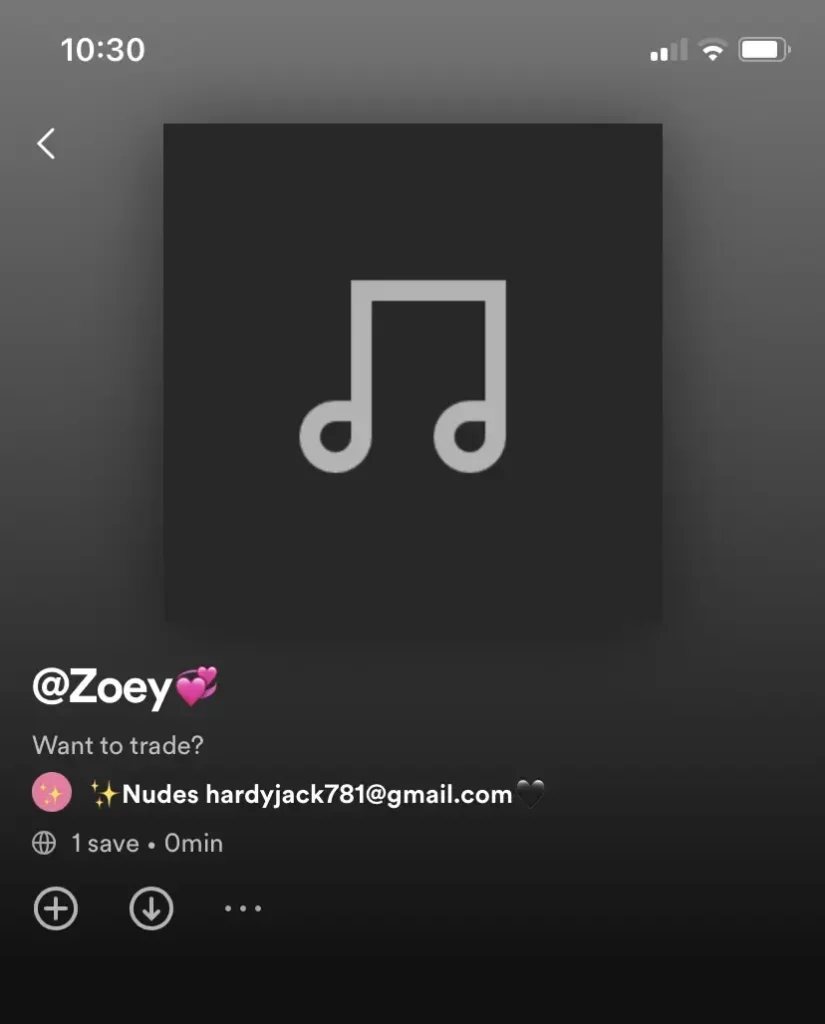

In January 2020, a high-profile case of an 11-year-old girl being groomed and sexually exploited on Spotify made news headlines. Sexual predators communicated with the young girl via playlist titles and encouraged her to upload numerous sexually explicit photos of herself as the cover image of playlists she made. They also exchanged emails via this method of communication.

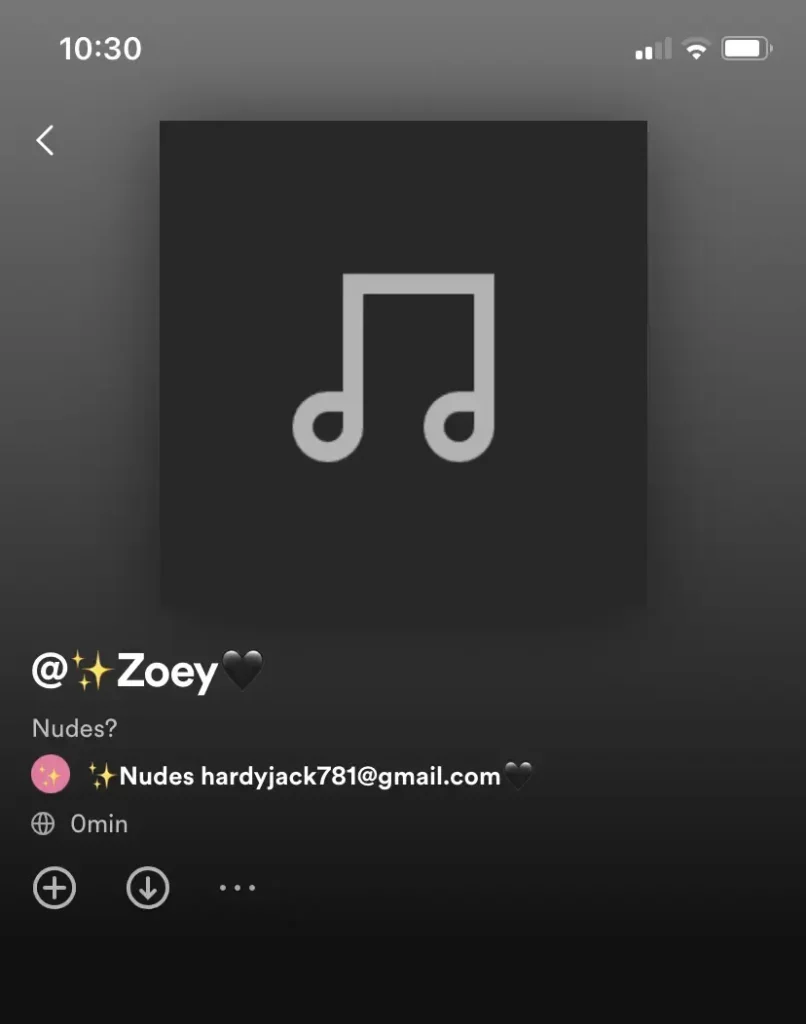

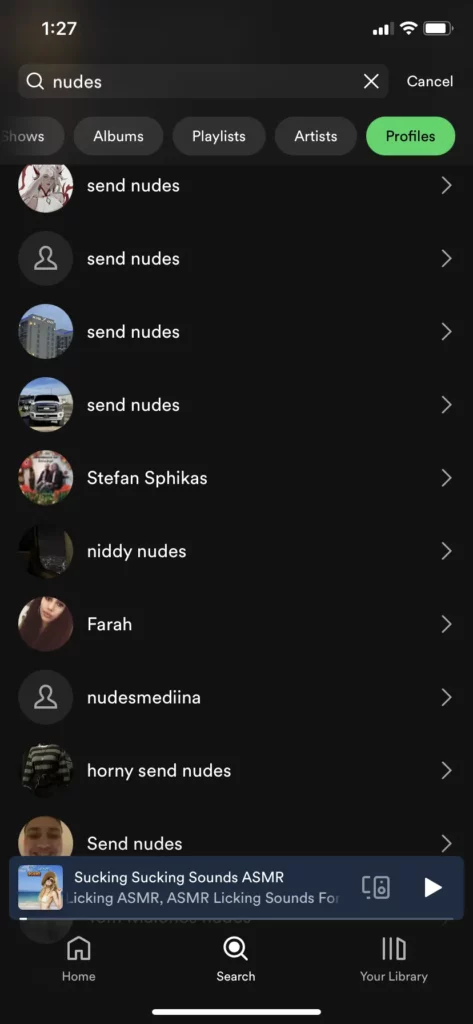

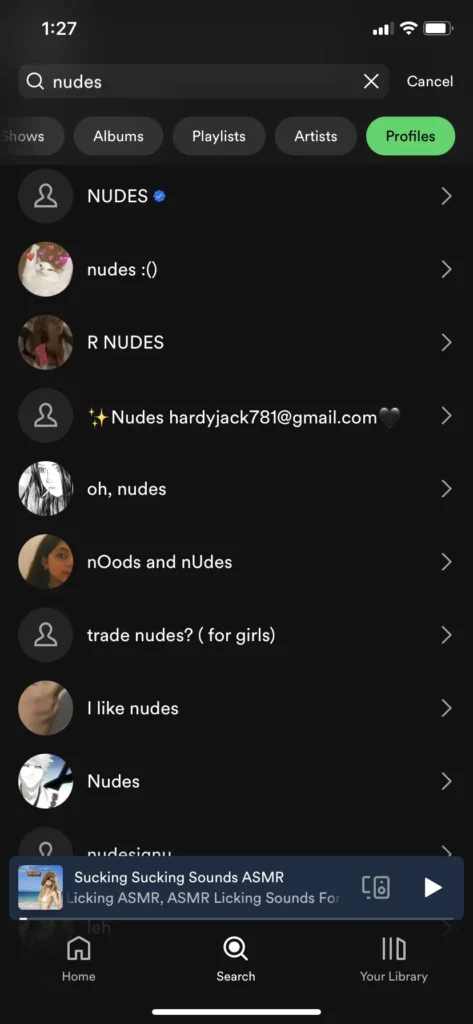

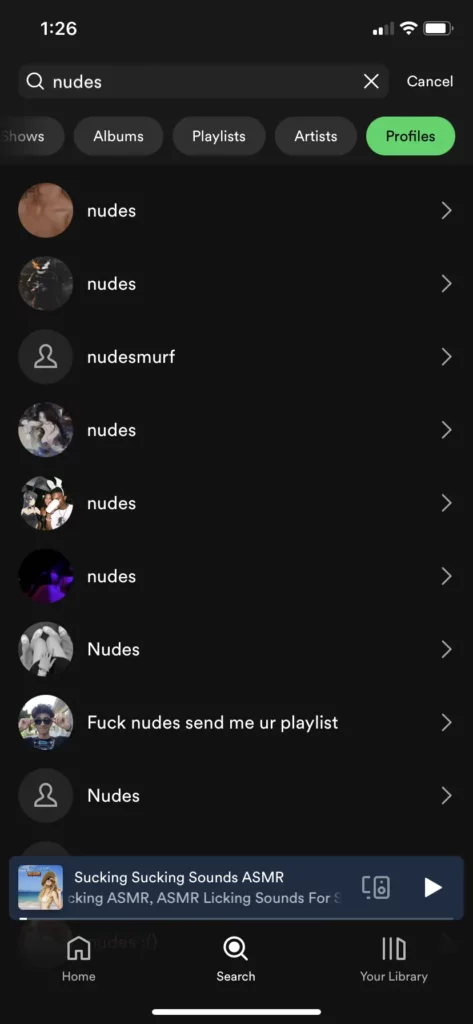

When I looked for myself, I easily found multiple profiles on Spotify that seemed to be or have been dedicated to soliciting or sharing “nudes.” One of these profiles shared an email address and tagged other users in playlists, asking them to send nudes.

(See also survivor-advocate Catie Reay’s TikTok video, which delves into the issue of grooming on Spotify.)

Further, when I was gathering evidence of pornography on Spotify, one of the first results was a pornography podcast for which the thumbnail appeared to be child sexual abuse material (CSAM, the more apt term for “child pornography”).

After reporting this content to the National Center for Missing and Exploited Children (NCMEC), I attempted to report it to Spotify. What ensued was an appalling goose chase which, in sum, revealed that Spotify has no clear reporting procedure for child sexual exploitation. I was forced to make a general report for “dangerous content” and did not even have an option to add more details in the report explaining that it was for child sexual abuse material.

The report was made three weeks ago. Currently, the content is still active.

Further, NCMEC’s 2022 annual report found that, more than 90% of the time Spotify reported content to them, the report “lacked adequate, actionable information.” In other words, the reports “contained such little information that it was not possible for NCMEC to determine where the offense occurred or the appropriate law enforcement agency to receive the report.”

This is incredibly egregious, especially considering the recent media attention over the sexual exploitation of the 11-year-old girl, to which Spotify responded that they take the problem of child sexual abuse material “extremely seriously” and “have processes and technology in place” to address it.

Earth to Spotify: A streamlined reporting procedure for child sexual exploitation is the most basic, bare-minimum safety measure!

Content that Normalizes Sexual Violence, Abuse, and Incest

My colleagues and I were deeply disturbed by the horrific content we found on Spotify that normalized, trivialized, and even encouraged sexual violence, abuse, and incest. This content was appallingly easy to find, and even surfaced when we weren’t looking for it.

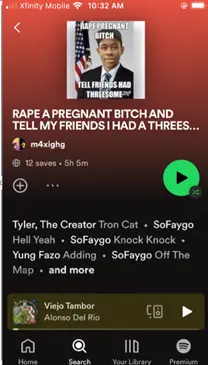

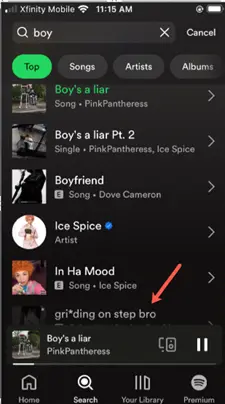

For example, one NCOSE researcher was attempting to search for rap music—but the term “rap” instead led her to content joking about “raping a pregnant b—ch.” In another case, she tried to search for the song “Boy’s a liar,” which is popular with teens. Simply typing the single word “boy” led her to results normalizing “step” incest. These results were on a newly made account for a 13-year-old, so search history was not affecting the results.

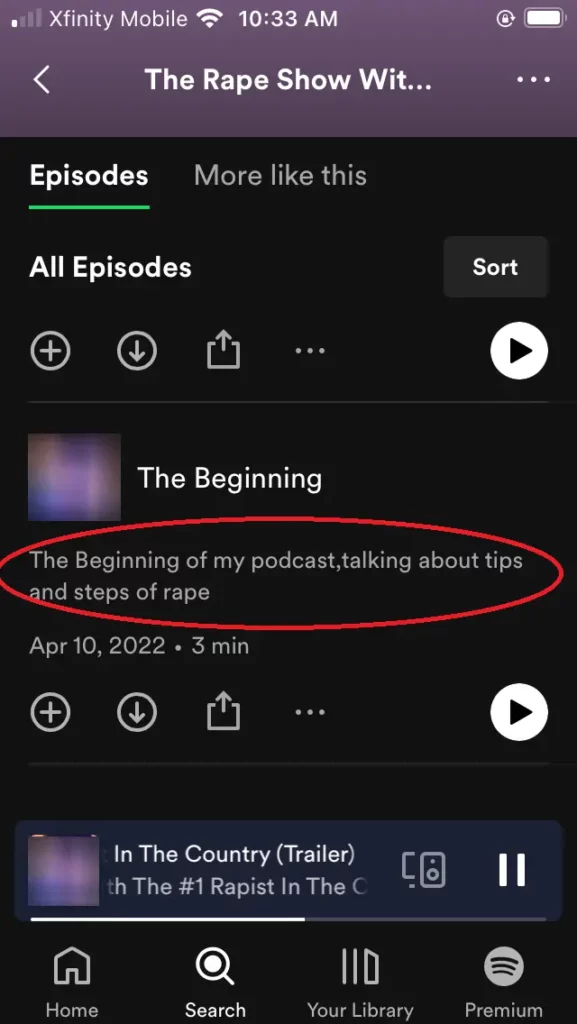

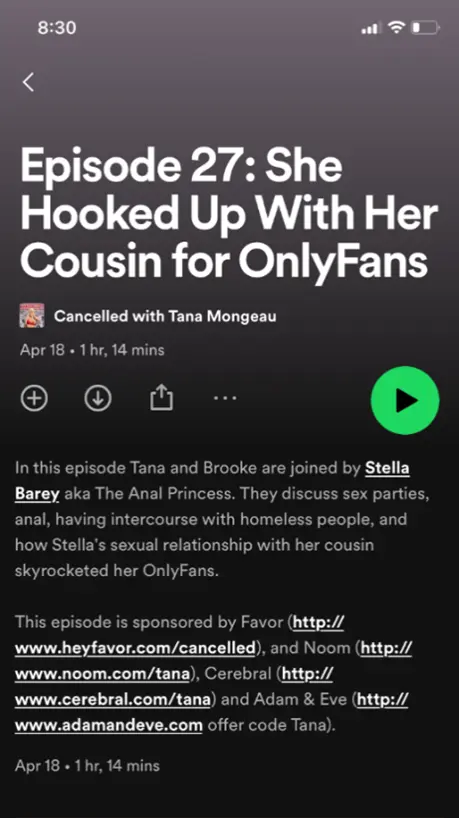

Other content we found included a podcast which walked people through the steps to commit rape and not get caught by the authorities, animated imagery of what appeared to be rape, audio pornography depicting child sexual abuse, content encouraging adults to roleplay child sexual abuse as a fetish, content normalizing cousins having sexual relations, and much more.

On paper, Spotify prohibits “advocating or glorifying sexual themes related to rape, incest, or beastiality”—but again, these policies are clearly poorly enforced.

Why Spotify is on the Dirty Dozen List

By placing Spotify on the 2023 Dirty Dozen List, NCOSE is not making a statement that Spotify is the worst corporation out there. The Dirty Dozen List is not a “top 12” list, and we weigh numerous factors in deciding which entities to feature. In the case of Spotify, we think it is important to raise awareness of the dangers because it is a platform that most people trust, making it easy for the harms to catch kids and parents off guard. Further, we think Spotify can (and must) make some quick, easy changes to greatly improve safety on its platform. Placing Spotify on the Dirty Dozen List allows you to join us in calling on the company to make those changes!

ACTION: Call on Spotify to Clean Up Its Act!

Please take 30 SECONDS to complete this quick action form, calling on Spotify to better protect kids and to stop facilitating sexual abuse and exploitation!