Once known as a “hunting ground for predators,” the wildly popular short-form video app TikTok has transformed itself into a model for fighting sexual exploitation and abuse on its platform.

Featured on NCOSE’s 2020 Dirty Dozen List for rampant grooming and exploitation of children and on the 2021 DDL for highly sexualized content, TikTok has instituted such sweeping and significant changes this past year that in many areas it now serves as an industry standard for online child safety. They’ve defaulted most safety settings for minors, removed high-risk features (like direct messaging) for 13–15-year-olds, become the only app with PIN-protected caregiver controls, and instituted the most robust Community Guidelines we’ve seen from any corporation. They’ve also started releasing their Transparency Report on a quarterly basis (versus every six months) to increase their own accountability.

And they’ve kept up the trend.

New TikTok Changes Fight Sexual Exploitation and Abuse

Last Friday (July 9), TikTok announced that it will automatically remove “violative content” such as videos showing nudity or sexual activity or threatening minor safety. TikTok’s current process uses technology to identify and flag potential violations for further review by their Safety Team. In the announcement, Head of US Safety Eric Han explains that in the US and Canada over the next few weeks, TikTok will begin using technology to a greater extent to automatically remove content upon upload in areas where it has the highest degree of accuracy: minor safety, adult nudity and sexual activities, violent and graphic content, and illegal activities and regulated goods. Uploaders are notified if their video has been removed and can appeal the decision. Repeat offenders will face escalating penalties, up to and including a permanent ban. This move shows a genuinely proactive effort by TikTok to implement their Community Guidelines for the good of their users, rather than merely having them on paper to limit their own liability.

TikTok Paves the Way for Big Tech

Aside from the obvious win that this policy is likely to dramatically reduce the number of people (including minors) subjected to sexually explicit material, this move yet again has TikTok moving the needle on what can and SHOULD be expected of Big Tech:

Recognition of Harm (to viewers and to their own employees)

TikTok explicitly stated that reviewing the type of content outlined (minor safety, adult nudity and sexually explicit material, etc.) can be distressing to their employees, showing a recognition that this content can be harmful to both adults and children.

While human moderation is still necessary in many cases, automated removal will further protect their Safety Team from the negative emotional and psychological effects that many content moderators suffer. This change exemplifies an empathy and care for their employees that does not seem to be the norm in Big Tech. It’s the first time we’ve seen a tech company explicitly note an improvement being made to protect their staff. It also seems to be a smart business move to use technology in a way that frees up a greater number of their human reviewers to address more “nuanced” content.

Erring on the Side of Safety

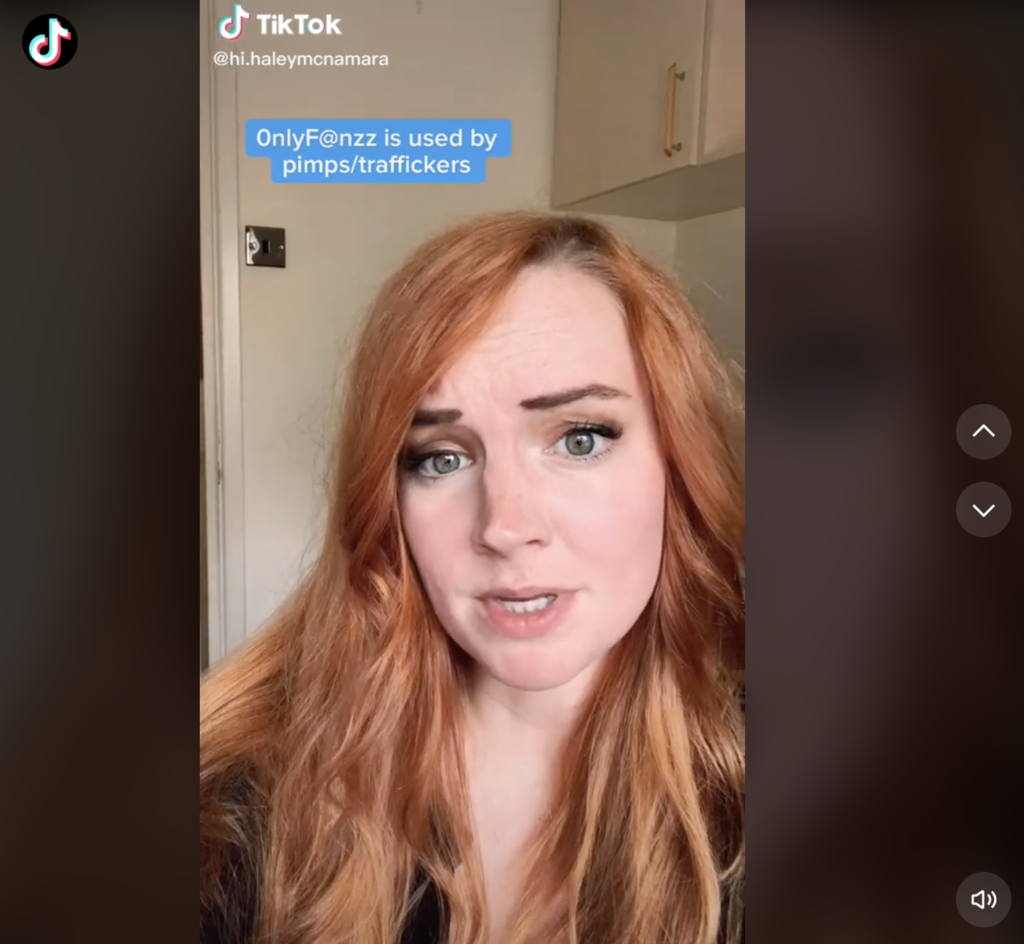

One of my colleagues posted a TikTok video about the harms of OnlyFans, and under this new policy it was quickly removed. That is especially significant because NCOSE has called out TikTok for the vast number of videos promoting OnlyFans, and we’ve asked them to prioritize removing those videos because it is such a dangerous site, especially for minors.

At first, we were frustrated, but after brief reflection we cheered. She was able to appeal the removal and it was back up in no time.

Why did we cheer? Because we’d love to see more online platforms err on the side of removing potentially violative material rather than allowing it to proliferate while putting the onus on users (including minors) to report harmful material after they’ve already been exposed to it. A quick appeals process is possible, and we hold that if something is worth posting, then it’s worth the user’s time to appeal for the greater good of elevated safety measures.

Let’s be clear: there is still a lot of problematic material on TikTok. We found several OnlyFans-related videos with a quick search. And we also know this approach can backfire if there isn’t a swift appeals process. Survivor leaders and other allies have shared that their material is often blocked or removed on other platforms due to the nature of their work and that the appeal process goes nowhere, effectively silencing their voices on social media. But if TikTok can prioritize this and figure out a way, then larger, better-resourced tech companies should be able to as well.

Creativity and Entertainment Without Exploitation

TikTok’s mission is to “inspire creativity and bring joy.” The announcement reiterated their commitment to bringing together a “diverse, global community fueled by creativity” that can “express themselves creatively and be entertained in a safe and welcoming environment.”

Too many individuals and corporations have bought into the myth of our pornified culture that nudity and sexually explicit content are not only acceptable but necessary for any type of medium to be entertaining. TikTok is rejecting the notion that exploitation is entertainment.

TikTok not only hosts influencers; with nearly a billion monthly active users, it is an influencer in itself. Young and older people alike take note when platforms legitimize, or refuse to legitimize, certain cultural messages (as popular as they may be). We applaud TikTok for being vocal about its values and for making a concerted and genuine effort to foster the environment it claims to seek.

We hope to see greater use of technology by TikTok and other tech companies to combat, rather than perpetuate, sexual abuse and exploitation in all areas of their platforms (content, comments, messaging, etc.). We also hope to see them err on the side of removal, protect the well-being of their users and employees, and reject the notion that nudity and sexually explicit content are entertainment.