For Miread*, a young working professional and aspiring politician, reputation was everything. She was the youngest elected official in Florida, serving as a member of her city commission at age 21. Her future was bright.

One day, that was all taken away.

Miread discovered nude images of herself circulating online. Only, she had never posed for any nude photos.

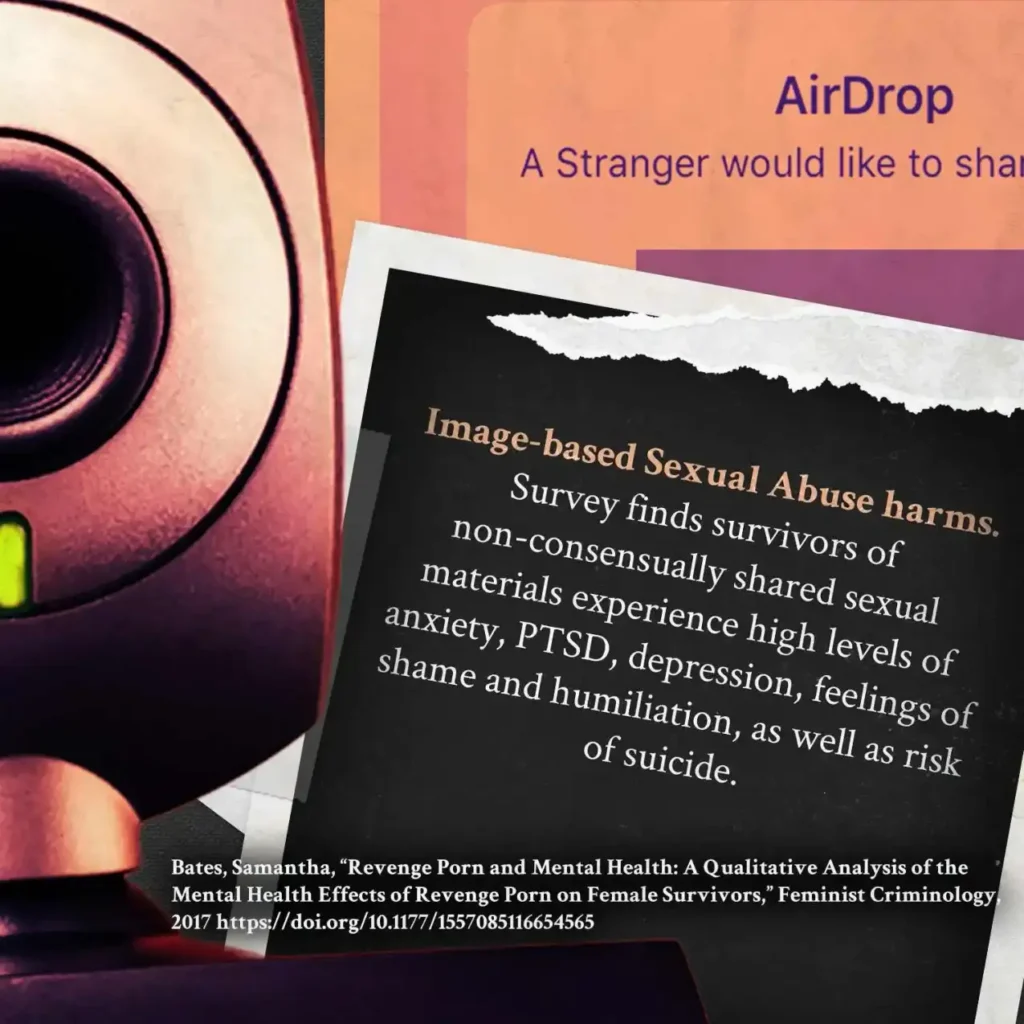

Someone had stolen innocuous images from her social media and used AI to strip them of clothing, forging nude photos of Miread. Like so many others, Miread was victimized through a growing form of violence called image-based sexual abuse (IBSA).

What is Image-Based Sexual Abuse?

Image-based sexual abuse (IBSA) is the sexual violation of a person committed through the abuse, exploitation, or weaponization of any image depicting the person. It includes but is not limited to the creation, distribution, theft, extortion, or any use of images for sexual purposes without the meaningful consent of the depicted person.

For example, IBSA could involve an ex-partner taking sexually explicit photos that were shared privately and posting them online. Or it could involve using artificial intelligence to generate sexually explicit images of people without their consent, like what happened to Miread.

The possibilities are numerous. You can learn more about the different types of IBSA here.

Recent Victories in the Fight Against IBSA

Historically, survivors of IBSA have had tremendous difficulty obtaining justice for what was done to them, because the law has lagged behind this emerging form of sexual violence. When Miread went to law enforcement and lawyers, she was told there was little they could do.

But with your help, we are changing the law and giving survivors of IBSA a path to justice!

Over the past few months, we have achieved the following victories:

- The TAKE IT DOWN Act unanimously passed Committee on July 31st: This bill requires online platforms to swiftly remove IBSA after it is reported and criminalizes the uploading of IBSA.

- The DEFIANCE Act unanimously passed the Senate on July 23rd: This bill creates a path to justice for survivors of AI-generated IBSA.

- The SHIELD Act passed the Senate on July 10th. This bill makes it a criminal offense to knowingly distribute (or attempt/threaten to distribute) sexual images of someone without their consent.

- On June 20th, Massachusetts became the 49th state to criminalize image-based sexual abuse.

Tech companies are another big piece of the fight against IBSA, because they facilitate its proliferation. But if we can move them to be part of the solution, rather than part of the problem, we will have a tremendous impact! With your help, we’ve seen the following victories with tech companies in the past few months:

- Google introduced major updates that make it easier to remove or downrank IBSA in search results.

- Apple, LinkedIn, and Google all removed “nudifying apps” that we brought to their attention, or ads that promote these apps. These apps allow people to take innocuous images of women and “strip” them of clothing.

- The CEO of Telegram, a site notorious for IBSA, was arrested in France and the platform is being investigated.

We’re so grateful to you for making all of this possible! The positive impact you’re having on survivors is something you can be incredibly proud of.

Prevalence and Harms of IBSA

IBSA is alarmingly common and can impact anyone. A 2020 international study found that 1 in 3 participants had experienced some form of IBSA victimization.

As more advanced forms of technology are developed, specifically AI, instances of IBSA are becoming even more frequent. According to a December 2023 research study by Home Security Heroes, the availability of AI-generated IBSA (commonly called “deepfake pornography”) online increased by a staggering 550% between 2019 and 2023. The same study found that women were the victims in 99% of all AI-generated IBSA.

The traumatic impacts of IBSA on survivors are severe and perpetual. A survey conducted by the Cyber Civil Rights Initiative found that, compared to people without IBSA victimization, victims had “significantly worse mental health outcomes and higher levels of physiological problems.”

What Can You Do?

While we have had many promising victories, there is still more work to be done. Please help push forward solutions to IBSA by taking the 2 quick actions below!

1) Ask Congress to Pass Legislation Combatting IBSA!

It is encouraging that we have seen so much progress on federal bills combatting IBSA. Help us push these crucial bills to the finish line!

2) Call on Microsoft’s GitHub to Stop Facilitating IBSA!

Microsoft’s GitHub is arguably the #1 tech company facilitating AI-generated IBSA. Join us in urging them to change!