“Boys for Sale.”

This was the text displayed on a Reddit post found by an NCOSE researcher this month. The post also featured an image … An image of an adult man, covering the mouth and grabbing the genital region of an underage boy. The underage boy was wearing only his underwear. Across his bare chest, there were clearly visible red marks of abuse.

This is the kind of horrific content that can be found easily on Reddit. It is why we named Reddit to the Dirty Dozen List—an annual campaign calling out 12 mainstream contributors to sexual exploitation—for the fourth year in a row. It is why we’re asking YOU to once again join us in demanding Reddit reform.

What is Reddit?

Reddit is one of the most popular discussion platforms on the Internet right now, with about 73 million active users each day. Unfortunately, it is also a hub for child sexual exploitation, image-based sexual abuse, hardcore pornography, and more.

While Reddit has made numerous public attempts to clean up its image, including taking steps to blur explicit imagery and introducing new policies around image-based sexual abuse and child safety, these have not translated into the proactive prevention and removal of such abuses in practice. Since Reddit went public on March 21, 2024, all these abuses are now being monetized even further.

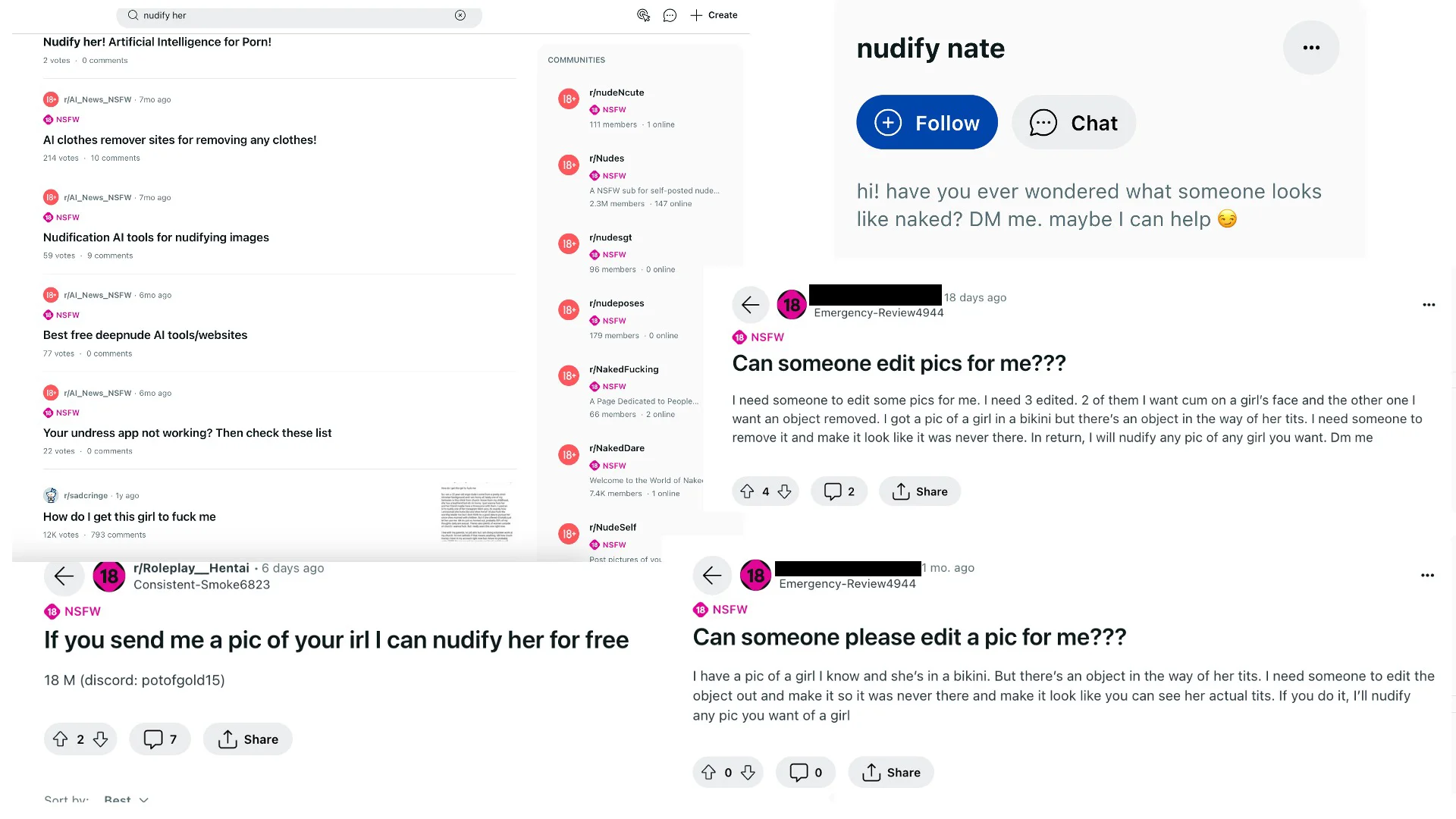

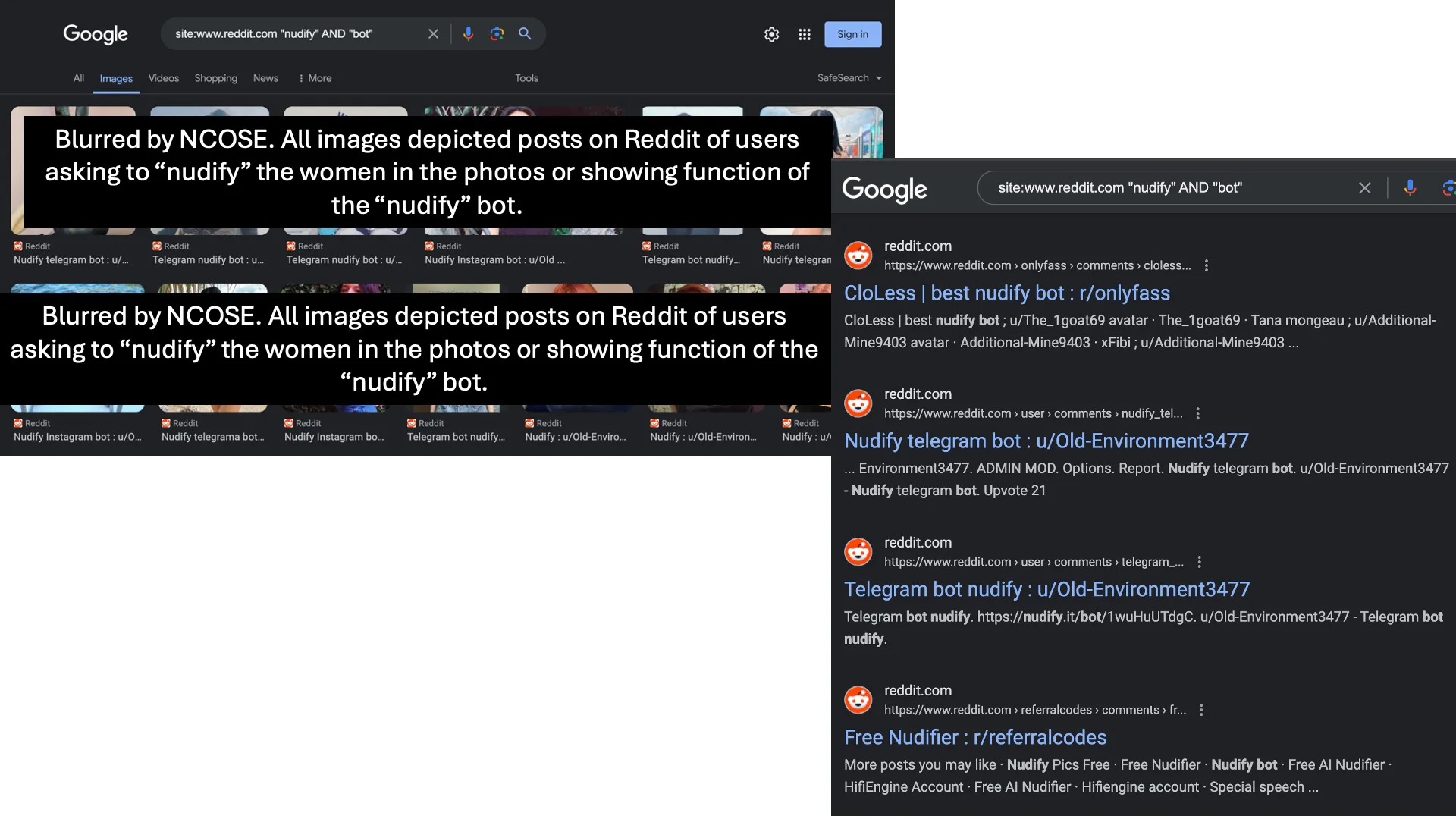

Image-based Sexual Abuse (IBSA) and Deepfake Pornography on Reddit

Image-based sexual abuse (IBSA) is the creation, theft, extortion, threatened or actual distribution, or any use of sexualized or sexually explicit materials without the meaningful consent of the person depicted.

Following Reddit’s placement on the 2021, 2022, and 2023 Dirty Dozen lists, the company finally made some long-overdue changes to its policies governing IBSA. However, despite Reddit’s new policy literally titled “Never Post Intimate or Sexually Explicit Media of Someone Without Their Consent,” NCOSE researchers have found that Reddit is still littered with IBSA, including AI-generated pornography (sometimes called “deepfake pornography”).

Many posts on Reddit offer paid “services” to create deepfake pornography of any person, based on innocuous images someone submits.

Research has shown that the number of ads for “undressing apps” (i.e., apps that take innocuous images of women and strip them of their clothing, thereby creating deepfake pornography) increased more than 2,400% across social media platforms like Reddit and X in 2023.

Reddit’s anemic content moderation, as well as its persistent refusal to implement meaningful age and consent verification for sexually explicit material (something NCOSE has been pressing the company to do for years), allows this abusive content to proliferate across the platform.

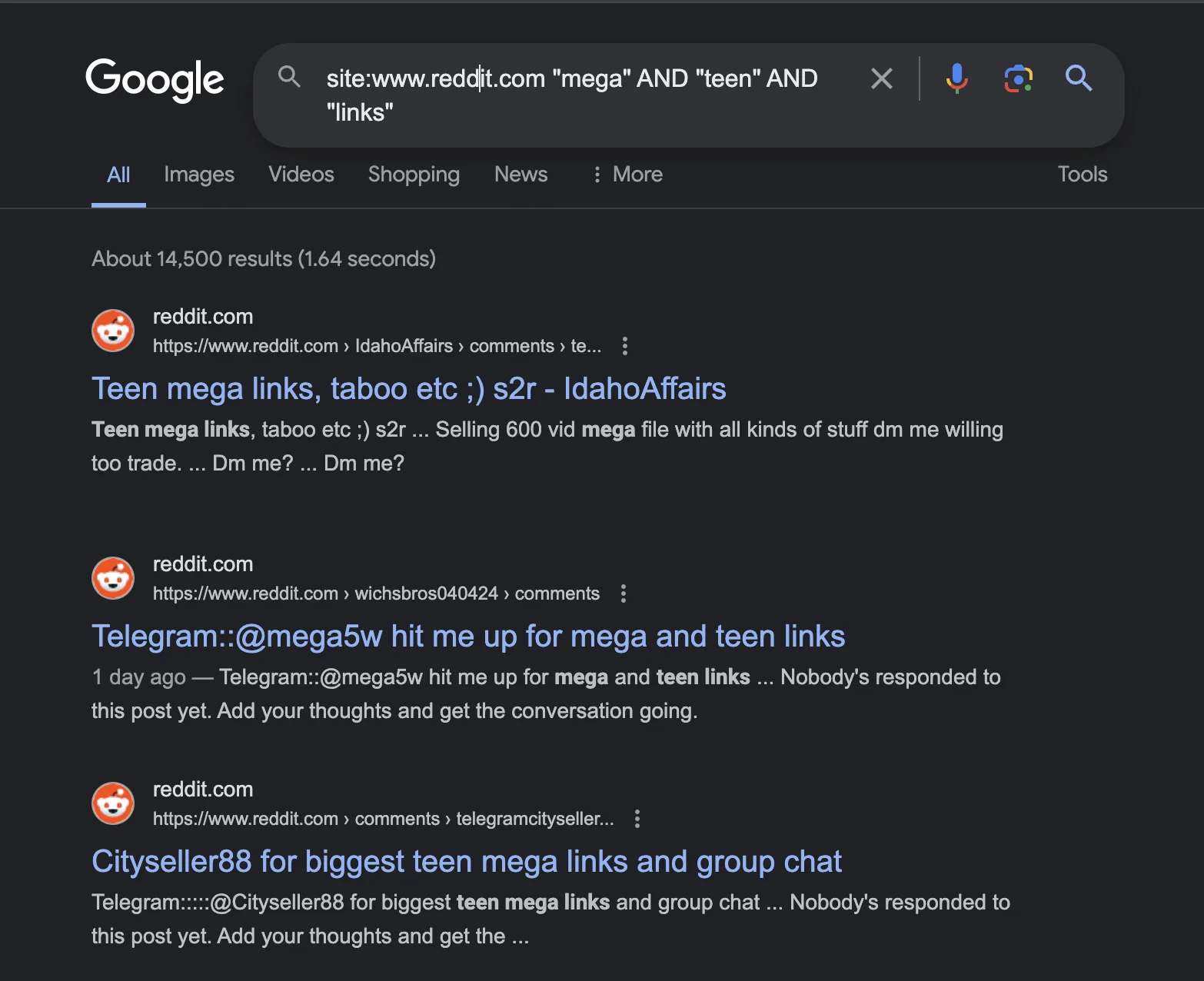

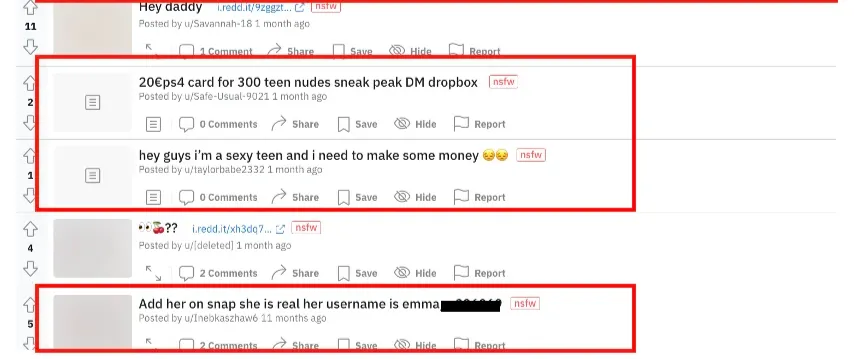

Child Sexual Abuse Material (CSAM) and Sexualization of Minors

Because Reddit refuses to implement meaningful age or consent verification for the sexually explicit content that is constantly being shared on its platform, Reddit is a hub not only for IBSA, but for child sexual abuse material (CSAM) as well.

February 2024 research found that Reddit is one of the top eight social media platforms child sex abuse offenders used to search for, view, and share CSAM, or to establish contact with a child. It is also common for children to share their own self-generated CSAM (SG-CSAM) through Reddit. 2022 research from Thorn found that 34% of minors who use Reddit at least once a day have shared their own SG-CSAM, 26% have reshared SG-CSAM of others, and 23% have been shown nonconsensually reshared SG-CSAM.

NCOSE researchers were easily able to identify strong indicators of CSAM on Reddit. For example, when we analyzed the top 100 pornography subreddits (what is tagged on Reddit as “NSFW”), we found titles like “xsmallgirls” and references to flat-chestedness. Further, when we conducted a Google search for “site:www.reddit.com “mega” AND “teen””, we were shown 14,500 results for content on Reddit containing strong indications of CSAM sharing, trading, and selling.

Even with the Sensitive Content filter ON to block sexually explicit results (not to mention Reddit’s policies banning sexualization of minors), NCOSE researchers were exposed to links and images sexualizing minors labeled “teen nudes,” one of which was a link to a minor no more than ten years old.

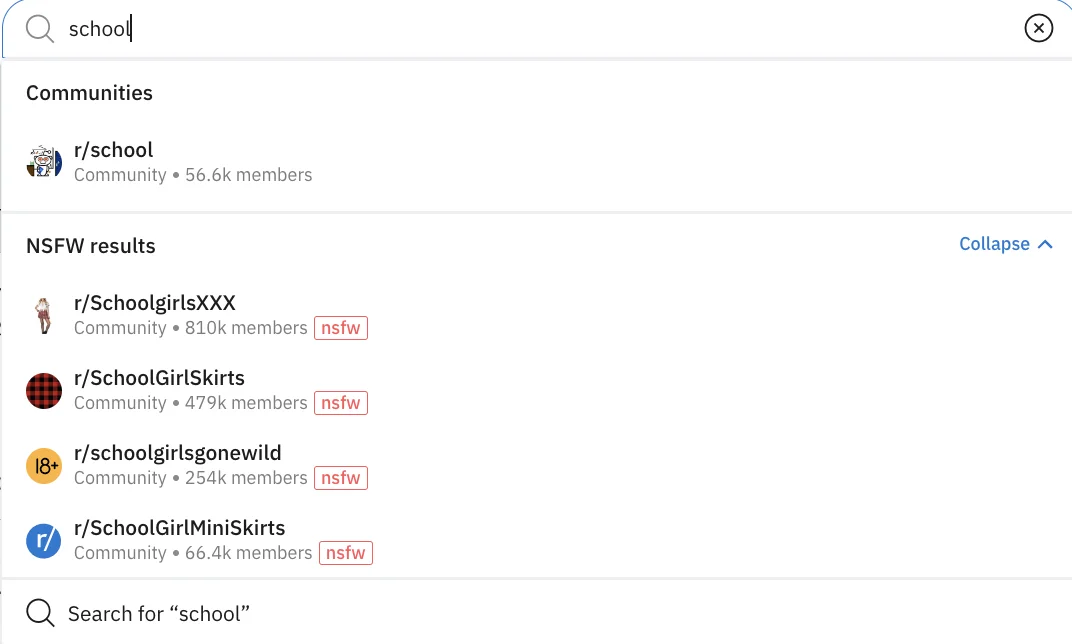

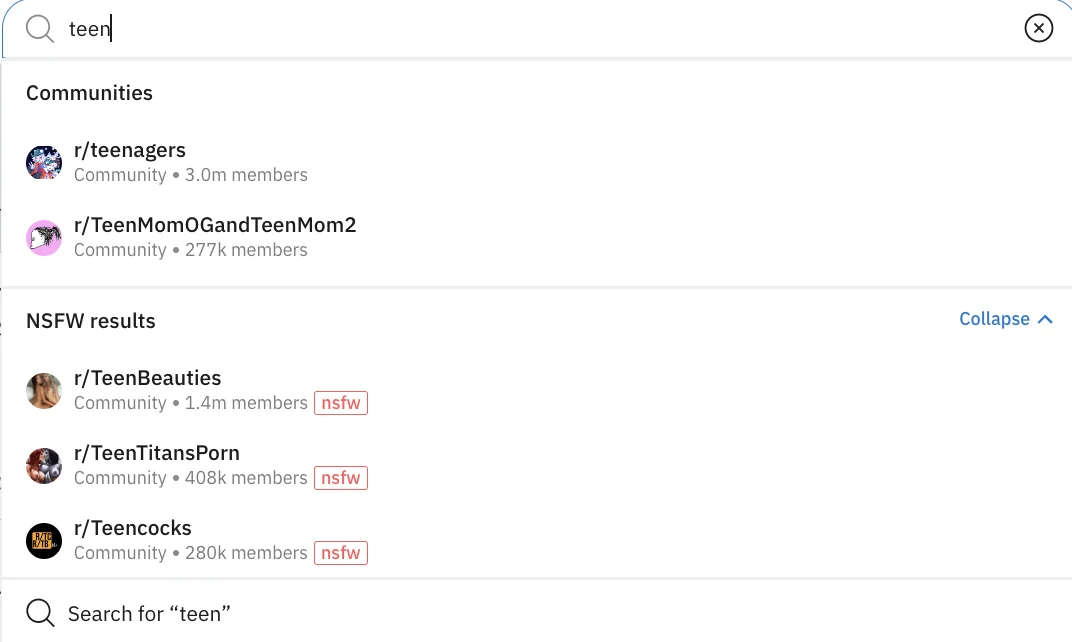

When NCOSE researchers typed in seemingly innocuous search terms like “school” and “teen,” subreddits sexualizing minors were immediately suggested by Reddit’s search algorithms.

ACTION: Urge Reddit to Reform!

We are demanding Reddit make meaningful changes to curb the sexual exploitation on its platform, including implementing robust age and consent verification, banning all forms of AI-generated pornography, and more.

Please join us in calling on Reddit to change! It only takes 30 SECONDS to complete the action below.