Recently, news broke about 12-year-old students in Florida who were victimized through AI-generated child sexual abuse material (CSAM, the more apt term for “child pornography”). Male peers took innocuous images of the students and used AI to render them pornographic. They proceeded to share the forged CSAM with others, all without the depicted children’s knowledge.

“It makes me feel violated,” one victimized child said, after discovering the abuse. “I feel taken advantage [of] and I feel used.”

This is only one of many high-profile cases in which AI has been used to create sexual abuse images of children and adults alike. One way to do this is with “nudifying apps,” which make it possible to “undress” innocuous images of a person.

VICTORY! Apple Removes Nudifying Apps from App Store

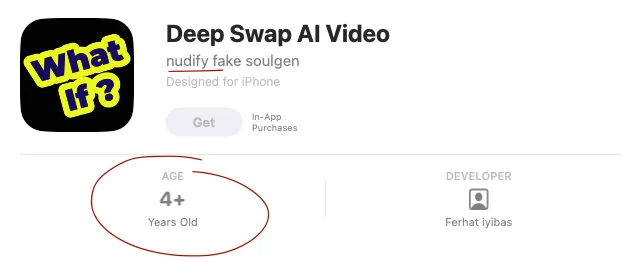

Now, here’s something really scary: Until very recently, nudifying apps were easily accessible on the Apple App Store, and were rated for ages 4+.

“Until very recently?” you may ask. “What happened since then?”

Answer: YOU DID!

After you took action through our Dirty Dozen List campaign against Apple, the company removed all four of the nudifying apps we brought to its attention. The 2.2 billion active Apple devices across the globe are now promoting less sexual exploitation!

We are so grateful to you for making this victory possible!

But the fight is far from over. There are still many ways Apple is facilitating sexual abuse and exploitation. We need your help to keep pressing the company to change!

CSAM Stored on iCloud

In 2021, Apple abandoned its plans to detect child sexual abuse material (CSAM) in iCloud. Other companies in the industry proactively scan for CSAM and provide the National Center for Missing and Exploited Children’s CyberTipline and law enforcement with tens of millions of leads a year. By contrast, the following year Apple reported only 234 pieces of CSAM.

Apple’s policy decisions have monumental impacts on an entire generation of young people. Apple must prioritize the safety of its child and teen users.

Though Apple removed the nudifying apps we brought to its attention, this offers little solace to victims who know that their sexual abuse material can be stored indefinitely in iCloud. Sexual exploitation of minors is at an all-time high, yet Apple refuses to detect known CSAM in iCloud. With 87% of US teens owning iPhones, it is likely that iPhones are disproportionately used to record, share, and obtain CSAM.

Apple’s decision not to detect CSAM runs directly counter to the 90% of Americans who believe it is Apple’s responsibility to do so.

Apple Refuses to Turn On Safety Settings by Default for All Minors

Thanks to you joining us in pressing Apple, the company now automatically blurs nude images and videos for children 12 and under in iMessage, FaceTime, AirDrop, and Photos. A safety message also appears when a child in this age group is about to send a nude image. However, these common-sense safety features are not automatically activated for 13- to 17-year-olds.

If CSAM describes sexually explicit material depicting anyone under 18 years old, and pornography is intended to be an 18+ product, why does Apple not automatically enable these safety features for all minors?

The importance of these safety features cannot be overstated. Predators commonly send sexually explicit images to minors as a way of grooming them, usually asking for images in return. Once the predator obtains sexually explicit material of the child, they may share or post it online, or use it for sextortion (i.e., blackmailing the child to extort money, more images, or other demands). A 2024 report from Thorn and the National Center for Missing and Exploited Children found that 14- to 17-year-old boys account for 90% of all cases in which minors were sextorted for money. Apple is withholding default safety settings from the very children who are most at risk!

Deceptive Ratings on the Apple App Store

The App Store’s age rating and description system has routinely failed to reflect the dangers of some apps. Take, for example, the recently removed nudifying apps, which were rated for ages 4+.

Parents and caregivers rely on Apple’s ratings and descriptions to judge whether apps are safe for their children. So when Apple accepts apps with deceptive descriptions or misleading age ratings, it is child users who pay the price.

Apple has immense power and control in the world of technology. The App Store is vital for companies trying to reach an audience. Given the massive revenue Apple earns from app and in-app purchases, the company has the resources to verify app descriptions and the content of the ads within them.

Beyond inaccurate app descriptions and age ratings, Apple has failed to disclose many important shortcomings of its App Store. Parents and guardians should be made aware of potential dangers in apps, such as interaction with adult strangers, that are not mentioned in descriptions or factored into age ratings.

Apps in the App Store are subject to Apple’s discretion, and the multitrillion-dollar corporation needs to uphold its own terms of service.

Apple: With Great Power Comes Great Responsibility

Ninety percent of Americans believe that Apple has a responsibility to detect CSAM. With a market cap of almost $3.3 trillion, Apple has the means to develop measures that keep children safe on its devices. We implore Apple to use its vast resources to help protect the millions of children who use its devices.

Apple places great emphasis on privacy, and the safety of children should be part of that commitment. Right now, its privacy protocols prioritize predators’ access to CSAM and image-based sexual abuse (IBSA) over the privacy of children.

Incentives must be put in place to make Apple prioritize the safety of its child users; ignoring this problem will have unforeseen negative impacts on an entire generation. We call on Apple to use its market dominance for the good of people rather than to maximize profits.