Reddit promotes itself as the “frontpage of the internet,” but unfortunately this extremely popular news aggregation and discussion website is frequently used more as a “backpage”—a forum where sex buyers, traffickers, and other predators anonymously post and view hardcore pornography and non-consensually shared images and videos, promote prostitution, and advise each other on how to purchase and abuse children and adults alike.

Reddit was named to the National Center on Sexual Exploitation’s 2021 Dirty Dozen List for creating a “hub of exploitation.” Investigative reports and our own researchers have found countless subreddits (forums dedicated to specific topics on Reddit) normalizing and depicting incest, non-consensually recorded and/or shared images, pornography (much of which could fall under federal obscenity laws), and prostitution promotion. Many of these subreddits also normalize and even host child sexual abuse material.

Reddit is one of the few mainstream social media platforms, along with Twitter, that still allow pornography, while others such as Facebook, Instagram, YouTube, Tumblr, TikTok, and Snapchat have banned pornography and even nudity. Sexually explicit content, including pornography, can still be found on several of these platforms, but they have at least enforced their policies to varying degrees through reporting tools, technology to identify and remove problematic content, and human reviewers (usually trained employees or contractors). Reddit has taken none of these measures.

In a recent interview with Axios, Reddit CEO Steve Huffman asserted that allowing pornography would continue to be part of Reddit’s mission while, in the same breath, conceding that “much of pornography is [exploitative.]” He went on to say that “exploitative” pornography is not the type of pornography he wants on Reddit and stressed that Reddit “has rules.”

As is typical of so many corporations, policies exist on paper for the protection of the business—but are not put into practice for the protection and wellbeing of the people using their products.

Despite the well-documented sexual exploitation on its platform, and even the CEO’s acknowledgement that much of pornography is exploitative, Reddit hasn’t made any significant effort to stem the harmful and abusive content on its site.

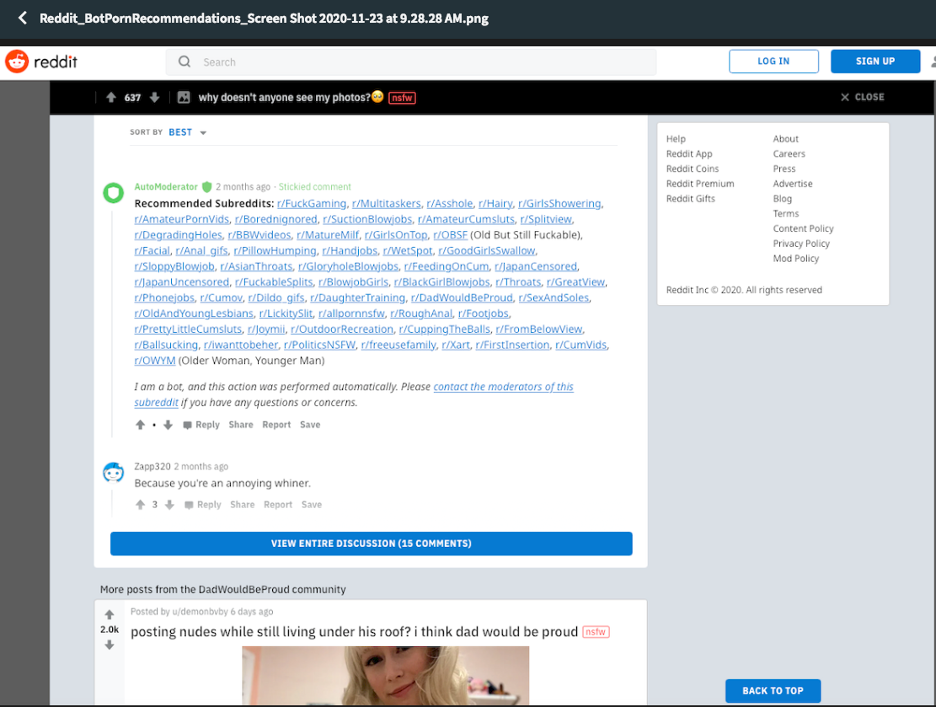

Reddit—which doubled in value from $3 billion in 2019 to $6 billion in 2020—relies on volunteer content moderators and hasn’t invested in technology widely used by other tech companies to identify and root out sexually exploitative material. In fact, it seems that AI technology in the form of bots is used to further and encourage redditors’ access to harmful content—offering subreddits with similarly exploitative themes.

The refusal to employ technology to better protect its users is especially ironic given that Reddit board member and early investor Samuel Altman is also the co-founder and CEO of OpenAI, a for-profit research company whose goal is to advance digital intelligence in a way that is most likely to benefit humanity as a whole, rather than cause harm.

Reddit’s reliance on volunteer moderators, and its trust that these untrained, self-appointed arbiters will act in good faith, has proven to be a failed experiment. Reddit must reinvent its content regulation.

At a time when sexual assault, child sex abuse material, non-consensually shared images, and trafficking threaten the safety and wellbeing of so many individuals—and therefore society as a whole—corporations have a social obligation to stem these horrific abuses facilitated on their platforms. Specifically, Reddit must:

- Verify consent of any posted images or videos

- Insist on documenting the identity of anyone uploading NSFW material

- Permanently ban users violating Reddit policies on CSAM and non-consensually recorded or shared intimate images

- Invest in technology to scrape and remove harmful content

- Pay and train moderators to identify and remove exploitative material rather than relying on volunteer moderators

Reddit should also consider banning all pornography, at the very least until the above measures are instituted. The unfortunate reality is that even with safety measures and verification methods in place, sex traffickers and predators know how to manipulate people and systems alike. No person or bot can ever really know whether someone posting nude pictures or promoting paid sex is doing so with full, enthusiastic consent, or is a victim of economic circumstances or at the mercy and manipulation of another person. Corporations should err on the side of the safety and security of their users (and possible unconsenting victims) rather than protect predators’ and perverts’ desires with disingenuous professions of women’s empowerment and free speech. At a bare minimum, platforms should do everything they can to make trafficking and exploitation through their products as difficult as possible and proactively root out criminal and predatory behavior.

If Reddit and other social media platforms don’t regulate themselves with the urgency and scope that the gravity of these issues requires, they must be held accountable through external pressure. Indeed, we are witnessing a long-overdue wave of litigation, such as the federal lawsuit against Twitter; criminal investigations, such as the one into Pornhub’s parent company Mindgeek; and legislative action through bills such as the EARN IT Act. That bill, which would revoke Big Tech’s immunity from liability for sexual exploitation of children on their platforms, was introduced in the US Senate in 2020 with strong bipartisan support and will likely be reintroduced in the 117th Congress.

If Reddit doesn’t make some significant changes quickly, it should expect to be held to account in court: either that of public opinion or of the law.