With more than 500 million active users worldwide, TikTok is a social media app for creating and sharing short videos, and it is well known for its popularity with minors.

TikTok has been placed on the 2020 Dirty Dozen List due to lack of moderation and insufficient safety controls, which put minors at risk of exposure to sexually graphic content and grooming for abuse.

While TikTok has recently made attempts to increase security measures and launch online safety campaigns in response to child privacy law violations, it continues to operate in a way that fails to protect its users.[1]

TikTok Safety Problem #1

By default, all accounts on TikTok are public, which means that anyone on the app can see what users, including minors, share.[2] Instead, TikTok should default to safety, so that minors’ accounts are automatically set to private when they are first created.

TikTok Safety Problem #2

While TikTok’s Digital Wellbeing tools attempt to allow parents to limit screen time and potential

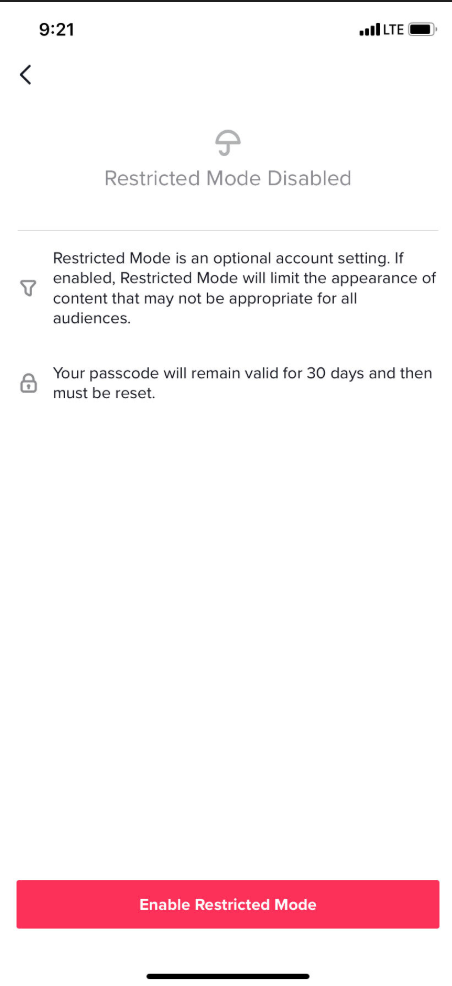

exposure to sexually graphic content, these tools are insufficient and are easily altered after initially being set up. Both the Screen Time Management [described by the app as a took ti “manage your screen time” with available time limit options as 40 minutes, 60 minutes, 90 minutes, and 120 minutes—with 60 minutes being the suggested default] and the Restricted Mode [described by the app as “an optional account setting If enabled, Restricted Mode will limit the appearance of content that may not be appropriate for all audiences”] both require a passcode to enable and disable the settings. However, according to the app, for both settings “your passcode will remain valid for 30 days and then must be reset.”

This places an unreasonable and undue burden on minors to opt in to protecting themselves again and again and again, and it is reasonable to assume that TikTok disables Restricted Mode every 30 days because doing so increases profits, which TikTok is prioritizing over safety on its app.

TikTok Safety Problem #3

Further, the process for reporting content and accounts that exploit or harass is inefficient. To create a report, the user must go to the profile of the predator on which the inappropriate content appears. Additionally, reports have been ignored or overlooked to the point where a petition was created and signed by 2,500 people in order to draw attention to the issue.[3]

TikTok needs to invest in improved reporting processes and greater transparency about the reporting review and protective action process.

Take Action Here: