Caroline’s (pseudonym) parents were startled when a strange man showed up on their doorstep one day, asking to see their 12-year-old daughter.

They soon discovered this was no accident. The 20-year-old man is accused of grooming Caroline for months on the Internet, sending her graphic sexual images and then coercing her to do the same. After chatting online for a while, the two arranged to meet in person. It was then that he appeared at their front door.

The parents quickly uncovered what had been going on and reported it to the police. But they kept wondering how she came into contact with this man. And how were the two able to trade such sexually explicit content back and forth with no repercussions from the online platform?

The answer lies in the platform they were chatting on: Discord, which is notorious for inadequate safety measures that have made it a hotbed for this kind of exploitative behavior.

Caroline’s parents were heartbroken. They were disappointed in themselves for not seeing any warning signs. But the truth is, it doesn’t matter how diligent a parent is. Platforms like Discord make it remarkably easy for predators to manipulate, groom, and exploit children.

Discord: A Long-Time Dirty Dozen List Target

Discord proudly touts 150 million monthly active users, but what it doesn’t want to brag about is its four-year residency on the Dirty Dozen List.

Predators flock to Discord to coerce children into sending them sexually explicit images, also known as child sexual abuse material (CSAM), which they then trade among themselves on different servers. They also frequently use artificial intelligence to create CSAM and image-based sexual abuse (IBSA), a form of sexual violence that includes the non-consensual creation or distribution of sexually explicit images.

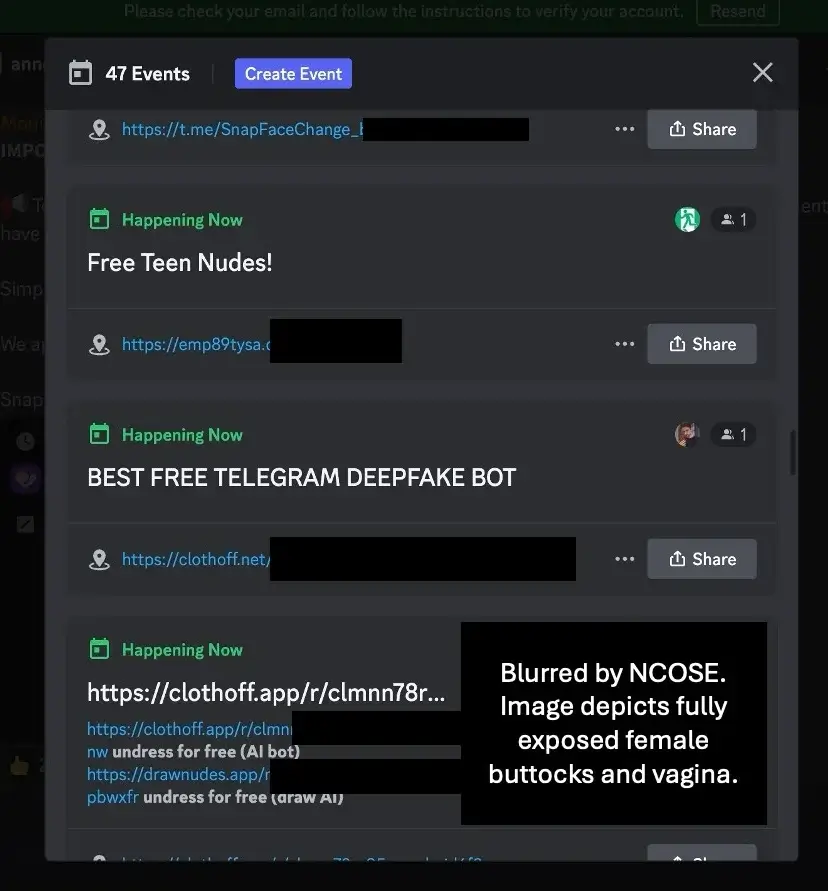

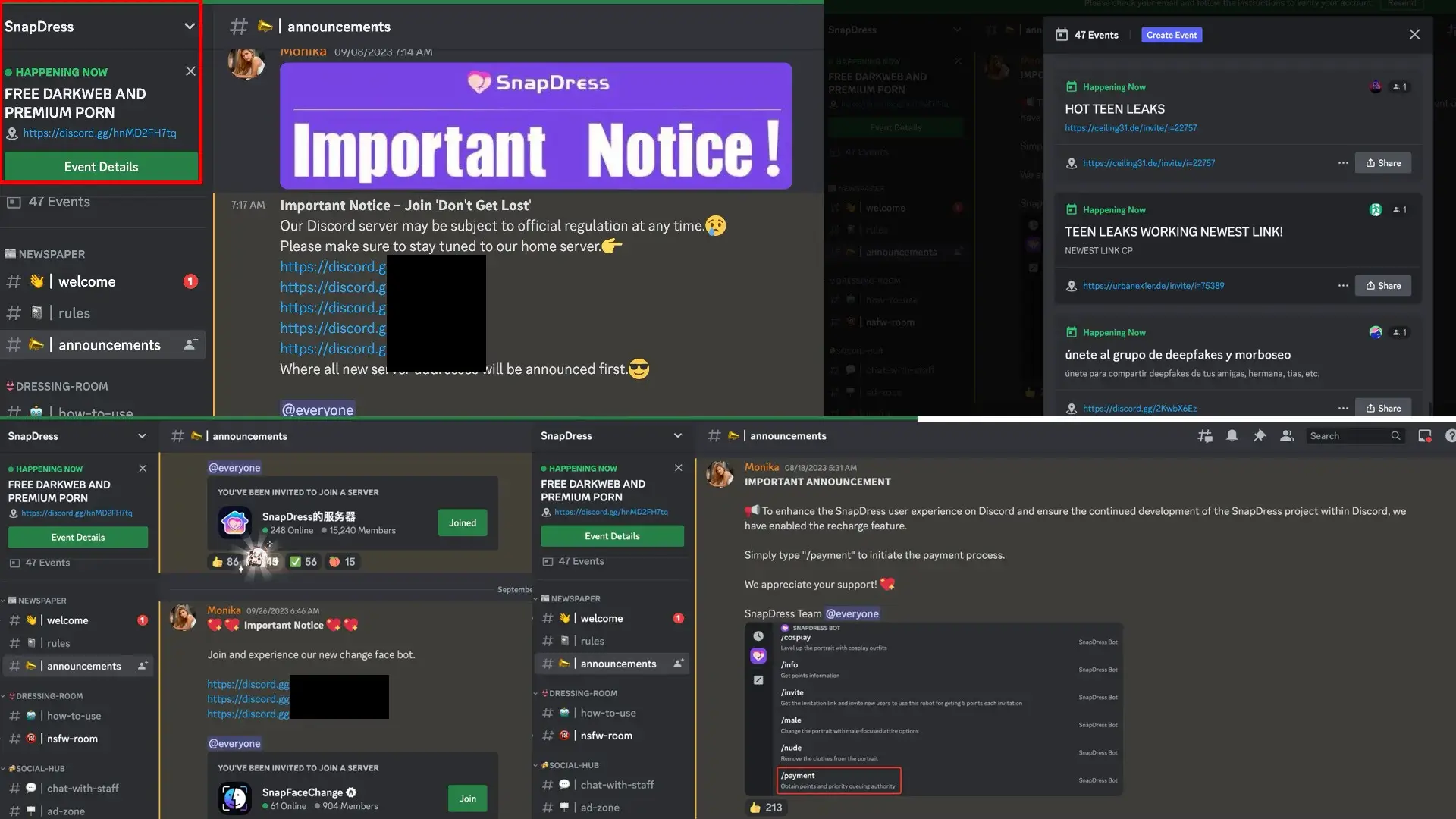

One Discord server known as “SnapDress” hosted 47 events in one day dedicated to AI-generated IBSA (commonly called “deepfake pornography”) using an AI bot that digitally “strips” women of their clothing in images. NCOSE discovered this server had 24,000 members. Discord removed the bot multiple times, but with each removal, users would simply create another server with the same purpose. This sheds light on how ineffective Discord’s “whack-a-mole” approach to moderation is.

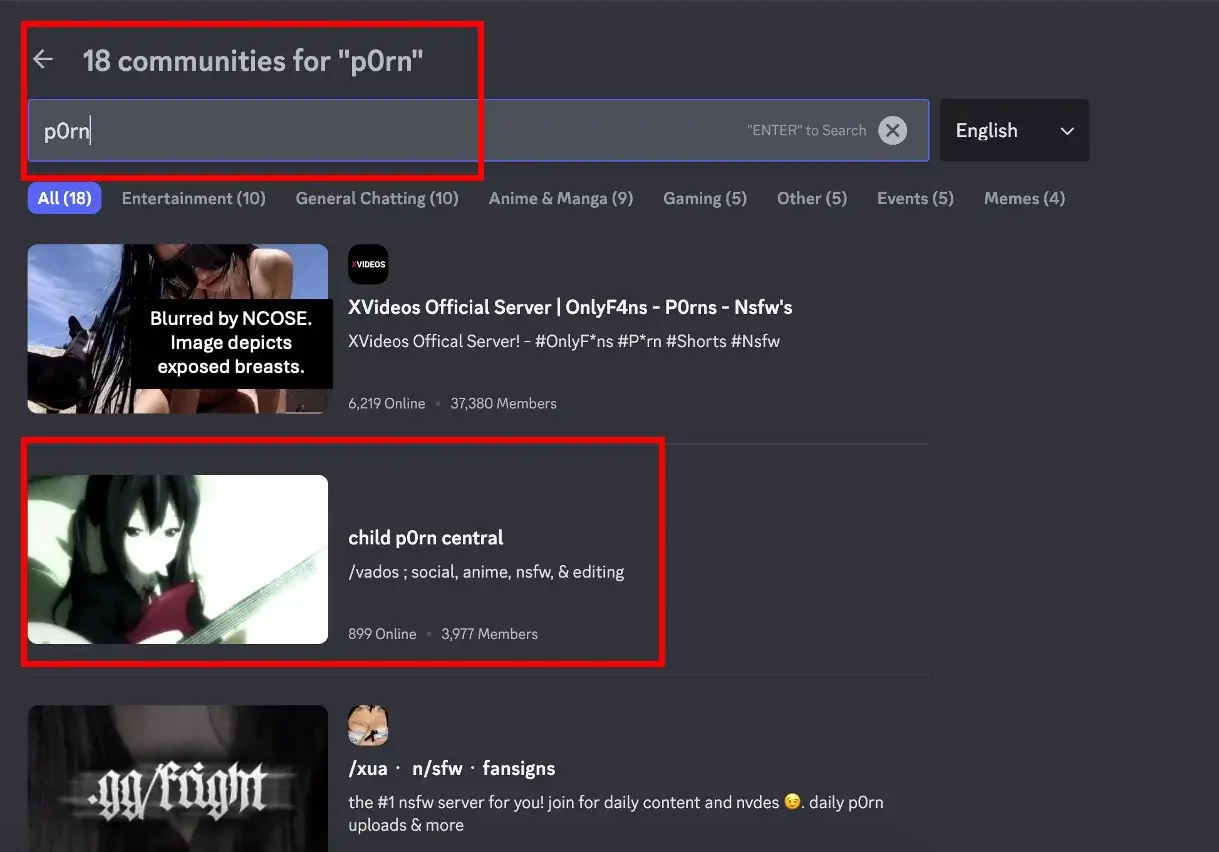

There are also dozens of servers blatantly used for the purpose of trading child sexual abuse material. For example, in what seemed to be a server for troubled teens, NCOSE researchers were immediately and inadvertently exposed to dozens of images and links of what appeared to be CSAM. The images and links contained disturbing titles and comments by other users. Some servers are even titled “child pornography.”

Discord’s Faulty Safety Measures

Even though Discord claims to have made changes to prevent exploitation, these changes are merely performative. NCOSE and other child safety experts have proven them to be defective.

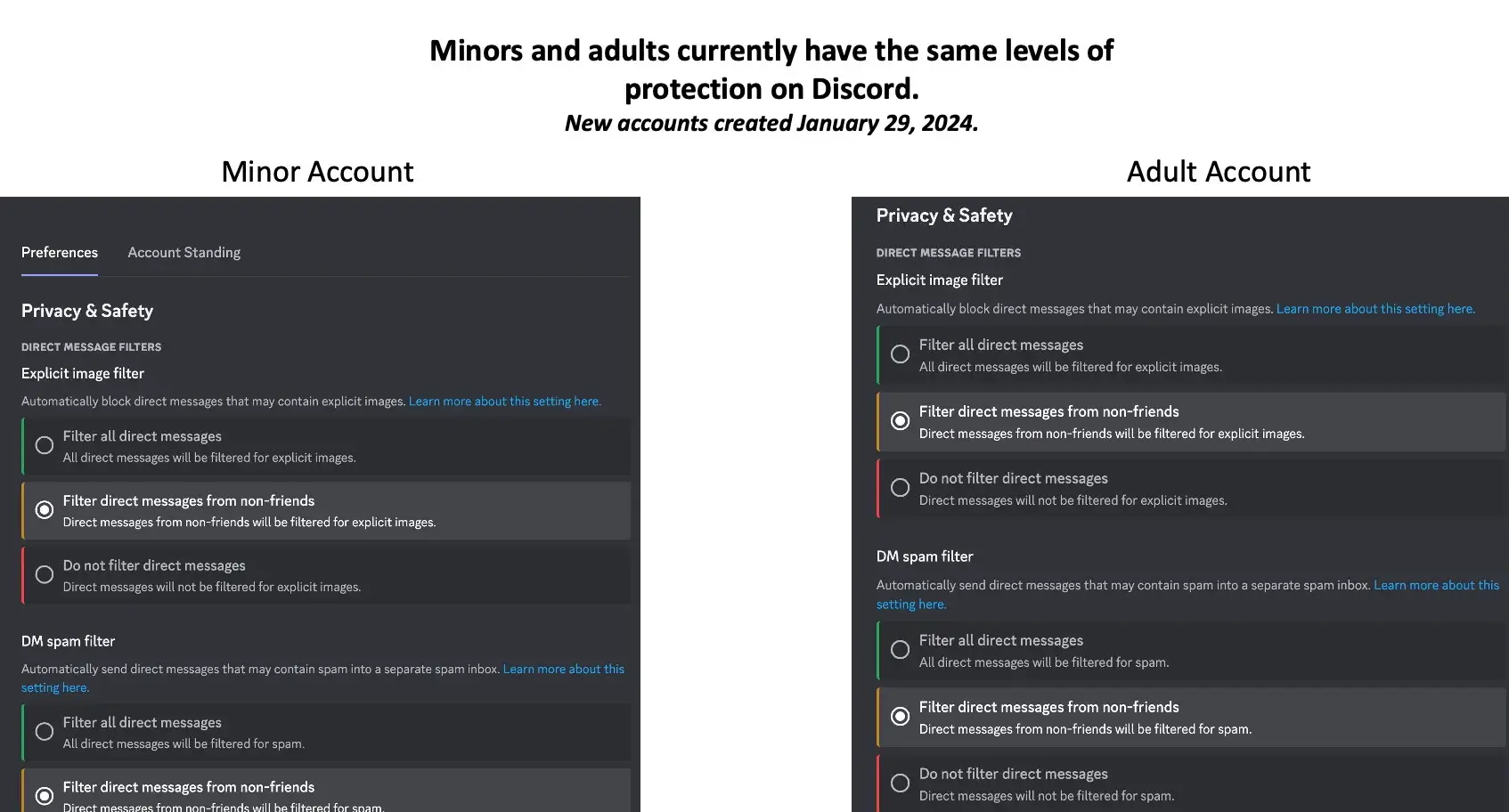

Teen Safety Assist, one of the so-called safety protocols implemented by Discord, is supposed to blur sexually explicit content by default. It’s also supposed to include security warnings to child users when unconnected adults send them direct messages. Yet NCOSE researchers were able to send sexually explicit content from an adult account to an unconnected 13-year-old account, and no warning or resources were displayed. Furthermore, adults and minors still have the same default settings for explicit content, meaning minors still have total access to view and send pornography and other sexually explicit content on Discord servers and in private messages.

NCOSE reached out to Discord multiple times in late 2023 offering to share our test results of their new “safety” tool for teens and send them evidence of activity that showed high indicators of potential CSAM sharing. We never heard back.

Discord CEO Jason Citron has continuously lied to Congress and the American people, testifying that Teen Safety Assist works as described, even though NCOSE disproved this claim.

Mr. Citron also resisted appearing before Congress to testify at a hearing on online harms to children early last year and had to be issued a subpoena, which he refused to accept! Eventually, Congress was forced to enlist the U.S. Marshals Service to personally serve the subpoena on the CEO.

NCOSE’s Requests for Improvement

Children can access this dangerous app with essentially zero guardrails, leaving them extremely vulnerable to abuse and exploitation by the vast number of predators that inhabit this platform. Discord’s continued refusal to protect its child users showcases just how dangerous this platform is.

NCOSE recommends that Discord ban minors from using the platform until it is radically transformed. Discord should also prohibit pornography until substantive age and consent verification for sexually explicit material can be implemented. Without this, IBSA and CSAM will continue to plague the platform.

Accounts registered to minors should be defaulted to the highest safety settings, which include age-gating servers with sexually explicit material. Discord also needs to update its parental controls to monitor friend requests.

Tech companies frequently declare that they value the safety of their users. However, when this lack of safety is what’s making them money, suddenly, safety doesn’t seem so important to them anymore.