As you settle in at your work desk, you suck in a long breath in an attempt to calm your fraying nerves.

Like every morning, it was nothing short of a miracle that you managed to wrangle your kids into the car, drop them off at two different schools, and still make it to work just in time.

Now you open your email, bracing yourself for the slew of time-sensitive demands that have undoubtedly come in overnight …

Instead, the first thing you see is: “ACTION ALERT! Call on Instagram to stop facilitating child sexual exploitation!”

Your brow furrows. With a strange sense of unease, you open the email and fill out the action form.

‘Does signing these petitions and actions actually help anything?’ you can’t help but wonder.

You care about these issues and you wish you could get more involved—but frankly you just don’t have time. Is keeping up with these email action alerts nothing more than a way to appease your guilty conscience?

***

If this story strikes a chord, then we have good news for you:

YES, signing our actions DOES do something!!

Just six weeks after the launch of our 2023 Dirty Dozen List, we have already seen victories and progress with seven different corporations! Thanks to an army of grassroots advocates like you, progress that used to take years to achieve is now happening, on average, in less than a week!

Read on to learn about all the exciting updates!

Kik has made multiple radical changes that NCOSE suggested

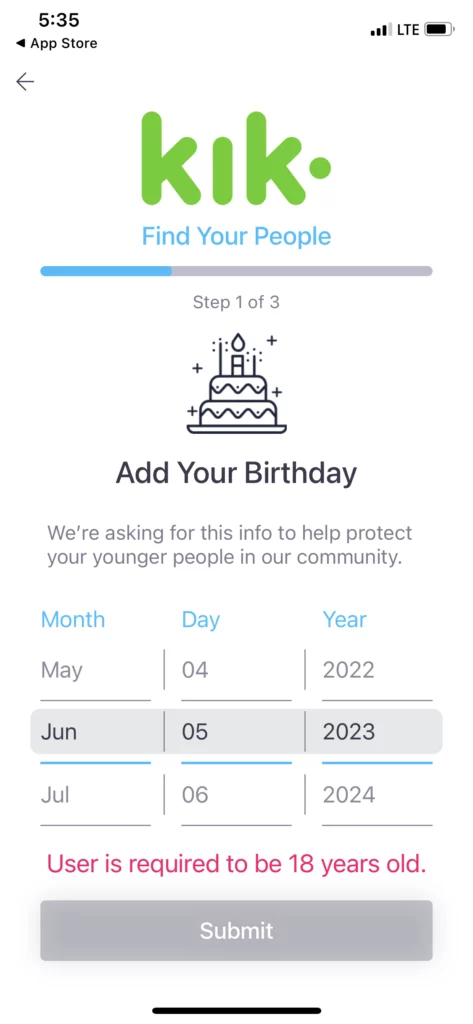

NCOSE researchers found so much illegal and harmful behavior on Kik that we strongly recommended banning minors from the platform (not a request we normally make). Although Kik made no announcement and its policy page is inactive, NCOSE researchers checked the app last week and were shocked to see that it had become an 18+ platform!

Granted, it is still possible to enter a fake birth date, but this is an improvement, especially since Kik previously marketed itself primarily to teens. Kik also now requires users to provide a valid email address to create an account, another change NCOSE requested.

Significant steps have also been made to limit sexually explicit and other harmful content—including implementing our recommendation to automatically turn on the explicit content filter for all accounts, and having bots monitor livestreams for nudity and inappropriate content.

Further, Kik created an option to block direct messages from strangers, after we provided evidence of how the direct messaging feature was being used by predators to groom children.

VICTORY! After being put on the 2023 Dirty Dozen List, Kik has implemented almost all of NCOSE's recommended safety changes! #DDL2023

Reddit restricts access to sexually explicit content through third-party apps … and NCOSE is being given credit

The CEO and co-founder of Reddit just announced that the platform will soon limit access to pornography and sexually explicit content (what Reddit terms “not safe for work”) through third-party apps, meaning it may soon be impossible to view porn on Reddit through unofficial apps. This is significant: one “NSFW” subreddit conducted a survey revealing that 22.4% of its members use third-party apps to access the subreddit.

Reddit’s CEO goes on to say it’s “a constant fight” to keep allowing explicit content on the platform, noting that the regulatory environment has gotten “much stricter about adult content, and as a result we have to be strict/conservative about where it shows up.”

A recent Vice article covering this story cites NCOSE’s efforts to hold Reddit accountable for image-based sexual abuse and CSAM, and bemoans Reddit’s recent move as “telling of the increasing hostility against adult content that platforms face from banks, legislators, advertisers, and moral crusaders.”

Reddit also banned the use of “save image/video” bots within their API (application programming interface). NCOSE specifically requested banning these bots, as they contribute substantially to the circulation and collection of image-based sexual abuse, child sexual abuse material, sexually explicit imagery, and pornography on the platform.

Progress! Reddit has restricted access to sexually explicit content through third-party apps and banned "save image/video" bots for sexually explicit content! #DDL2023

Apple to automatically blur nudity for kids 12 and under

Our contacts at Apple reached out to let us know about major improvements to their nudity blurring feature that NCOSE has been asking for and advising Apple about since the feature was originally announced nearly two years ago.

With the fall iOS update, images and videos containing nudity will be automatically blurred for kids 12 and under in iMessage, FaceTime, AirDrop, and the Photos picker. Teens and adults will also be able to turn the feature on as an opt-in. Previously, the blurring feature had to be turned on by parents, was unavailable to anyone over 13, detected only still images, and worked only in iMessage.

That’s not all: Apple has already made its blurring technology available to other apps for free through an API (application programming interface)! This means that apps running on iOS can apply the nudity blurring feature on their own platforms. Discord said it plans to use it, and NCOSE has already reached out to contacts at other major social media companies like Snapchat and TikTok to urge them to accept Apple’s invitation. As my 13-year-old daughter quipped: “Well, those companies definitely have no excuses now!” We commend Apple for this admirable move, which signals a commitment to cross-platform collaboration on child safety.

You can read our press release on Apple’s changes for more details.

BREAKING: In a major update, Apple’s nudity detection and blurring feature—applying to both videos and still images—will be on by default for accounts age 12 and under! Accounts age 13+ can also opt in, and the tech is offered for other platforms to use. https://t.co/FlXpLmmE71

— National Center on Sexual Exploitation (@NCOSE) June 8, 2023

While NCOSE is thrilled about these improvements, the Apple App Store remains on the 2023 Dirty Dozen List for deceptive app age ratings and descriptions that grossly mislead parents about the risks, content, and harms on available apps—thereby putting children at serious risk (so please take our action asking them to do better!).

Discord responds to being on the Dirty Dozen List and tests parental controls

Discord has been on NCOSE’s Dirty Dozen List for three years in a row … and they were not happy to be placed on the List again for 2023.

Responding to a news article about the Dirty Dozen List, Discord dishonestly claimed that it removes child sexual abuse material “immediately” upon becoming aware of it. NCOSE clapped back with this press release, showing that Discord is in fact one of the slowest corporations to remove or respond to CSAM.

Coincidentally (?), a few weeks after the Dirty Dozen List Reveal, Discord confirmed that it is testing parental controls, something NCOSE has been pressing it to implement since 2021. These controls would purportedly give parents more oversight of Discord’s youngest users through a “Family Center,” where parents could see the names and avatars of their teen’s recently added friends, which users the teen has directly messaged or engaged with in group chats, and which servers the teen has recently joined or participated in.

We look forward to pressure-testing the tools once they are released, while continuing to demand Discord make its platform safer by design rather than primarily push responsibility to parents.

Progress! After being named to the Dirty Dozen List three years in a row, Discord confirms that it is testing new parental controls! #DDL2023

Instagram starts task force to investigate facilitation of child sexual abuse material

NCOSE has repeatedly pressed Instagram since 2019 to remove the extensive pedophile networks that operate openly on the platform. Because of Instagram’s inaction on this and other child exploitation and abuse issues, NCOSE placed it on the 2023 Dirty Dozen List.

NCOSE staff advised Wall Street Journal reporters whose joint investigation with the Stanford Internet Observatory produced this powerful piece: Instagram Connects Vast Pedophile Network. The article exposed not only Instagram’s inaction, but also its facilitation of CSAM sharing and the sexualization of minors.

In response to the WSJ exposé, Meta started a task force to investigate how Instagram facilitates the spread and sale of child sexual abuse material.

See our press release for more details.

Progress! Instagram starts task force to investigate facilitation of child sexual abuse material! #DDL2023

Snapchat promises NCOSE it’s making changes

Snapchat responded to its placement on the 2023 Dirty Dozen List in a detailed letter to NCOSE, stating that they “immediately conducted a comprehensive review of your concerns and recommendations to identify what actions we could take quickly, as well as additional tooling and product improvements that we could make longer term.”

Some of the initial actions Snap took included implementing consequences for the problematic content providers which NCOSE brought to their attention, conducting an audit of the effectiveness of Content Controls and other features in the Family Center, and initiating a deep-dive into their editorial guidelines for both Spotlight and Discover.

Snapchat is consistently ranked among the most dangerous platforms for kids. NCOSE was encouraged by Snapchat’s response, and we’re hopeful that they will now make some meaningful changes that truly make the platform safer for all their users.

Our team will be meeting with Snapchat later this summer to review promised improvements.

Twitter appears to improve faulty child sex abuse material detection system

NCOSE kept Twitter on the Dirty Dozen List this year because there were clear indicators that CSAM and child exploitation in general were increasing on Twitter after Elon Musk took over, despite his promise that tackling CSAM was priority #1.

Recently, Twitter supposedly fixed its child sexual abuse material detection system after a Stanford Internet Observatory report exposed that dozens of previously identified (hashed) CSAM images on Twitter were not being detected, removed, or blocked from being uploaded.

According to researchers, “Twitter was informed of the problem, and the issue appears to have been resolved as of May 20.”

That being said, Twitter has yet to confirm whether it is still using PhotoDNA or any other CSAM detection technology. NCOSE researchers will be carefully monitoring the situation on the platform.

It’s thanks to YOU that all this progress has happened.

You may be just taking a few seconds to sign a petition or complete a quick action form—but you are rocking some of the most powerful corporations in the world. You are catalyzing changes that have an impact on millions of kids and adults worldwide.

THANK YOU for using your voice to make a difference!